Azure Daily 2022

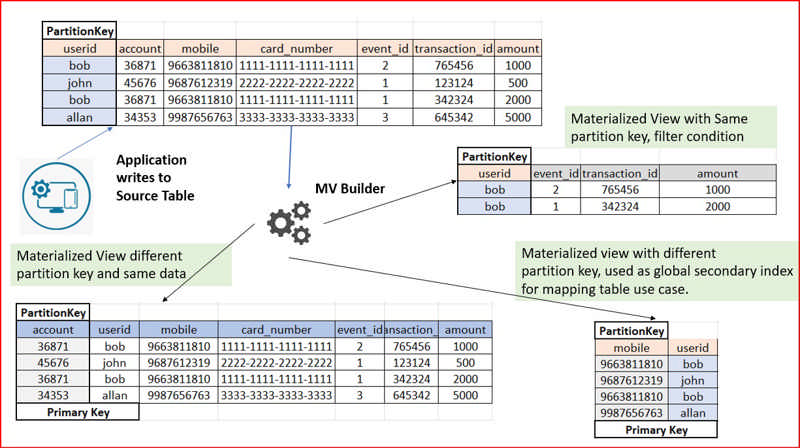

Materialized views let you create Apache Cassandra tables with different primary and partition keys from the source table. Because the service populates the materialized views automatically and asynchronously, this reduces write latency on the source table. You also benefit from low-latency point reads directly from the views and greater overall compatibility with native Apache Cassandra.
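To illustrate why asynchronous view maintenance keeps source-table writes cheap, here is a minimal in-memory sketch in Python. It is not how Azure Cosmos DB implements materialized views; the class name, the `user_id` and `email` columns, and the explicit `apply_pending()` step (standing in for the service's background population) are all hypothetical.

```python
from collections import defaultdict, deque


class BaseTableWithView:
    """Toy model: a base table keyed by user_id plus a view keyed by email."""

    def __init__(self):
        self.base = {}                 # user_id -> row (the source table)
        self.view = defaultdict(dict)  # email -> {user_id: row} (the view)
        self._pending = deque()        # changes not yet applied to the view

    def write(self, row):
        # A write touches only the base table and enqueues a change record,
        # so its latency does not include maintaining the view.
        old = self.base.get(row["user_id"])
        self.base[row["user_id"]] = row
        self._pending.append((old, row))

    def apply_pending(self):
        # Hypothetical stand-in for the background process that fills the view.
        while self._pending:
            old, new = self._pending.popleft()
            if old is not None:
                self.view[old["email"]].pop(old["user_id"], None)
            self.view[new["email"]][new["user_id"]] = new

    def read_by_email(self, email):
        # Point read on the view's own partition key.
        return list(self.view.get(email, {}).values())


t = BaseTableWithView()
t.write({"user_id": 1, "email": "a@example.com"})
print(t.read_by_email("a@example.com"))  # [] (view not yet populated)
t.apply_pending()
print(t.read_by_email("a@example.com"))  # [{'user_id': 1, 'email': 'a@example.com'}]
```

Until `apply_pending()` runs, a read against the view may not reflect the latest write; that lag is the trade-off asynchronous population implies.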

Source: Public Preview: Materialized view for Azure Cosmos DB for Apache Cassandra

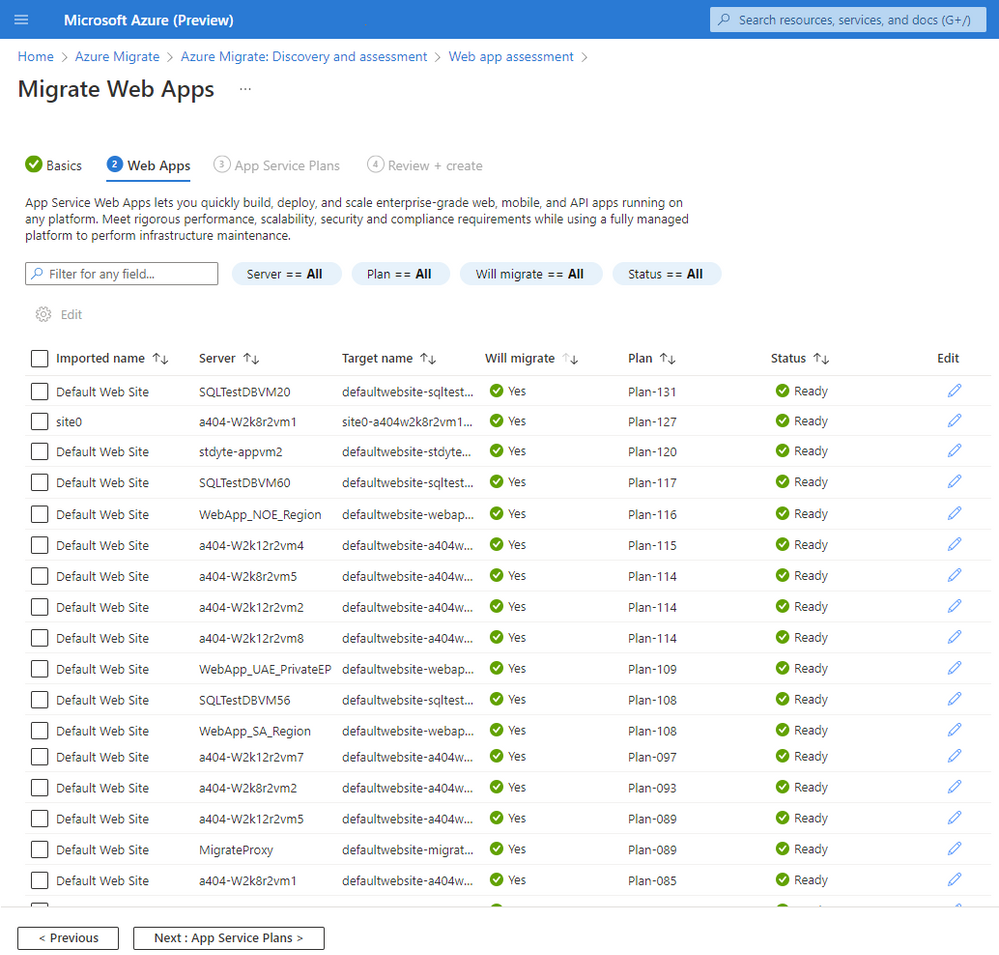

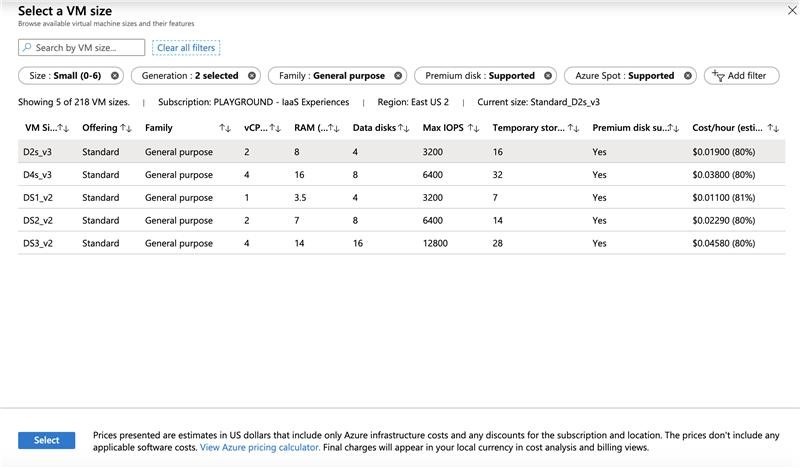

The Business case capability in Azure Migrate helps you build business proposals to understand how Azure can bring the most value. It can help you understand the return on investment for migrating your servers, SQL Server deployments and ASP.NET web apps running in your VMware environment to Azure. The business case can be created with just a few clicks and can help you understand:

- On-premises vs. Azure total cost of ownership and year-on-year cash flow.

- Resource utilization-based insights to identify servers and workloads that are ideal for the cloud, with right-sized recommendations in Azure.

- Quick wins for migration and modernization, including end-of-support Windows OS and SQL Server versions.

- Long-term cost savings from moving from a capital expenditure model to an operating expenditure model, paying only for what you use.

Build your first business case today.
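The year-on-year cash flow comparison comes down to simple arithmetic. The Python sketch below uses made-up annual costs (with a one-time migration cost in year 1 on the Azure side) to compute cumulative savings and the break-even year. It is an illustration only, not the calculation Azure Migrate performs.

```python
from itertools import accumulate


def cumulative_savings(on_prem_yearly, azure_yearly):
    """Cumulative (on-premises cost - Azure cost), one entry per year."""
    return list(accumulate(op - az for op, az in zip(on_prem_yearly, azure_yearly)))


def break_even_year(cumulative):
    """First year (1-based) in which cumulative savings are no longer negative."""
    for year, total in enumerate(cumulative, start=1):
        if total >= 0:
            return year
    return None  # never breaks even within the horizon


# Hypothetical costs in thousands of dollars per year.
on_prem = [100, 100, 100]
azure = [130, 70, 70]  # year 1 includes a one-time migration cost
savings = cumulative_savings(on_prem, azure)
print(savings, break_even_year(savings))  # [-30, 0, 30] 2
```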

Source: Public preview: Build a business case with Azure Migrate

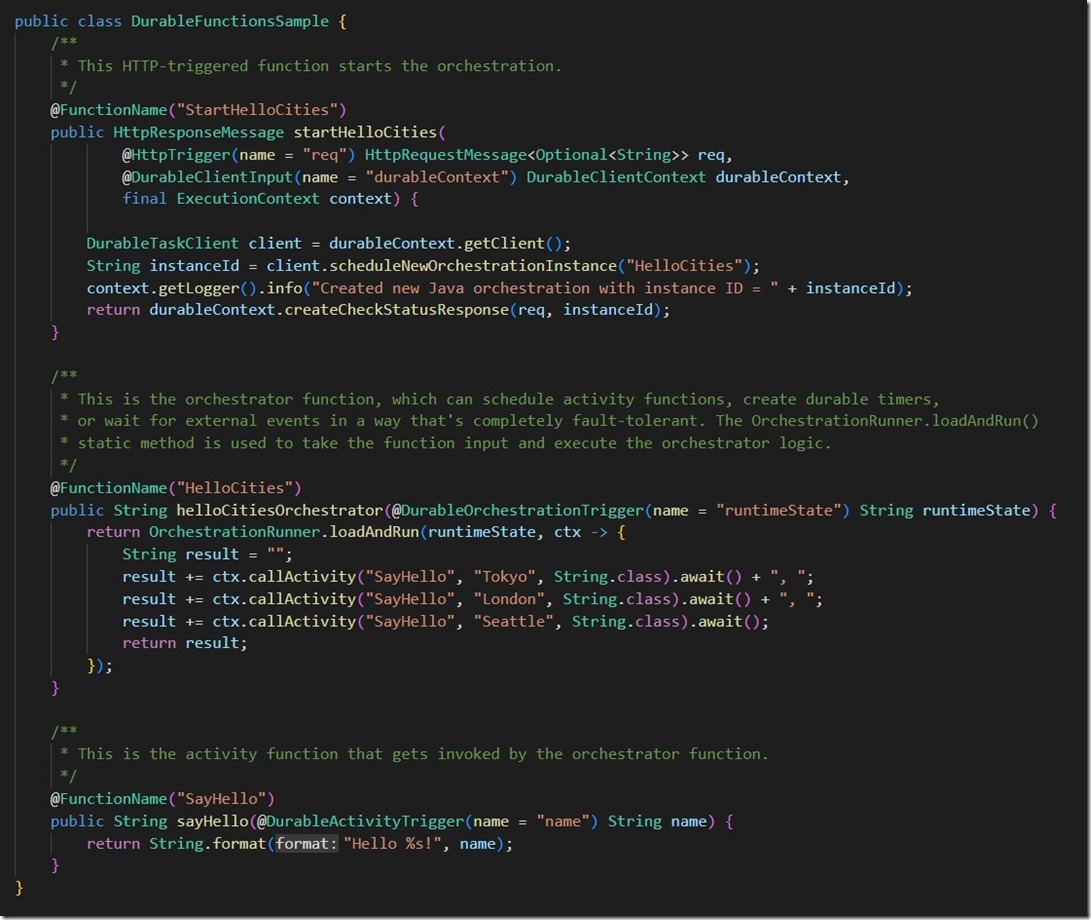

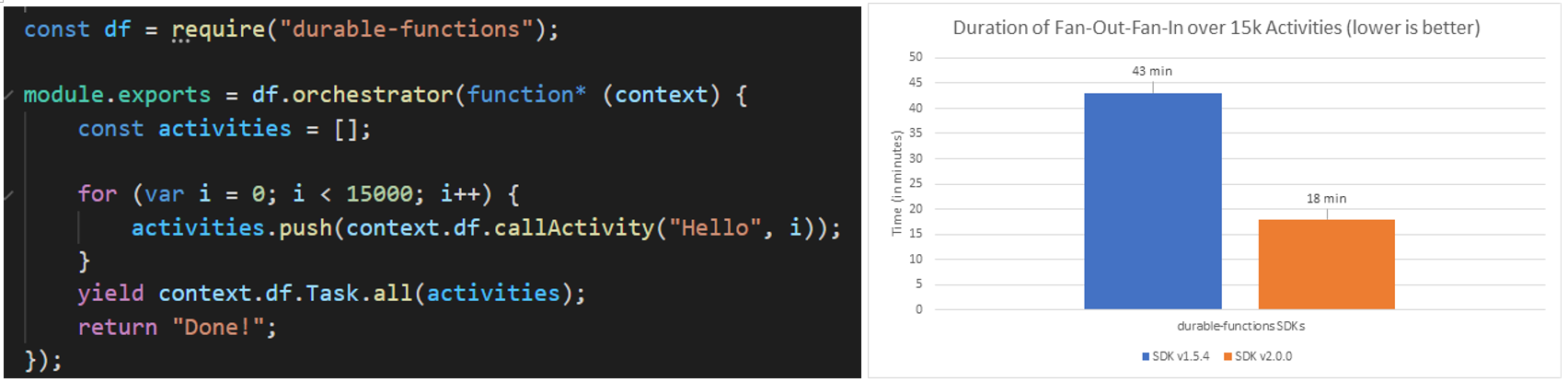

Durable Functions for Java is now generally available. Durable Functions makes it easy to orchestrate stateful workflows as code in a serverless environment. Common stateful application patterns that Durable Functions facilitates include "function chaining", "fan-out/fan-in", "async HTTP APIs", "monitor", and "human interaction". More details about Durable Functions concepts and patterns can be found in our documentation.
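The fan-out/fan-in pattern is easiest to see in plain code. The sketch below uses Python's asyncio rather than the Durable Functions Java API, so it shows only the control flow: start several activity calls concurrently, then wait for all of them and aggregate. `process_item` is a hypothetical activity. Unlike Durable Functions, this sketch does not persist orchestration progress, so it cannot resume after a restart.

```python
import asyncio


async def process_item(item: int) -> int:
    # Hypothetical activity: a real one would do I/O or heavier work.
    await asyncio.sleep(0)
    return item * item


async def orchestrate(items: list[int]) -> int:
    # Fan out: schedule one activity per work item so they run concurrently.
    tasks = [asyncio.create_task(process_item(i)) for i in items]
    # Fan in: wait for every activity to finish, then aggregate the results.
    results = await asyncio.gather(*tasks)
    return sum(results)


print(asyncio.run(orchestrate([1, 2, 3])))  # 14
```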

Source: Generally Available: Durable Functions support for Java

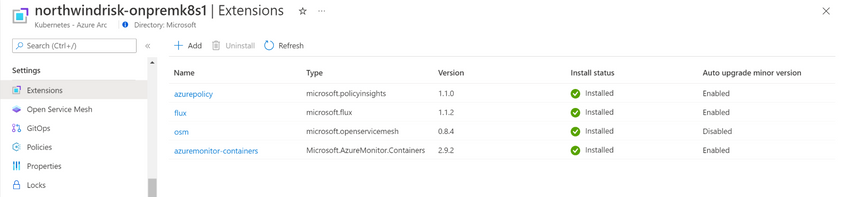

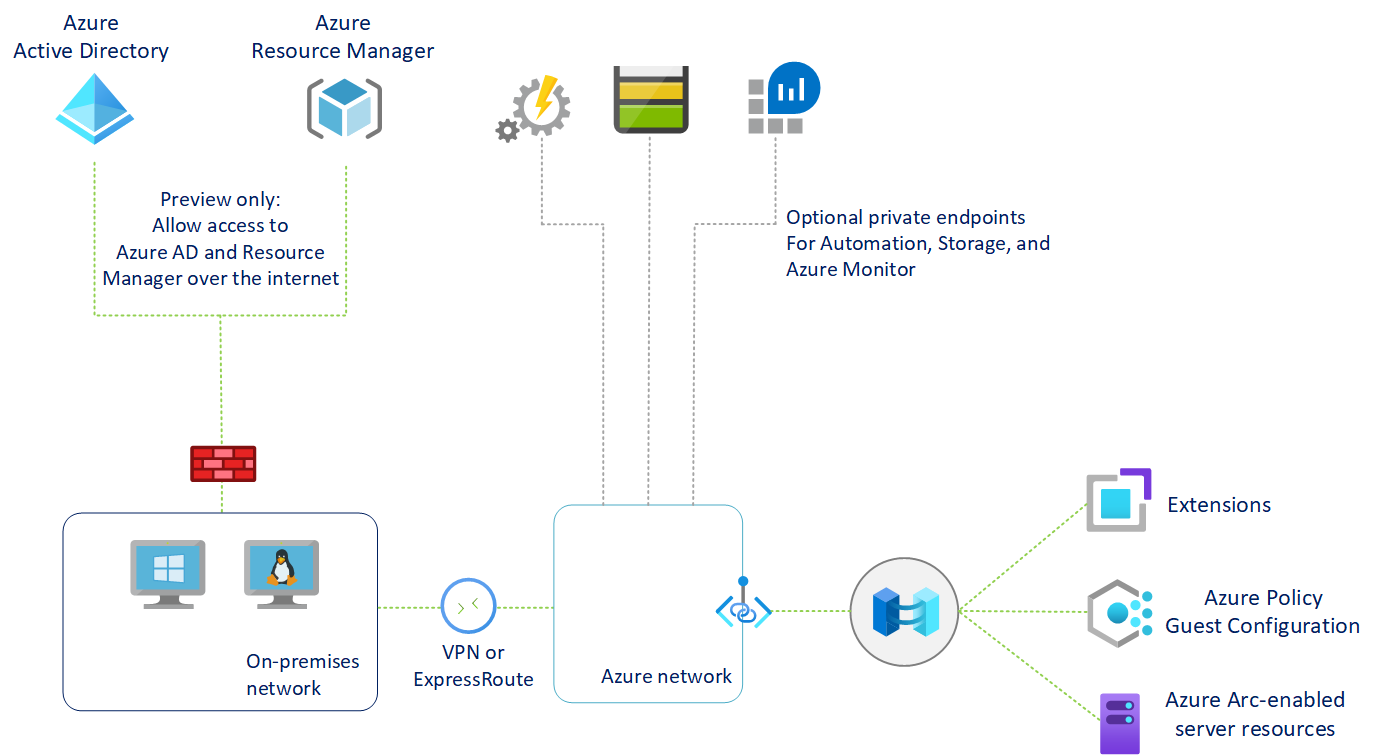

Public preview: Azure Arc enabled Azure Container Apps

Azure Container Apps can now run on an Azure Arc-enabled Kubernetes cluster, which can be on-premises or hosted in a third-party cloud. This approach allows developers to take advantage of the features and developer productivity of Azure Container Apps, while IT administrators maintain corporate compliance by hosting the application in hybrid environments.

Azure Container Apps allows developers to rapidly build and deploy microservices and containerized applications. Common uses of Azure Container Apps include, but are not limited to: API endpoints, background or event-driven processing, and running microservices. Applications can dynamically scale within the limits of the Arc-enabled Kubernetes cluster.

By deploying an Arc extension on the Azure Arc-enabled Kubernetes cluster, IT administrators gain control of the underlying hardware and environment, while still enabling the high productivity of Azure PaaS services from within a hybrid environment.

Source: Public preview: Azure Arc enabled Azure Container Apps

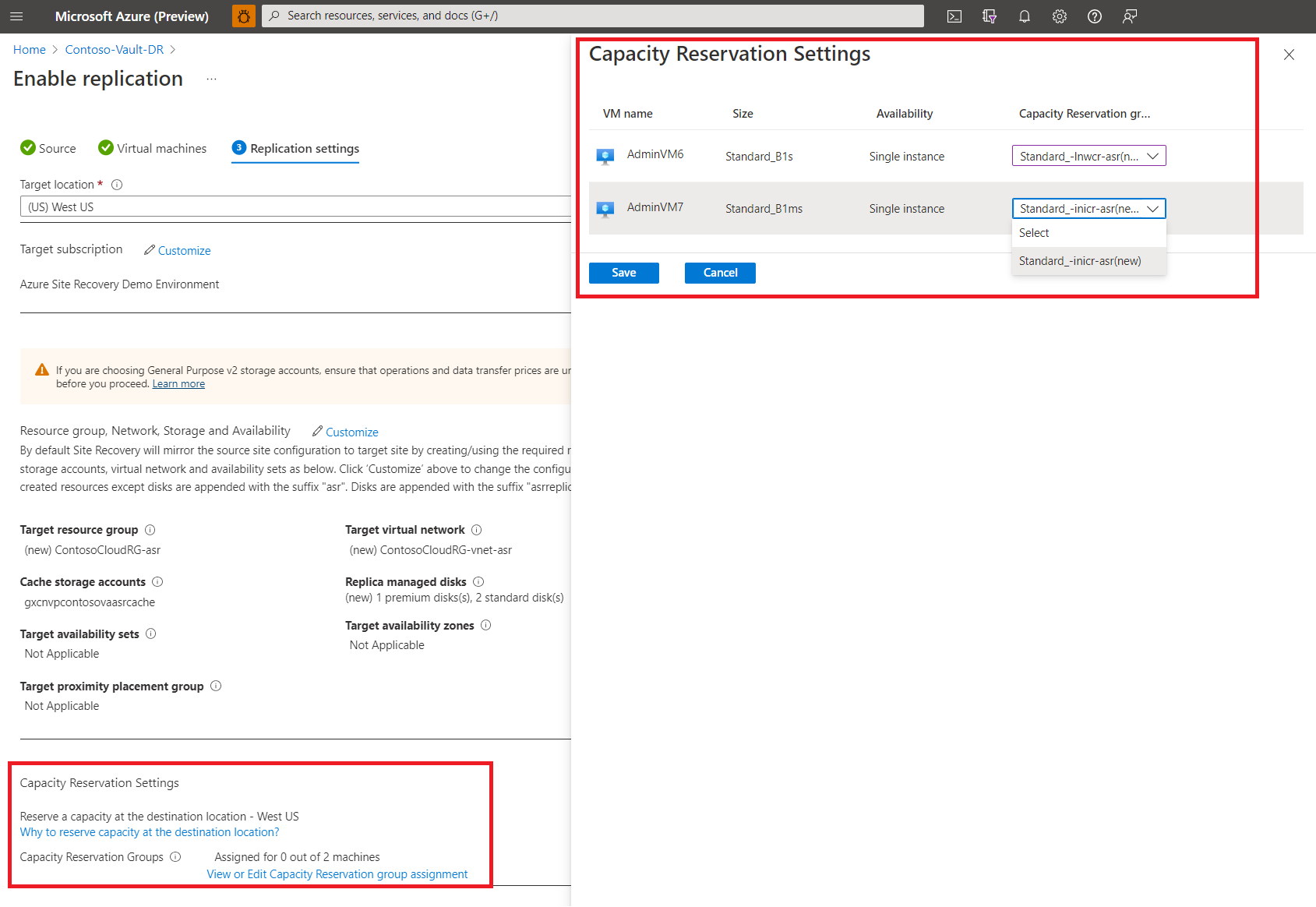

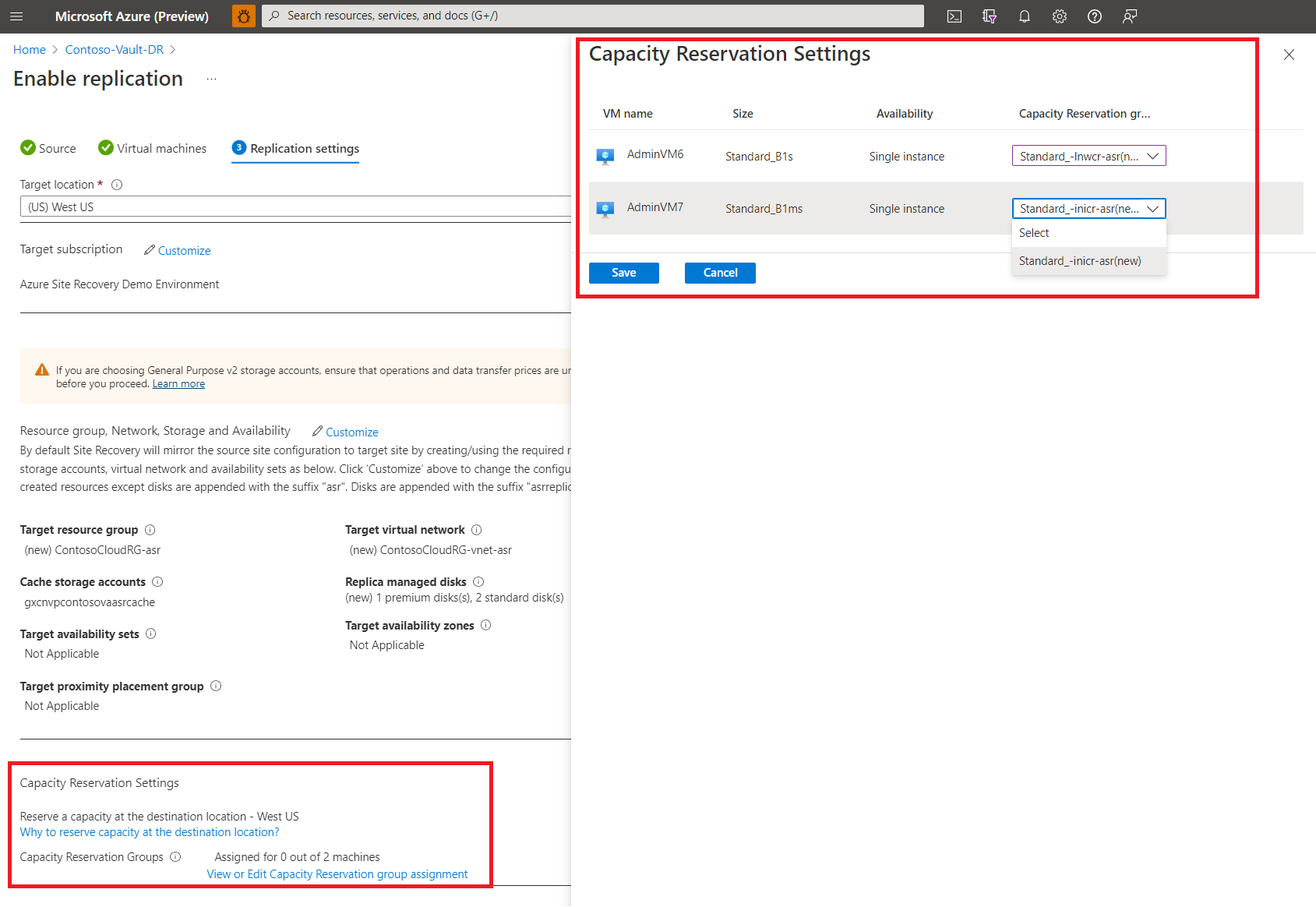

Azure Site Recovery (ASR) has increased its data churn limit by approximately 2.5x, to 50 MB/s per disk. With this, you can configure disaster recovery (DR) for Azure VMs with data churn of up to 100 MB/s per VM, which lets you enable DR for more I/O-intensive workloads.

Opting in to the higher churn limit is simple: select the High Churn (Public Preview) option when enabling replication. Normal Churn is selected by default. To use the higher churn limit for Azure VMs already protected by ASR, disable replication and then re-enable it with High Churn (Public Preview) selected. Note that this feature is available only for Azure-to-Azure scenarios.
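A quick way to sanity-check whether a VM fits the new limits is to compare each disk's peak churn against the 50 MB/s per-disk limit and the total against the 100 MB/s per-VM figure. This Python helper is a hypothetical planning aid, not part of ASR, and it ignores any other replication constraints.

```python
PER_DISK_LIMIT_MBPS = 50  # High Churn per-disk limit from the announcement
PER_VM_LIMIT_MBPS = 100   # total churn per VM supported with High Churn


def fits_high_churn(disk_churn_mbps: list[float]) -> bool:
    """True if every disk and the VM as a whole stay within the limits."""
    per_disk_ok = all(c <= PER_DISK_LIMIT_MBPS for c in disk_churn_mbps)
    per_vm_ok = sum(disk_churn_mbps) <= PER_VM_LIMIT_MBPS
    return per_disk_ok and per_vm_ok


print(fits_high_churn([45, 40]))      # True: each disk <= 50, total 85
print(fits_high_churn([60]))          # False: one disk exceeds 50
print(fits_high_churn([40, 40, 30]))  # False: total 110 exceeds 100
```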

Source: Public Preview: Azure Site Recovery Higher Churn Support

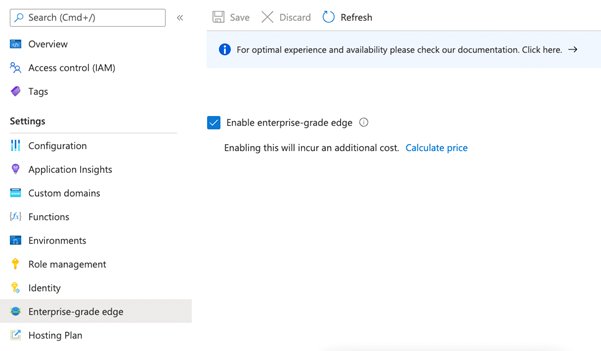

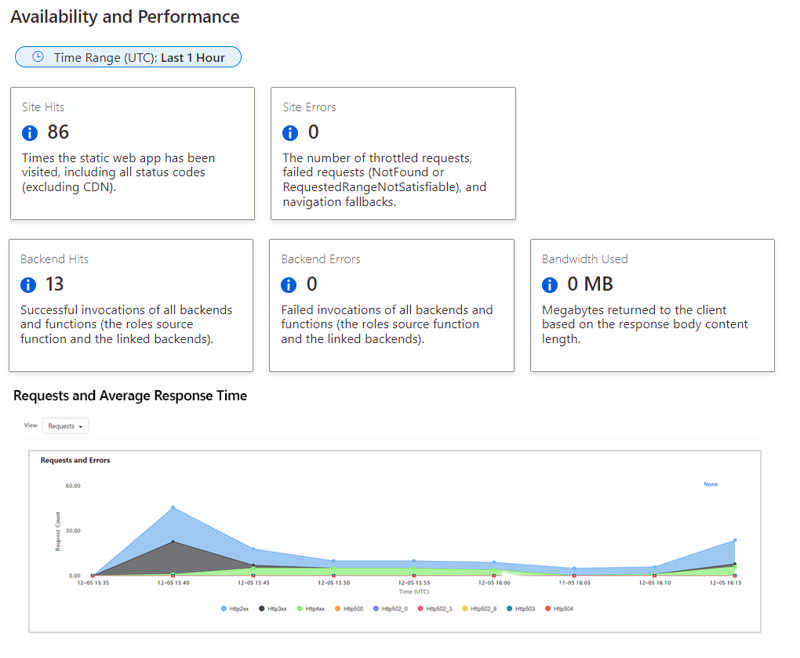

Generally available: Static Web Apps Diagnostics

Azure Static Web Apps Diagnostics is an intelligent tool that helps you troubleshoot your static web app directly from the Azure portal. When issues arise, it helps you diagnose what went wrong and shows you how to resolve the issues. This guidance helps you improve the reliability of your site and track its performance.

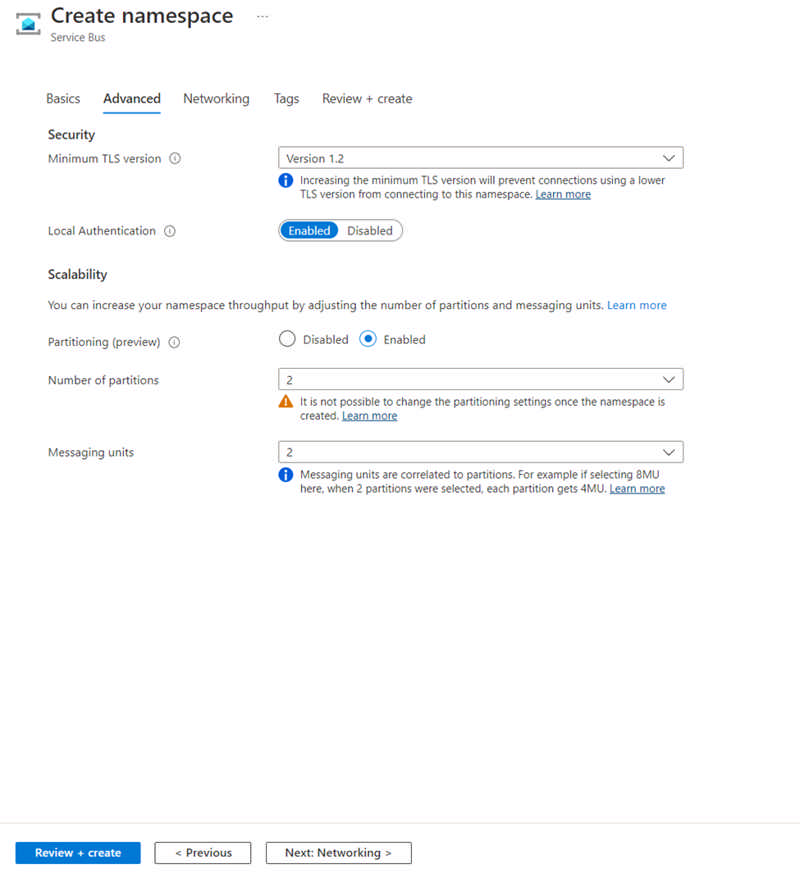

Enable higher throughput levels for Azure Service Bus premium via two new features in public preview today.

First, we are releasing scaling partitions, allowing the use of partitioning for the premium messaging tier. Service Bus partitions enable messaging entities to be partitioned across multiple message brokers. This means that the overall throughput of a partitioned entity is no longer limited by the performance of a single message broker. Additionally, a temporary outage of a message broker, for example during an upgrade, does not render a partitioned queue or topic unavailable, as messages will be retried on a different partition.

Second, we are making a change to our infrastructure, which will result in more consistent low latency. This is accomplished by switching our storage to a different implementation called local store. During public preview we will create partitioned namespaces using this new feature, but in the future all new namespaces will be created on local store.
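The availability benefit of partitioning can be modeled in a few lines. In this toy Python model (not the Service Bus client API), a queue has several partitions, each standing in for a message broker. A message without a partition key goes to the next available partition, and a partition whose broker is temporarily down is skipped. The class and its fields are hypothetical.

```python
import itertools


class PartitionedQueue:
    """Toy model: messages are spread across partitions, one per broker."""

    def __init__(self, partition_count: int):
        self.partitions = [[] for _ in range(partition_count)]
        self.available = [True] * partition_count  # False = broker is down
        self._next = itertools.cycle(range(partition_count))

    def send(self, message) -> int:
        # Try each partition at most once; skip any whose broker is down.
        for _ in range(len(self.partitions)):
            p = next(self._next)
            if self.available[p]:
                self.partitions[p].append(message)
                return p
        raise RuntimeError("no partition available")


q = PartitionedQueue(3)
q.available[1] = False  # simulate a broker upgrade on partition 1
print([q.send(f"m{i}") for i in range(4)])  # [0, 2, 0, 2]
```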

Source: Public preview: Performance improving features for Azure Service Bus premium

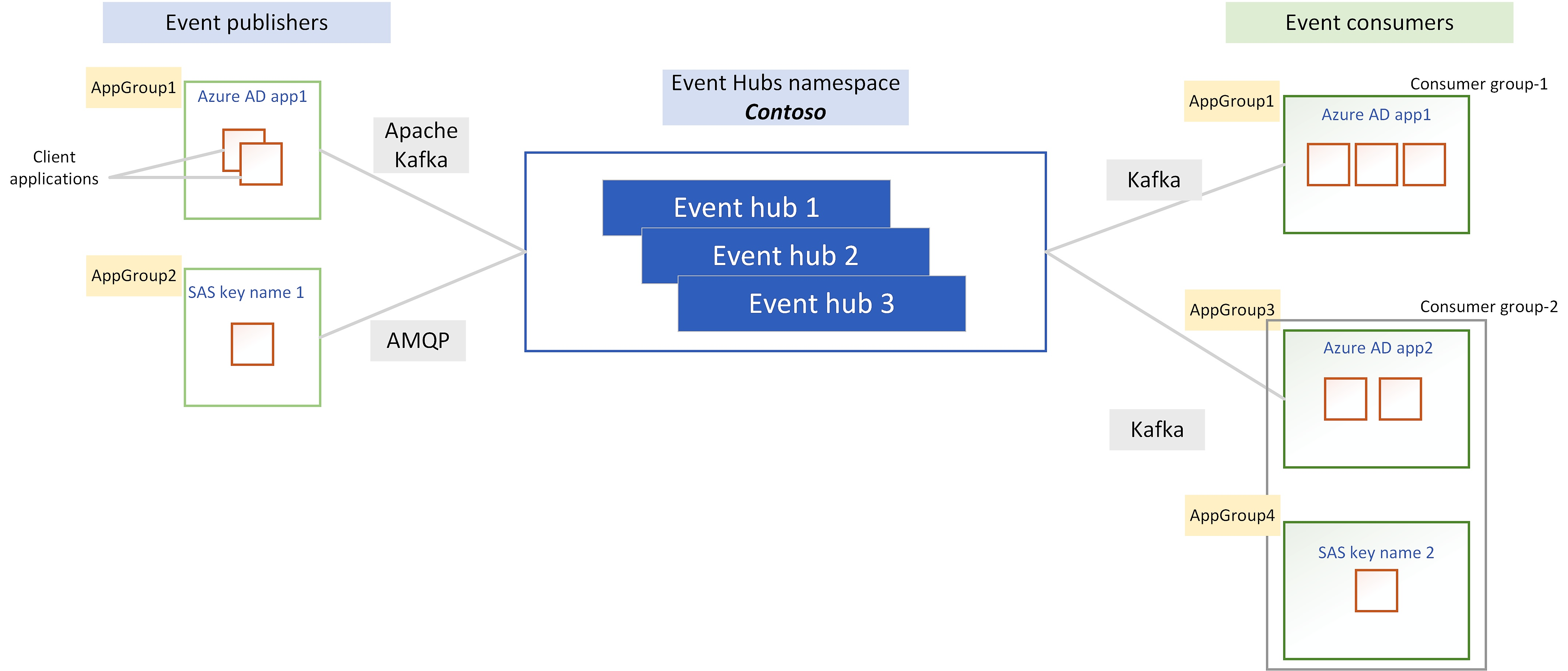

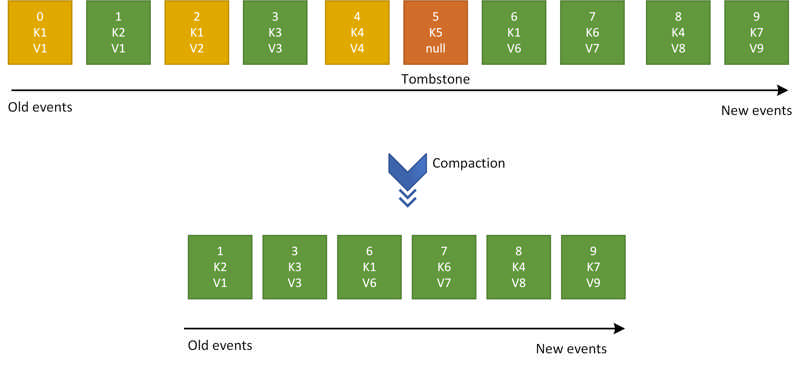

Log compaction is a way of retaining events in Event Hubs. Rather than using time-based retention, you can use a key-based retention mechanism in which Event Hubs retains the last known value for each event key of an event hub or Kafka topic. The Event Hubs service runs a compaction job internally and purges old events in a compacted event hub. The partition key that you set with each event is used as the compaction key, and you can also mark events for deletion from the event log by publishing an event with that key and a null payload.
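The effect of compaction can be sketched in Python: keep only the latest event for each key, and drop keys whose latest event has a null payload (a tombstone). This is a simplified model of the outcome only, not of how Event Hubs runs its internal compaction job in the background.

```python
def compact(events):
    """events: (key, payload) pairs in log order; a None payload marks a delete."""
    last_seen = {}
    for offset, (key, payload) in enumerate(events):
        last_seen[key] = (offset, payload)  # a later event overrides earlier ones
    # Drop tombstoned keys and keep the survivors in their original log order.
    survivors = sorted(
        (offset, key, payload)
        for key, (offset, payload) in last_seen.items()
        if payload is not None
    )
    return [(key, payload) for _, key, payload in survivors]


log = [("a", "v1"), ("b", "v1"), ("a", "v2"), ("b", None), ("c", "v1")]
print(compact(log))  # [('a', 'v2'), ('c', 'v1')]
```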

To learn more about log compaction, see the Log Compaction documentation.

Source: Public preview: Log compaction support in Azure Event Hubs

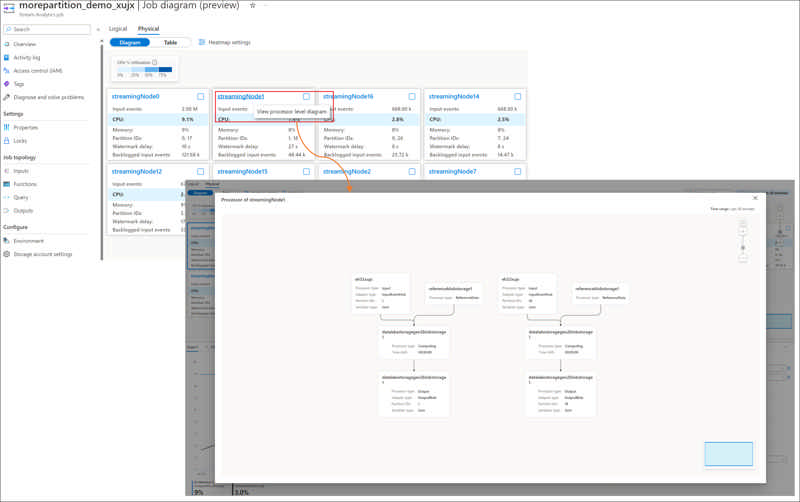

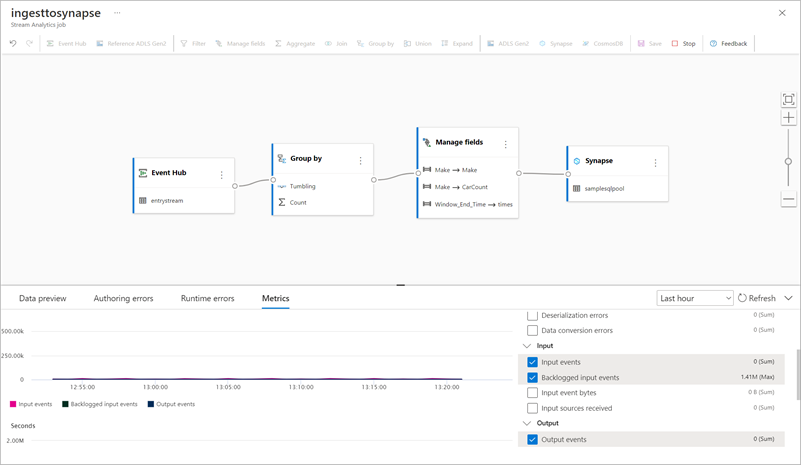

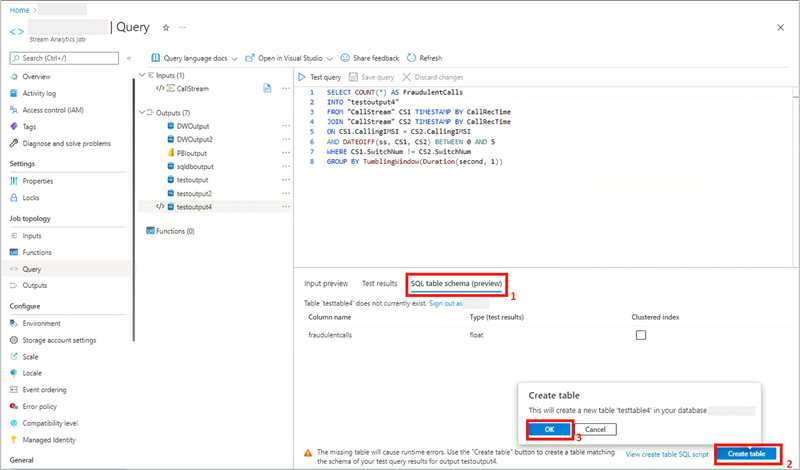

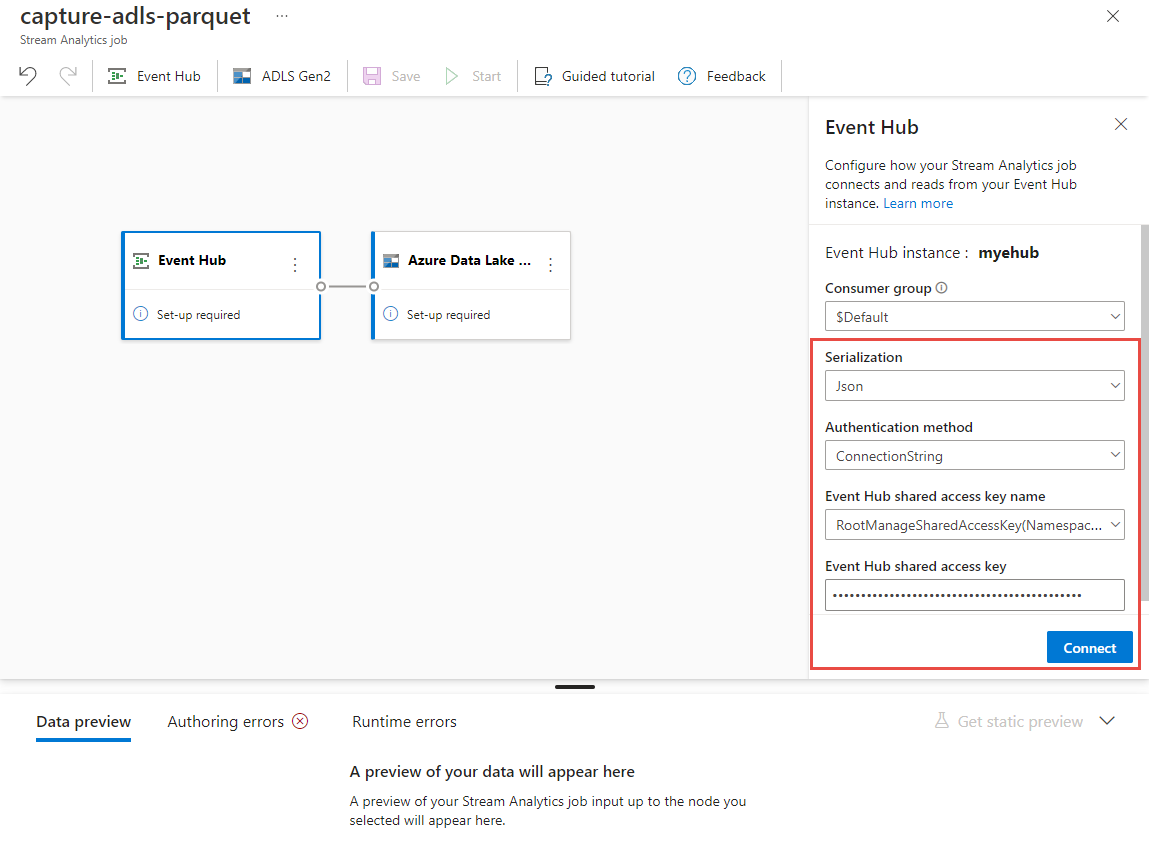

Inside each streaming node of an Azure Stream Analytics job, there are Stream Analytics processors available for processing the stream data. Each processor represents one or more steps in your query. The processor diagram in the physical job diagram visualizes the processor topology inside a specific streaming node of your job. It helps you identify whether there is a bottleneck in the streaming node of your job and, if so, where it is.

Source: Public preview: Processor diagram in Physical Job Diagram for Stream Analytics job troubleshooting

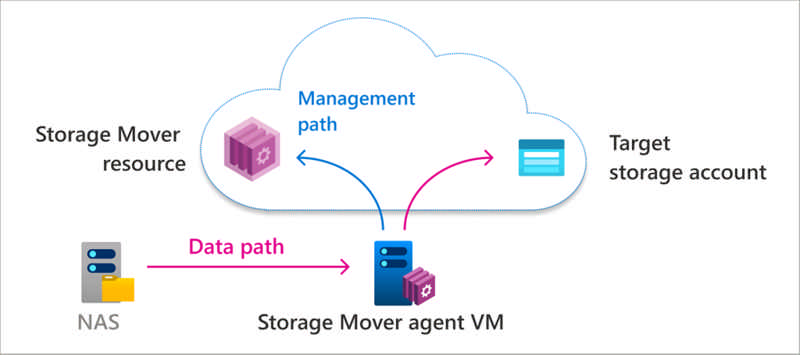

File storage is a critical part of any organization’s on-premises IT infrastructure. As organizations migrate more of their applications and user shares to the cloud, they often face challenges in migrating the associated file data. Having the right tools and services is essential to successful migrations.

Across workloads, there can be a wide range of file sizes, counts, types, and access patterns. In addition to supporting a variety of file data, migration services must minimize downtime, especially on mission-critical file shares.

Source: Azure Storage Mover–A managed migration service for Azure Storage

We are pleased to announce the general availability of RDP Shortpath for public networks. RDP Shortpath improves the transport reliability of Azure Virtual Desktop connections by establishing a direct UDP data flow between the Remote Desktop client and session hosts. This feature is enabled by default for all customers. We started deploying RDP Shortpath in September and now the feature is 100% rolled out.

What is RDP Shortpath for public networks?

Read more at Azure Daily 2022

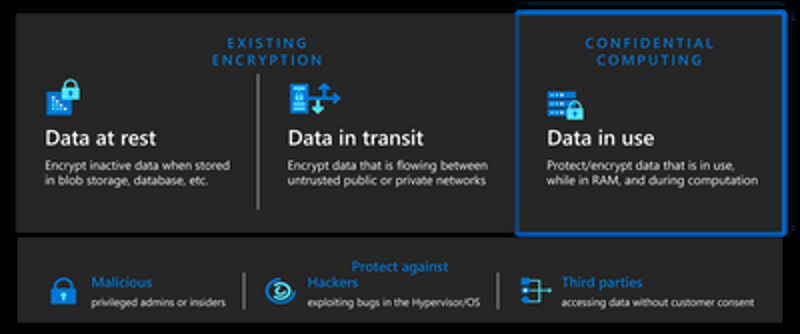

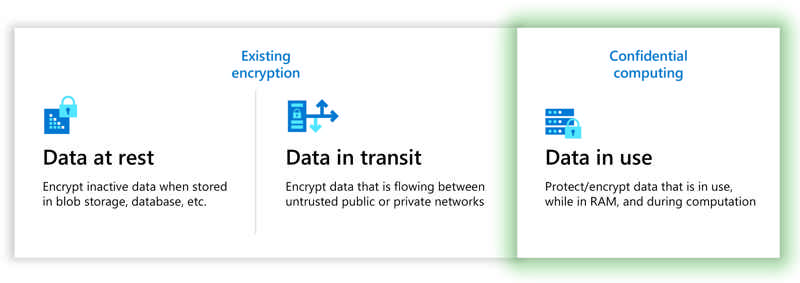

We’re announcing that Azure Virtual Desktop has public preview support for Azure Confidential Virtual Machines. Confidential Virtual Machines increase data privacy and security by protecting data in use. The Azure DCasv5 and ECasv5 confidential VM series provide a hardware-based Trusted Execution Environment (TEE) that features AMD SEV-SNP security capabilities. These capabilities harden guest protections to deny the hypervisor and other host management code access to VM memory and state. The TEE is designed to protect against operator access and encrypts data in use.

With this preview, support for Windows 11 22H2 has been added to Confidential Virtual Machines. Confidential OS Disk encryption and Integrity monitoring will be added to the preview at a later date. Confidential VM support for Windows 10 is planned.

Read more at Azure Daily 2022

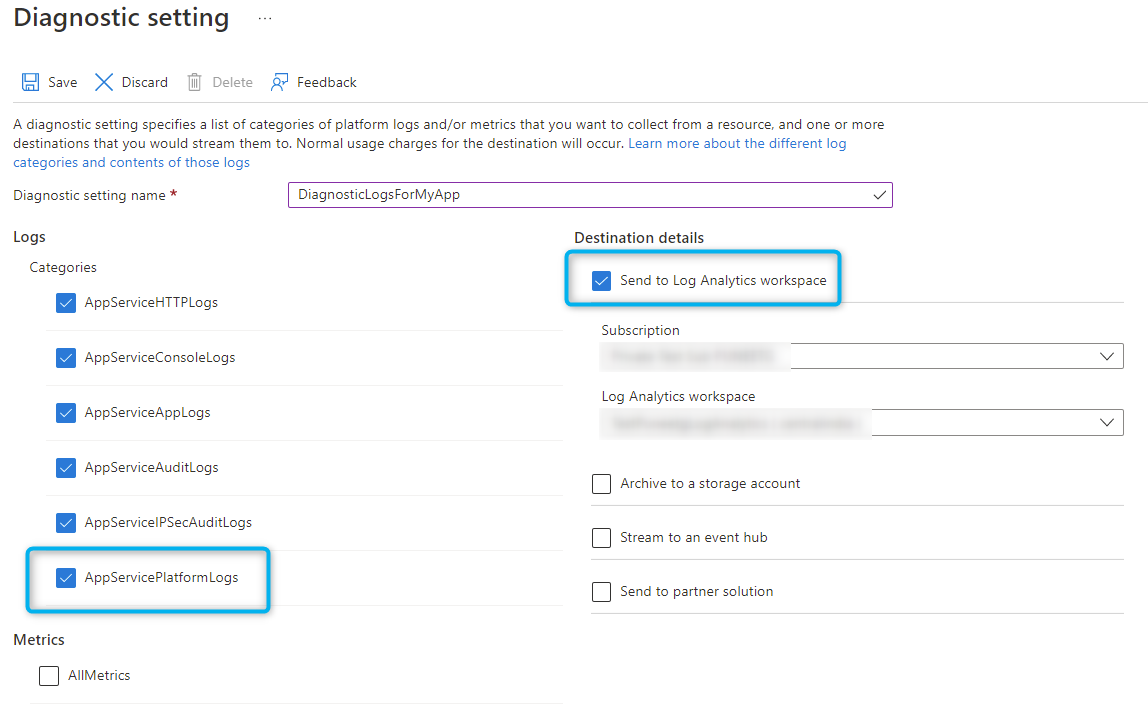

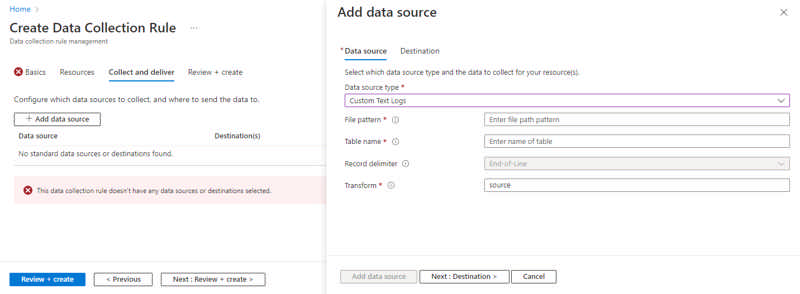

The Azure Monitor agent can now collect text and IIS log files for Log Analytics.

Today Microsoft is happy to introduce the long-awaited Custom Log and IIS Log collection capability. This new capability enables customers to collect the text-based logs generated by their services or applications. Likewise, Internet Information Services (IIS) logs for a customer’s service can be collected and sent to a Log Analytics workspace table for analysis. These new collection types will enable customers to migrate to Azure Monitor from competing data collection services.

Source: General availability: Azure Monitor agent custom and IIS logs

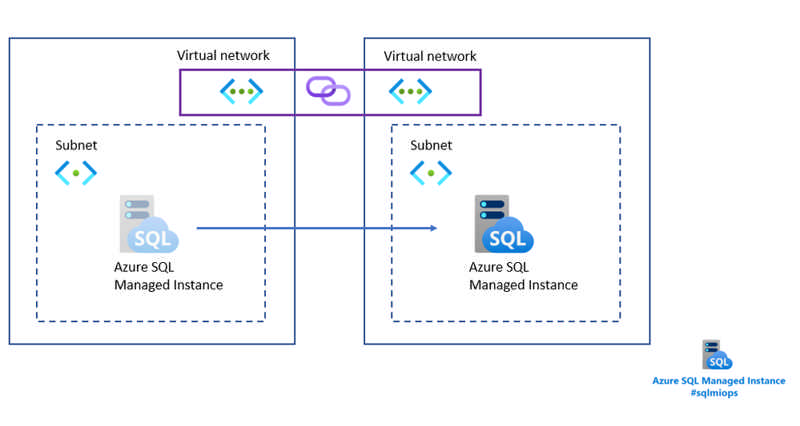

In this blog post, we present a feature for moving Azure SQL Managed Instance from one subnet to another subnet located in a different virtual network. This capability extends the existing capability for moving the instance to another subnet.

Read more at Azure Daily 2022

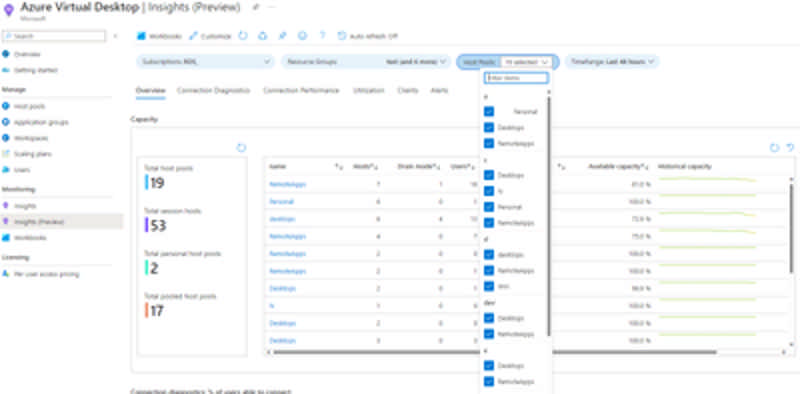

Microsoft is excited to announce the public preview of one of our most requested native monitoring features – Azure Virtual Desktop Insights at Scale. This update provides the ability to review performance and diagnostic information across multiple host pools in one view.

Previously, Azure Virtual Desktop Insights only supported the ability to review information related to a single host pool at a time. In many cases this limited visibility into issues that may have an impact across multiple host pools.

Read more at Azure Daily 2022

Azure App Service is regularly updated with new runtime versions so that web app developers can take advantage of the latest runtime features and security fixes. We are now adding support for Python 3.10, PHP 8.1, and Node 18, giving developers more version choices for some of the latest and fastest-growing web app development languages.

Source: Generally Available: New versions supported for languages and frameworks in Azure App Service

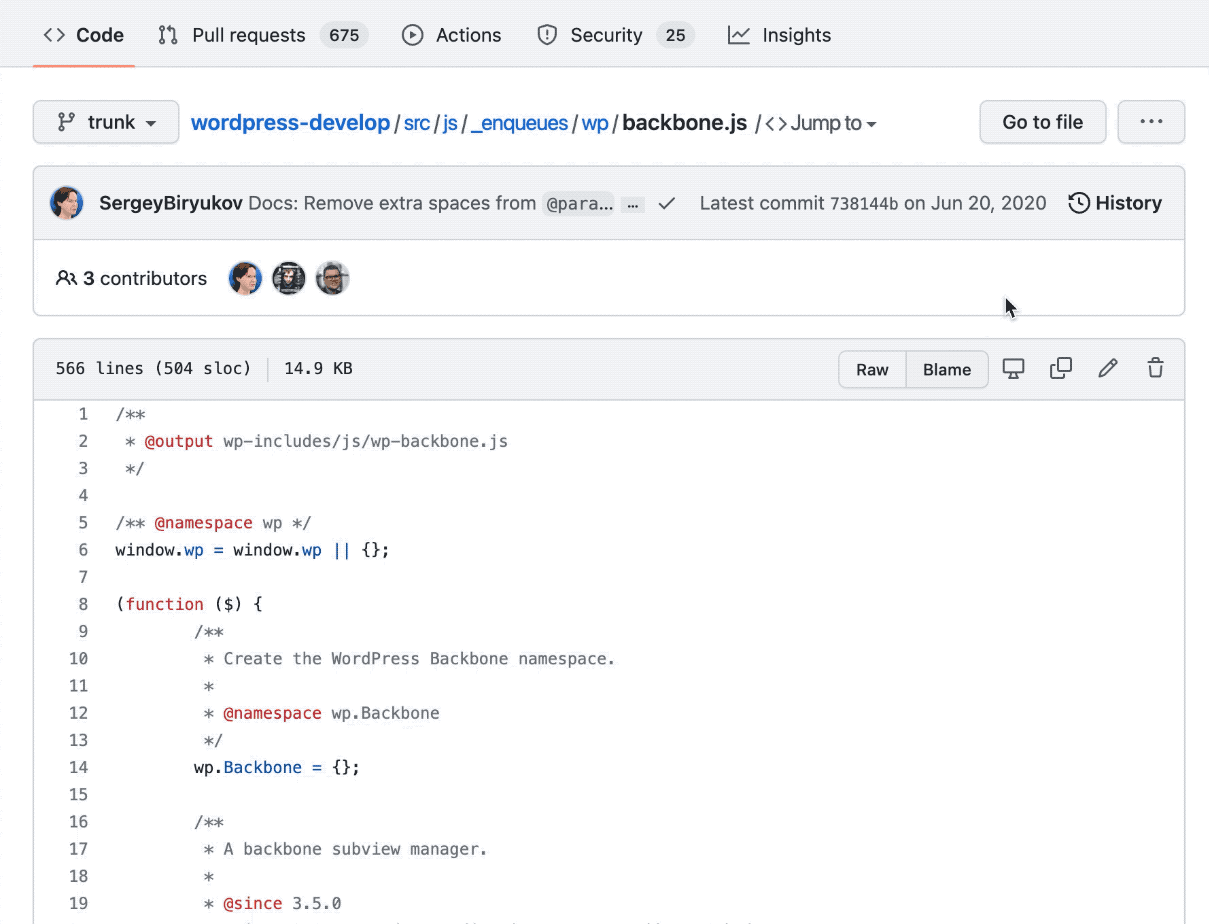

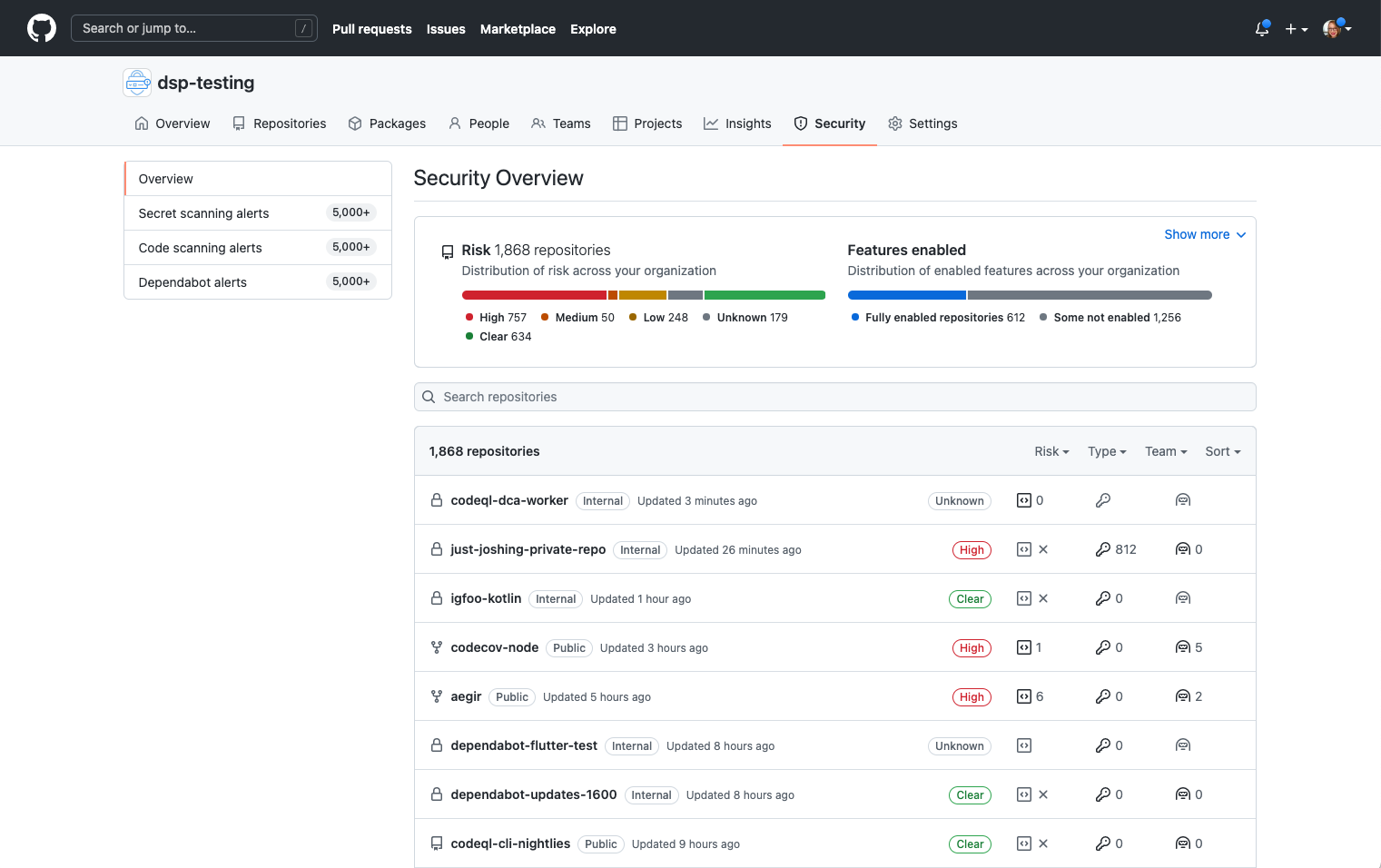

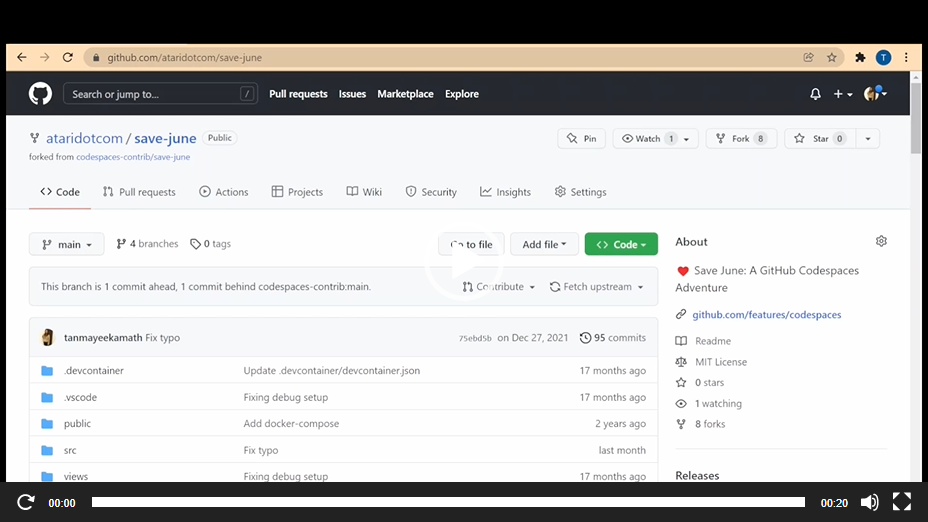

Starting today, GitHub code scanning includes beta support for analyzing code written in Kotlin, powered by the CodeQL engine.

Kotlin is a key programming language used in the creation of Android mobile applications, and is an increasingly popular choice for new projects, augmenting or even replacing Java. To help organisations and open source developers find potential vulnerabilities in their code, we’ve added Kotlin support (beta) to the CodeQL engine that powers GitHub code scanning. CodeQL now natively supports Kotlin, as well as mixed Java and Kotlin projects. Set up code scanning on your repositories today to receive actionable security alerts right on your pull requests. To enable Kotlin analysis on a repository, configure the code scanning workflow languages to include java. If you have any feedback or questions, please use this discussion thread or open an issue if you encounter any problems.

Kotlin support is an extension of our existing Java support, and benefits from all of our existing CodeQL queries for Java, for both mobile and server-side applications. We’ve also improved and added a range of mobile-specific queries, covering issues such as handling of Intents, Webview validation problems, fragment injection and more.

CodeQL support for Kotlin has already been used to identify novel real-world vulnerabilities in popular apps, from task management to productivity platforms. You can watch the GitHub Universe talk on how CodeQL was used to identify vulnerabilities like these.

Kotlin beta support is available by default in GitHub.com code scanning, the CodeQL CLI, and the CodeQL extension for VS Code. GitHub Enterprise Server (GHES) version 3.8 will include this beta release.

Source: CodeQL code scanning launches Kotlin analysis support (beta)

Day 0 support for .NET 7.0 on App Service means that developers can immediately try, test, and deploy apps targeting this version of .NET, accelerating time-to-market on the platform they already know and use. It is expected to be available in Q2 FY23.

To try out .NET 7.0 on App Service, see the quickstart “Deploy an ASP.NET web app”.

Source: Generally available Day 0 support for .NET 7.0 on App Service

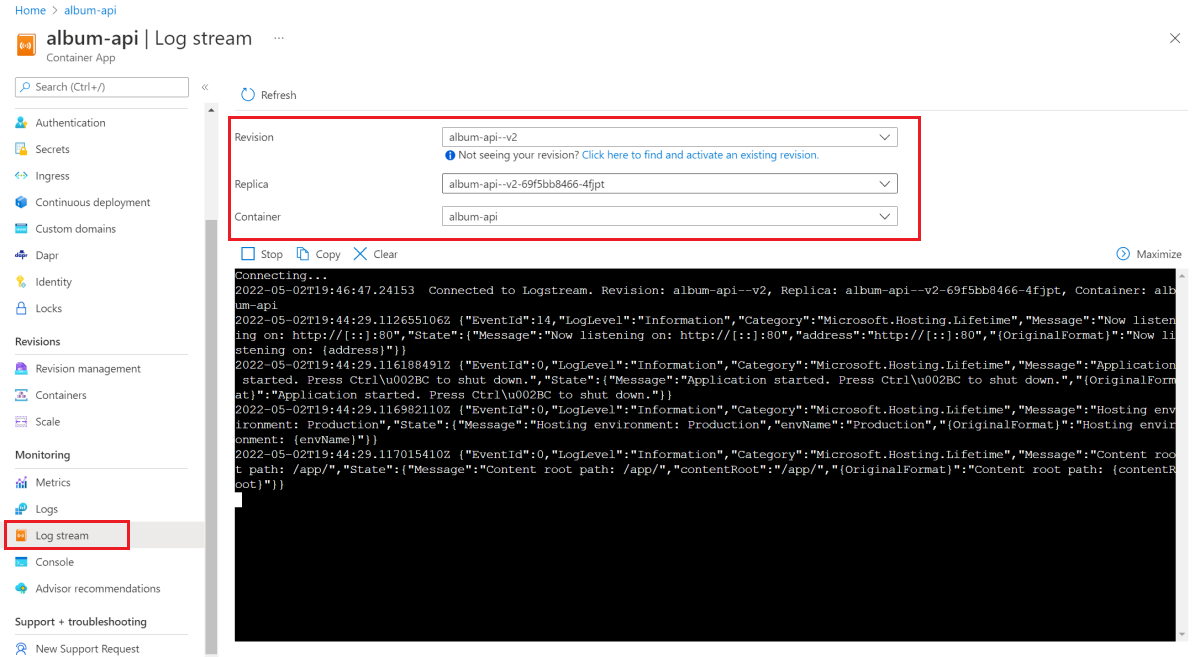

Public preview: Build and deploy to Azure Container Apps without a Dockerfile from the Azure CLI

Azure Container Apps is a serverless containers platform for microservices. It has a rich set of commands in the Azure CLI for managing and deploying container apps.

The “az containerapp up” command can build and deploy local source code to Azure Container Apps in a single command. Previously, “az containerapp up” required a Dockerfile to build a container image. “az containerapp up” now supports building container images from source code without a Dockerfile.

Popular languages and runtimes, including .NET, Python, and Node.js are supported.

This feature is currently in preview.

Source: Public preview: Build and deploy to Azure Container Apps without a Dockerfile from the Azure CLI

Azure offers a unique capability of mounting Blob Storage (object storage) as a file system in a Kubernetes pod or application, using either BlobFuse or NFS 3.0. This allows you to use blob storage with a number of stateful Kubernetes applications, including HPC, analytics, image processing, and audio or video streaming. In addition, if your application ingests data into Data Lake Storage on Azure Blob Storage, you can now mount and use it directly with AKS. Previously, you had to manually install and manage the lifecycle of the open-source Azure Blob CSI driver, including deployment, versioning, and upgrades.

You can now use the Azure Blob CSI driver as a managed add-on in AKS, with built-in storage classes for NFS and BlobFuse, reducing operational overhead and maximizing time to value.

Source: Generally available: Azure Blob CSI driver support in AKS

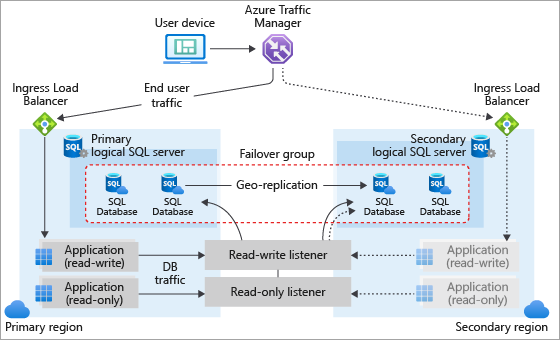

Azure Cosmos DB for PostgreSQL now supports cross-region asynchronous replication of data from one cluster to another cluster. This feature allows read-heavy workloads to scale out and load balance across independently configured read-only replicas which can also be promoted to independent read-write clusters. These features can provide you with increased read performance and more precise resource utilization for better cost efficiency and higher availability through support for cross-region disaster recovery.
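A common way to use read replicas for read-heavy workloads is to send writes to the primary cluster and spread reads across replicas. This minimal Python sketch does that with round-robin; the host names are placeholders and the SELECT-prefix check is a deliberately naive classifier. Because replication is asynchronous, a replica can lag behind the primary, so reads that must see a just-committed write should still go to the primary.

```python
import itertools


class ReadWriteRouter:
    """Route writes to the primary and round-robin reads over replicas."""

    def __init__(self, primary: str, replicas: list[str]):
        self.primary = primary
        self._replicas = itertools.cycle(replicas) if replicas else None

    def route(self, sql: str) -> str:
        # Naive classification: statements starting with SELECT are reads.
        is_read = sql.lstrip().upper().startswith("SELECT")
        if is_read and self._replicas is not None:
            return next(self._replicas)
        return self.primary


router = ReadWriteRouter(
    "primary.example.com", ["replica-1.example.com", "replica-2.example.com"]
)
print(router.route("SELECT * FROM orders"))           # replica-1.example.com
print(router.route("INSERT INTO orders VALUES (1)"))  # primary.example.com
print(router.route("select count(*) FROM orders"))    # replica-2.example.com
```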

Source: General availability: Cross-region read replicas for Azure Cosmos DB for PostgreSQL

For the first time ever, Go language (v1.18 and v1.19) is natively supported on Azure App Service, helping developers innovate faster using the best fully managed app platform for cloud-centric web apps. The language support is available as an experimental language release on Linux App Service in November 2022.

Source: Public preview: Go language support on Azure App Service

Generally available: Azure Blob Storage integration with Azure Cosmos DB for PostgreSQL

Using the pg_azure_storage extension, you can interact with Azure Blob Storage containers directly from Azure Cosmos DB for PostgreSQL. Container contents can be listed and fetched using the COPY command and a flexible API. Save time implementing custom data upload pipelines without requiring additional infrastructure, and take advantage of efficient networking between Azure services to make complex data pipelines easier to automate. Currently supported formats include .tsv, .csv, binary, and text, with transparent decompression of gzip-compressed files.

Source: Generally available: Azure Blob Storage integration with Azure Cosmos DB for PostgreSQL

Azure Daily 2022 - Nov 23, 2022

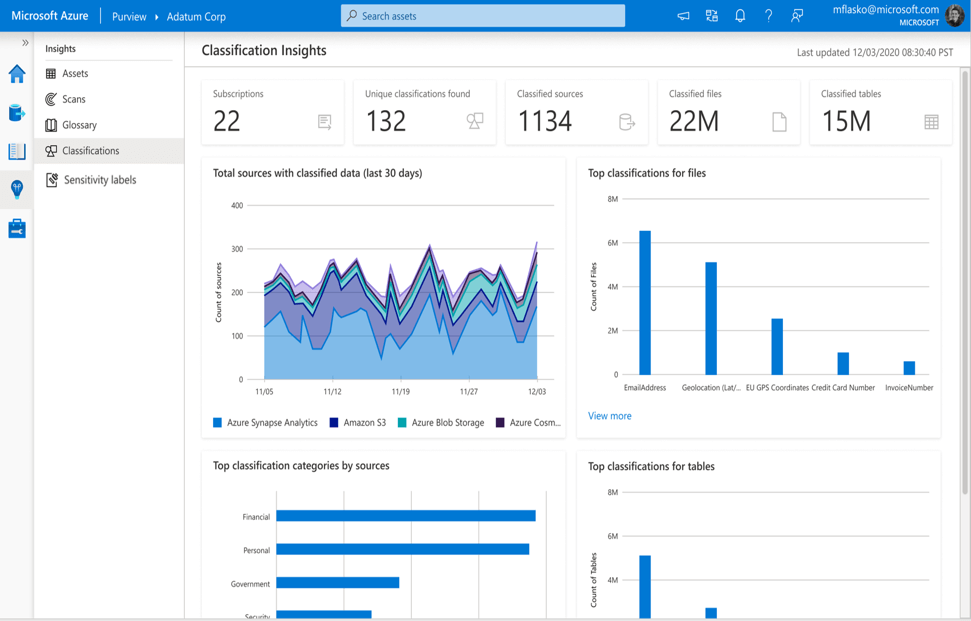

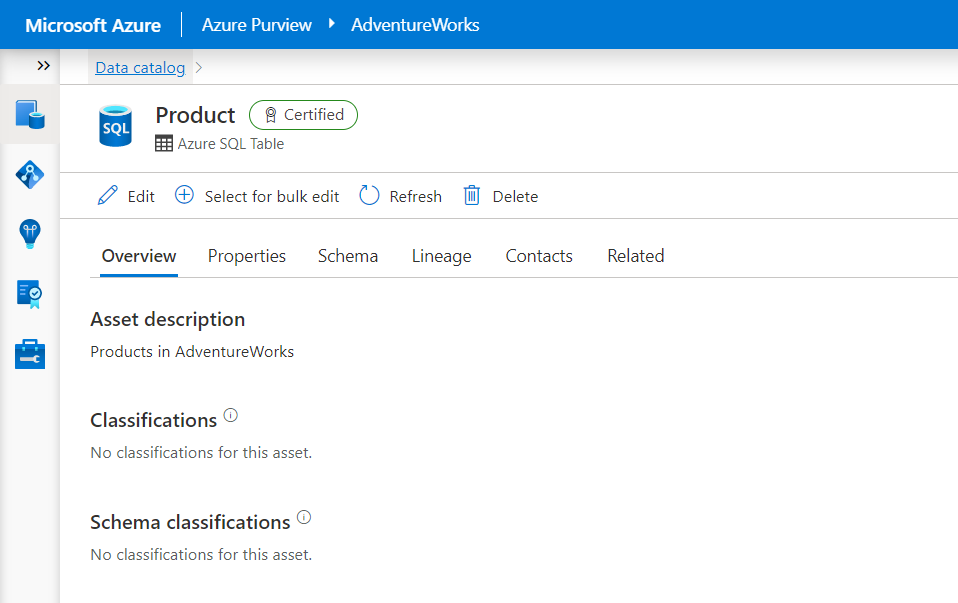

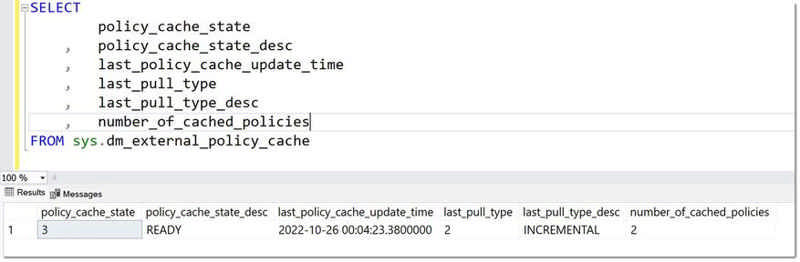

In this article, you will find an overview of all the new metadata that was added to support customers using external data and access policies from Microsoft Purview. You will find this interesting if you are in a technical role with access to a SQL database (for example, as a DBA or developer) or if you need to create reports on who has access to your systems.

Read more at Azure Daily 2022

Today, GitHub is introducing calendar-based versioning for the REST API to give API integrators a smooth migration path and plenty of time to update their integrations when we need to make occasional breaking changes to the API.

You can learn more in today’s blog post and on the new “API Versions” page in our docs.

If you’re using the REST API, you don’t need to take any action right now. We’ll get in touch with plenty of notice before we drop support for any old versions.
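With calendar-based versioning, a client pins the version per request with a header. The Python sketch below builds (but does not send) a request that sets the X-GitHub-Api-Version header. It assumes the version string 2022-11-28, the calendar version GitHub introduced with this change; the token and repository path are placeholders.

```python
import urllib.request


def build_github_request(path: str, token: str, api_version: str = "2022-11-28"):
    """Build a GitHub REST API request pinned to a calendar-based API version."""
    req = urllib.request.Request(f"https://api.github.com{path}")
    req.add_header("Accept", "application/vnd.github+json")
    req.add_header("Authorization", f"Bearer {token}")
    # Pin the request to a specific calendar-based API version.
    req.add_header("X-GitHub-Api-Version", api_version)
    return req


req = build_github_request("/repos/octocat/hello-world", token="<placeholder-token>")
print(req.full_url)  # https://api.github.com/repos/octocat/hello-world
```

Note that urllib.request normalizes stored header names with str.capitalize(), so the header is kept internally as "X-github-api-version"; HTTP header names are case-insensitive, so this does not affect the request.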

A new version of Azure Quota REST API support for service limits (quotas) is now available in Public preview. Use this new feature to programmatically manage the service limits (quotas) of Azure Virtual Machines (cores/vCPU), Networking, Azure HPC Cache and Azure Purview services. Take advantage of this capability to query current usage and quotas for the supported resources and update these limits, when needed.

For the resources currently supported, the Quota API provides an easier way to quickly get current limits, current usage, and request quota increases.

Request quota increases and enumerate current quotas by subscription, provider, and location seamlessly.
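Because quota requests are scoped by subscription, resource provider, and location, the first step is building the request URL for that scope. The Python sketch below does only that. The URL shape and the api-version value are assumptions based on the Microsoft.Quota resource provider's preview and should be checked against the current REST reference. Authentication (an Azure Resource Manager bearer token) is out of scope, and the subscription ID and resource name are placeholders.

```python
from typing import Optional

ARM_ENDPOINT = "https://management.azure.com"
API_VERSION = "2021-03-15-preview"  # assumed preview api-version; verify in the docs


def quota_url(subscription_id: str, provider: str, location: str,
              resource_name: Optional[str] = None) -> str:
    """Build a Microsoft.Quota URL for one subscription/provider/location scope."""
    scope = f"subscriptions/{subscription_id}/providers/{provider}/locations/{location}"
    url = f"{ARM_ENDPOINT}/{scope}/providers/Microsoft.Quota/quotas"
    if resource_name:  # e.g. a VM family; omit it to list all quotas at the scope
        url += f"/{resource_name}"
    return f"{url}?api-version={API_VERSION}"


print(quota_url("00000000-0000-0000-0000-000000000000",
                "Microsoft.Compute", "eastus", "standardDSv3Family"))
```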

Source: Public Preview: Use Azure Quota Rest APIs to manage service limits (quotas)

We are delighted to announce the preview of Cross Subscription Restore for Azure Virtual Machines. Cross Subscription Restore allows you to restore an Azure virtual machine, either by creating a new VM or by restoring disks, to any subscription (honoring RBAC permissions) from a restore point created by Azure Backup. By default, Azure Backup restores to the same subscription where the restore points are available. With this new feature, you gain the flexibility to restore to any subscription under your tenant for which you have restore permissions. You can trigger Cross Subscription Restore for managed Azure virtual machines only from the vault, not from snapshots. Cross Subscription Restore is also supported for restores with Managed System Identities (MSI). It is not supported for encrypted Azure VMs or Trusted Launch VMs.

Learn more about Cross Subscription Restore.

Source: Public preview: Cross Subscription Restore for Azure Virtual Machines

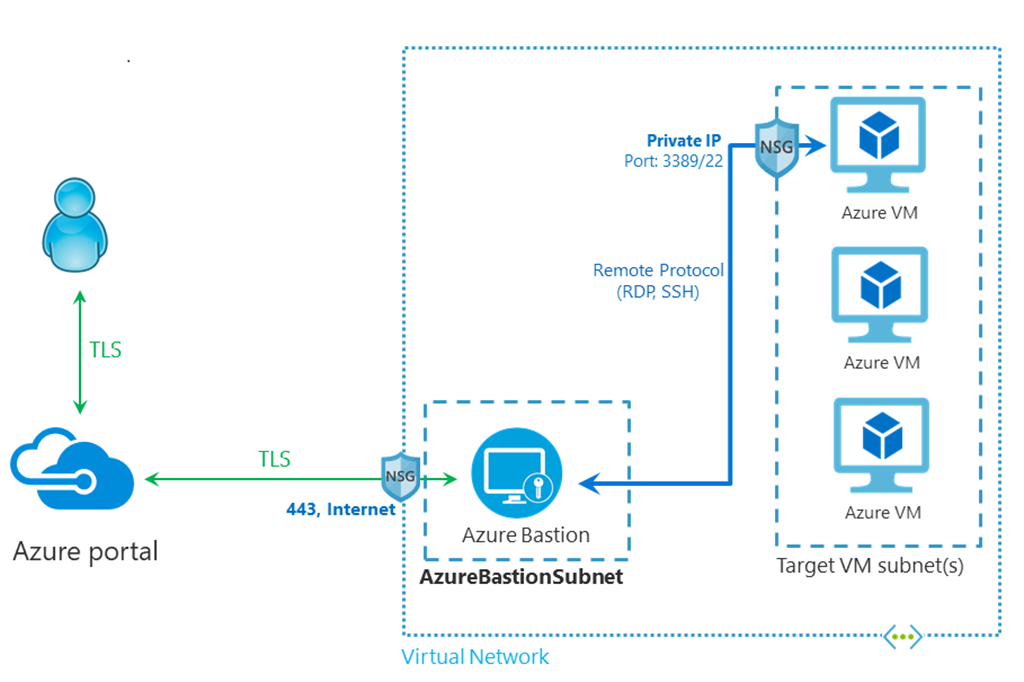

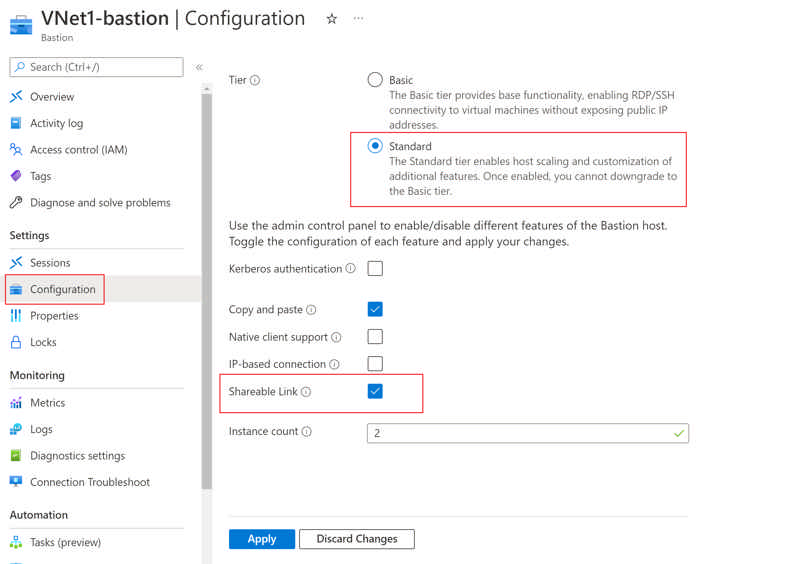

With the new Azure Bastion shareable links feature in public preview and included in Standard SKU, you can now connect to a target resource (virtual machine or virtual machine scale set) using Azure Bastion without accessing the Azure portal.

This feature will solve two key pain points:

- Administrators will no longer have to provide full access to their Azure accounts to one-time VM users, which helps maintain their privacy and security.

- Users without Azure subscriptions can seamlessly connect to VMs without exposing RDP/SSH ports to the public internet.

Source: Public preview: Azure Bastion now support shareable links

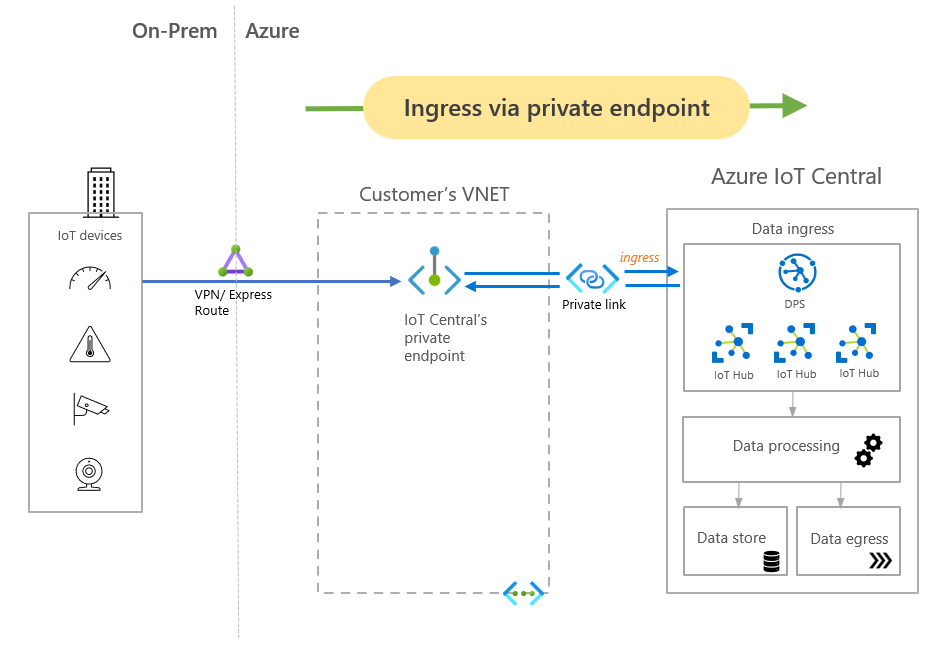

Azure IoT Hub now supports setting up an Azure Cosmos DB account as a custom routing endpoint, so device data can be routed from IoT Hub directly to Azure Cosmos DB. The feature also supports configuring synthetic partition keys for writing data into Azure Cosmos DB, which helps optimize queries over large-scale data.

Many IoT solutions require extensive downstream data analysis and pushing data into hyperscale databases. For example, IoT implementations in manufacturing and intelligent transport systems require hyperscale databases with extremely high throughput to process the continuous stream of data. Traditional SQL-based relational databases cannot scale optimally and become expensive as data volumes grow. Azure Cosmos DB is best suited for such cases, where data needs to be analyzed while it is being written.
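A synthetic partition key typically combines a device identifier with a time bucket, so data from one busy device is spread over many logical partitions while queries for one device and period still target a single partition. The Python sketch below expands a template such as {deviceid}-{YYYY}-{MM}. The placeholder names are an assumption modeled on the IoT Hub endpoint settings, so check the IoT Hub documentation for the exact syntax.

```python
from datetime import datetime, timezone


def synthetic_partition_key(template: str, device_id: str, enqueued: datetime) -> str:
    """Expand a hypothetical key template such as '{deviceid}-{YYYY}-{MM}'."""
    # Date placeholders come first and the device ID last, so a device ID that
    # happens to contain text like '{MM}' is not itself rewritten.
    values = {
        "{YYYY}": f"{enqueued.year:04d}",
        "{MM}": f"{enqueued.month:02d}",
        "{DD}": f"{enqueued.day:02d}",
        "{deviceid}": device_id,
    }
    key = template
    for placeholder, value in values.items():
        key = key.replace(placeholder, value)
    return key


ts = datetime(2022, 11, 23, tzinfo=timezone.utc)
print(synthetic_partition_key("{deviceid}-{YYYY}-{MM}", "sensor-7", ts))  # sensor-7-2022-11
```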

Source: Public preview: Add an Azure Cosmos DB custom endpoint in IoT Hub

Earlier this year, we announced our vision to empower any developer to become a space developer through Azure. With over 90 million developers on GitHub, we have created a powerful ecosystem and we are focused on empowering the next generation of developers for space. Today, we are announcing a crucial step towards democratizing access to space development, with the preview release of Azure Orbital Space SDK (software development kit)—a secure hosting platform and application toolkit designed to enable developers to create, deploy, and operate applications on-orbit.

By bringing modern cloud-based applications to spacecraft, we not only increase the efficiency, value, and speed of insights from space data, but also increase the value of that data by optimizing ground communication.

Source: Any developer can be a space developer with the new Azure Orbital Space SDK

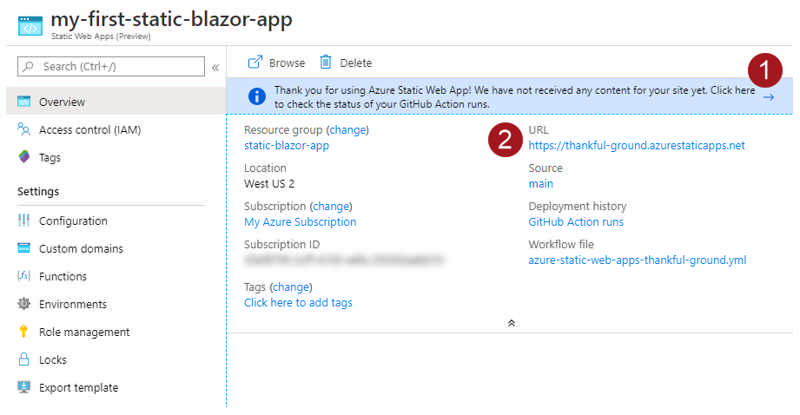

By using .NET 7 for your entire stack, you can leverage the latest language and runtime improvements in .NET, and you can seamlessly share code between your Blazor WebAssembly app, Azure Functions, and other .NET applications.

For your app’s frontend, Static Web Apps can now automatically build and deploy .NET 7.0 Blazor WebAssembly apps. For backend APIs, you can build and deploy .NET 7.0 Azure Functions with your static web apps.

Azure Static Web Apps support for .NET 7.0 follows the .NET 7.0 lifecycle. To learn more, please refer to the .NET support policy.

Source: Generally available: Azure Static Web Apps now fully supports .NET 7

With Static Web Apps, you can now configure Azure Pipelines to deploy your application to preview environments. The Azure DevOps task for Azure Static Web Apps intelligently detects and builds your app’s frontend and API and deploys the entire application to Azure. You can fully automate the testing and delivery of your software in multiple stages all the way to production.

Azure Static Web Apps provides globally distributed content hosting and serverless APIs powered by Azure Functions. It also includes everything you need to run a full-stack web app, including support for custom domains, free SSL certificates, authentication/authorization, and preview environments.

This feature is now generally available.

Source: Generally available: Static Web Apps support for preview environments in Azure DevOps

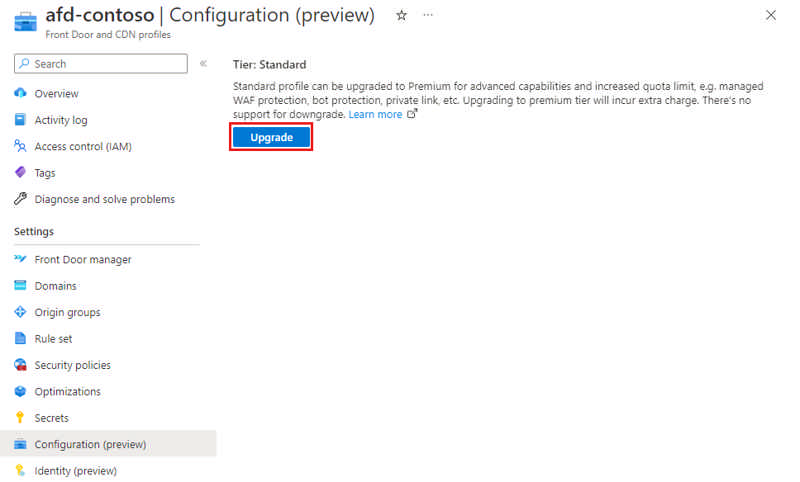

Azure Front Door supports upgrading from the Standard to the Premium tier without downtime. Azure Front Door Premium has higher quota limits and supports advanced security capabilities, such as managed Web Application Firewall rules and private connectivity to your origin using Private Link.

Source: Public preview: Upgrade from Azure Front Door Standard to Premium tier

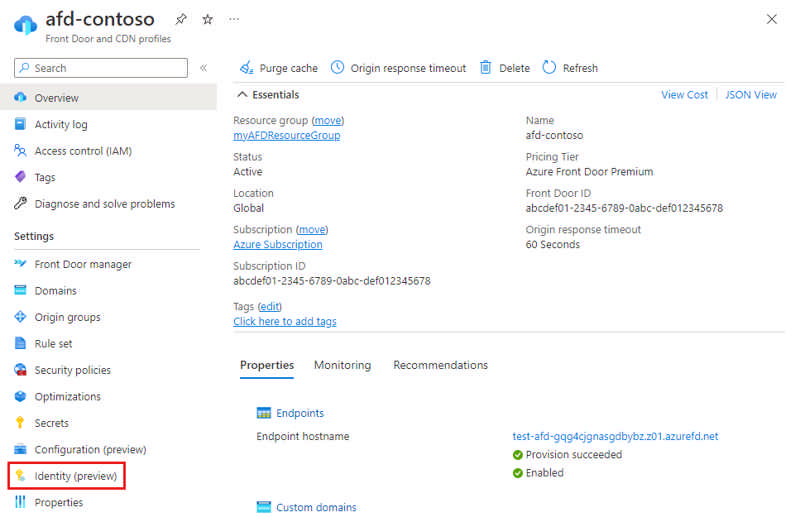

Azure Front Door now supports managed identities generated by Azure Active Directory to allow Front Door to easily and securely access other Azure AD-protected resources such as Azure Key Vault. This feature is in addition to the AAD Application access to Key Vault that is currently supported.

Source: Public preview: Azure Front Door integration with managed identities

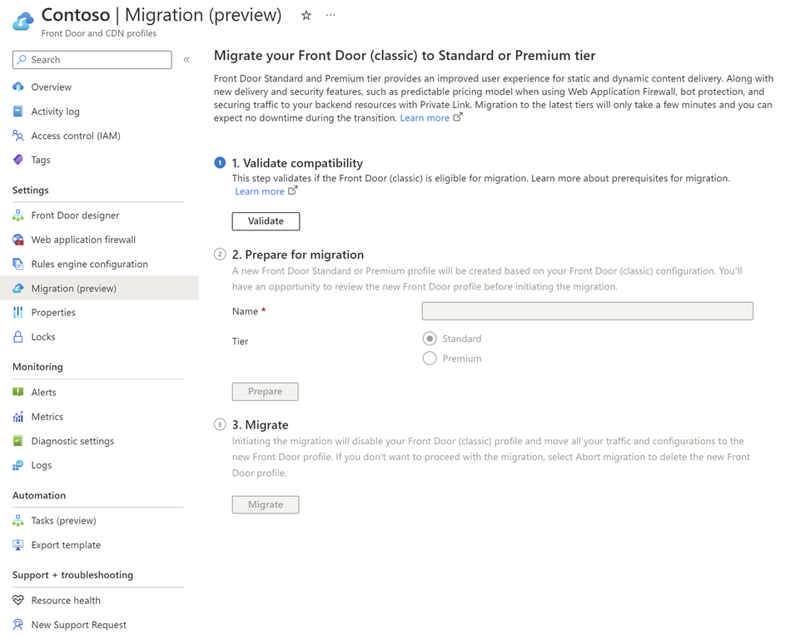

In March of this year, Microsoft announced the general availability of two new Azure Front Door tiers. Azure Front Door Standard and Premium are our native, modern cloud content delivery network (CDN) catering to both dynamic and static content delivery acceleration with built-in turnkey security and a simple and predictable pricing model.

The migration capability enables you to perform a zero-downtime migration from Azure Front Door (classic) to Azure Front Door Standard or Premium in three steps, or five steps if your Azure Front Door (classic) instance has custom domains with your own certificates. The migration takes a few minutes to complete, depending on the complexity of your Azure Front Door (classic) instance, such as the number of domains, backend pools, routes, and other configurations.

Source: Public preview: Azure Front Door zero downtime migration

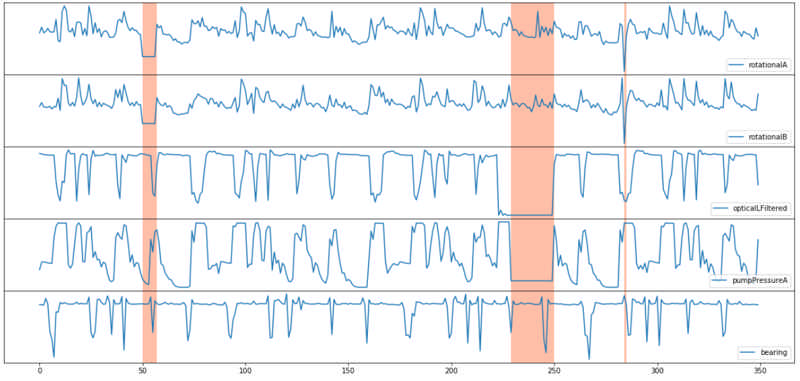

You will be able to use dependencies and inter-correlations between up to 300 different signals, and easily integrate multivariate time-series anomaly detection into predictive maintenance solutions, AI for IT operations (AIOps) monitoring solutions for complex enterprise software, or business intelligence tools. From the anomaly results detected by this feature, you will not only know that an anomaly is occurring before a disaster happens, but also get the contribution rank of the anomalous variables, which saves time and effort in root cause analysis.
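To make "contribution rank" concrete, here is a deliberately simplified Python stand-in: it scores each signal's latest value by its absolute z-score against that signal's history and ranks signals by that score. Unlike the service, it treats signals independently and ignores inter-correlations, so it illustrates the shape of the output, not the detection method. The signal names and values are hypothetical.

```python
import statistics


def rank_contributions(history: dict[str, list[float]], current: dict[str, float]):
    """Return (overall score, signal names ranked by contribution, highest first)."""
    contributions = {}
    for name, values in history.items():
        mean = statistics.fmean(values)
        stdev = statistics.pstdev(values) or 1.0  # guard against constant signals
        contributions[name] = abs(current[name] - mean) / stdev
    ranked = sorted(contributions, key=contributions.get, reverse=True)
    return sum(contributions.values()), ranked


history = {"temperature": [20, 21, 19, 20], "pressure": [100, 101, 99, 100]}
score, ranked = rank_contributions(history, {"temperature": 20, "pressure": 110})
print(ranked)  # ['pressure', 'temperature']
```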

Source: General availability: Multivariate Anomaly Detection

When you deploy a site to Azure Static Web Apps, each pull request against your production branch will generate a preview deployment available at a temporary URL. This can be configured in the GitHub Actions workflow by enabling deployment from branches or by specifying a deployment environment name.

To deploy non-production branches to a preview environment, you are required to update the GitHub workflow to run when a push is made to the specific branches and define the production_branch property in the build_and_deploy_job configuration.

Alternatively, you can push changes to a named preview environment by configuring a deployment_environment property in the workflow.

Source: Generally available: Static Web Apps support for stable URLs for preview environments

The new Virtual Machine software reservations enable savings on your Virtual Machine software costs when you make a one- to three-year commitment for plans offered by third-party publishers such as Canonical, Citrix, and Red Hat.

- You can choose to pay monthly or up-front

- You can change the size of the deployed Virtual Machine, and Microsoft handles the application of reservation benefits and overage charges

- You do not have to redeploy your workloads to use the reservation benefits

Source: General availability: Virtual Machine software reservations

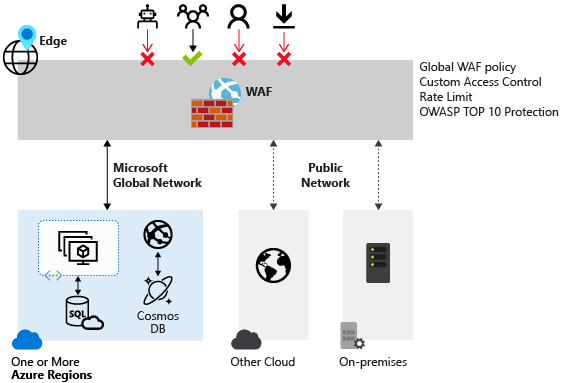

We are announcing the general availability of the Default Rule Set 2.1 (DRS 2.1) on Azure's global Web Application Firewall (WAF) running on Azure Front Door. This rule set is available on the Azure Front Door Premium tier.

DRS 2.1 is baselined on the Open Web Application Security Project (OWASP) Core Rule Set (CRS) 3.3.2 and includes additional proprietary protection rules developed by the Microsoft Threat Intelligence team. As with previous DRS releases, DRS 2.1 rules are also tailored by the Microsoft Threat Intelligence Center (MSTIC). The MSTIC team analyzes Common Vulnerabilities and Exposures (CVEs) and adapts the CRS ruleset to address those issues while also reducing false positives for our customers.

Source: General availability: Default Rule Set 2.1 for Azure Web Application Firewall

Logic Apps Standard VS Code Extension now allows you to export groups of logic apps workflows deployed to Azure, either in Consumption SKU or under an Integration Service Environment (ISE) as a local Logic Apps Standard project, allowing you to locally test the exported logic apps and either deploy directly to Azure or push the project to your preferred source control repository.

The tool also generates ARM templates to support deploying a Logic Apps Standard application and any associated Azure connectors via script, parameterizes your connection configuration to simplify moving between environments, and deploys new instances of your Azure connections so that local testing doesn’t impact existing applications.

To learn more about the tool, including how to install and a walkthrough of the export process, follow one of the paths below:

- Exporting Consumption Workflows to Logic Apps Standard

- Exporting ISE workflows to Logic Apps Standard

Source: Public preview: Exporting ISE and Consumption Logic Apps to Standard SKU

As part of our commitment to delivering the best possible value for Azure confidential computing, we're announcing support for creating confidential VMs with Ephemeral OS disks. This enables customers with stateless workloads to benefit from trusted execution environments (TEEs), which protect data being processed from access from outside the TEE.

Source: General availability: Ephemeral OS disk support for confidential virtual machines

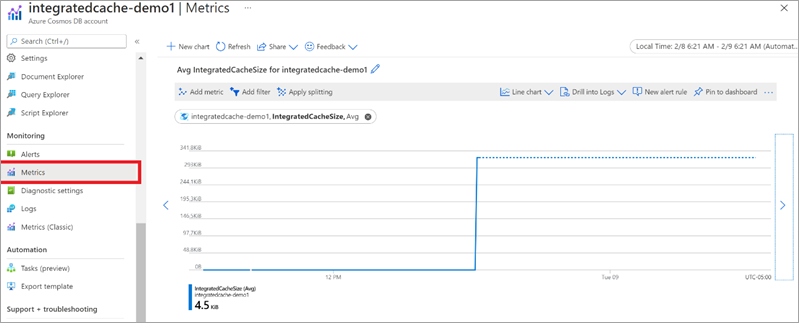

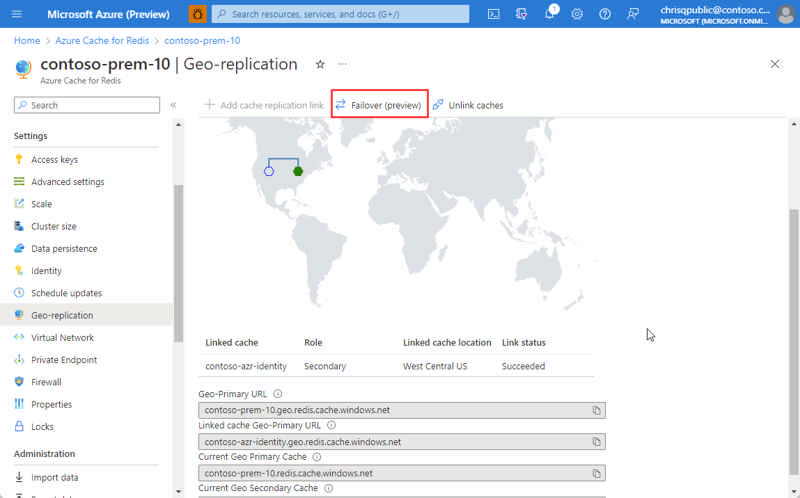

Several enhancements have been made to the passive geo-replication functionality offered on the Premium tier of Azure Cache for Redis. New metrics are available for you to better track the health and status of your geo-replication link, including statistics around the amount of data that is waiting to be replicated. With this feature, you can now initiate a failover between geo-primary and geo-replica caches with a single click or CLI command, eliminating the hassle of manually unlinking and relinking caches. A global cache URL is also now offered that will automatically update your DNS records after geo-failovers are triggered, allowing your application to only manage one cache address.

Source: Public preview: Improved passive geo-replication for Azure Cache for Redis

Customers can now deterministically restrict their workflows to run on a specific set of runners using the names of their runner groups in the runs-on key of their workflow YAML. This prevents the unintended case where your job runs on a runner outside your intended group because the unintended runner shared the same labels as the runners in your intended runner group.

Example of the new syntax to ensure a runner is targeted from your intended runner group:

```yaml
runs-on:
  group: my-group
  labels: [ self-hosted, label-1 ]
```

In addition to the workflow file syntax changes, there are also new validation checks for runner groups at the organization level. Organizations will no longer be able to create runner groups using a name that already exists at the enterprise level. A warning banner will display for any existing duplicate runner groups at the organization level. There's no restriction on the creation of runner groups at the enterprise level.

This change applies only to enterprise plan customers, because only enterprise plan customers can create runner groups.
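To make the label-collision problem concrete, here is a small Python model of matching a job to runners with and without a group restriction. It is a simplified sketch under stated assumptions, not GitHub's scheduler: the `Runner` class, the `eligible_runners` helper, and the runner names are hypothetical, and a runner is assumed eligible when it carries every requested label and, if a group is given, belongs to that group.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Runner:
    """Hypothetical model of a self-hosted runner."""
    name: str
    group: str
    labels: frozenset = field(default_factory=frozenset)


def eligible_runners(runners, labels, group: Optional[str] = None):
    """Return names of runners that carry every requested label.

    When `group` is given, runners outside that group are excluded even if
    their labels match, mirroring the `runs-on.group` restriction.
    """
    wanted = set(labels)
    return [
        r.name
        for r in runners
        if wanted <= r.labels and (group is None or r.group == group)
    ]


runners = [
    Runner("build-01", "my-group", frozenset({"self-hosted", "label-1"})),
    # Same labels, but registered in a different runner group
    Runner("other-01", "other-group", frozenset({"self-hosted", "label-1"})),
]

# Labels alone match both runners
print(eligible_runners(runners, ["self-hosted", "label-1"]))
# Adding the group keeps the job on the intended runner
print(eligible_runners(runners, ["self-hosted", "label-1"], group="my-group"))
```

Because `other-01` carries the same labels, only the group check keeps the job off it, which is exactly the situation the new `group` key addresses.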

Source: GitHub Actions: Restrict workflows to specific runners using runner group names

Beginning in November, Databricks is rolling out a new compute option called Databricks SQL Pro, joining the SQL product family of Classic and Serverless. Like Serverless SQL, SQL Pro includes performance and integration features that expand the SQL experience on the Lakehouse Platform. The primary difference is that SQL Pro keeps compute in the customer's account.

Azure Databricks SQL Pro’s features include:

- Predictive I/O - Predictive I/O boosts query performance by leveraging the years of experience Databricks has in building large AI/ML systems to make the lakehouse a more intelligent data warehouse:

    - Applying deep learning techniques to our data lakehouse

    - Determining the most efficient access pattern to read data

    - For select queries, Predictive I/O calculates probabilities to anticipate where the next row match will occur, only reading that data from cloud storage

- Native Geospatial Features - 30+ built-in H3 expressions for geospatial processing and analysis in Photon-enabled clusters, available in SQL, Scala, and Python

- Query federation - Databricks Warehouse now supports querying live data from various databases through federation. Query federation allows BI applications to integrate data in the lakehouse with external data sources, providing a rich query experience

- Workflows integrations - Invoke DBSQL tasks like queries, alerts, and running dashboards from within Databricks Workflows

Many more feature and performance improvements are on the way, such as Materialized Views and Python UDFs. SQL Pro is generally available everywhere Databricks SQL Classic is available.

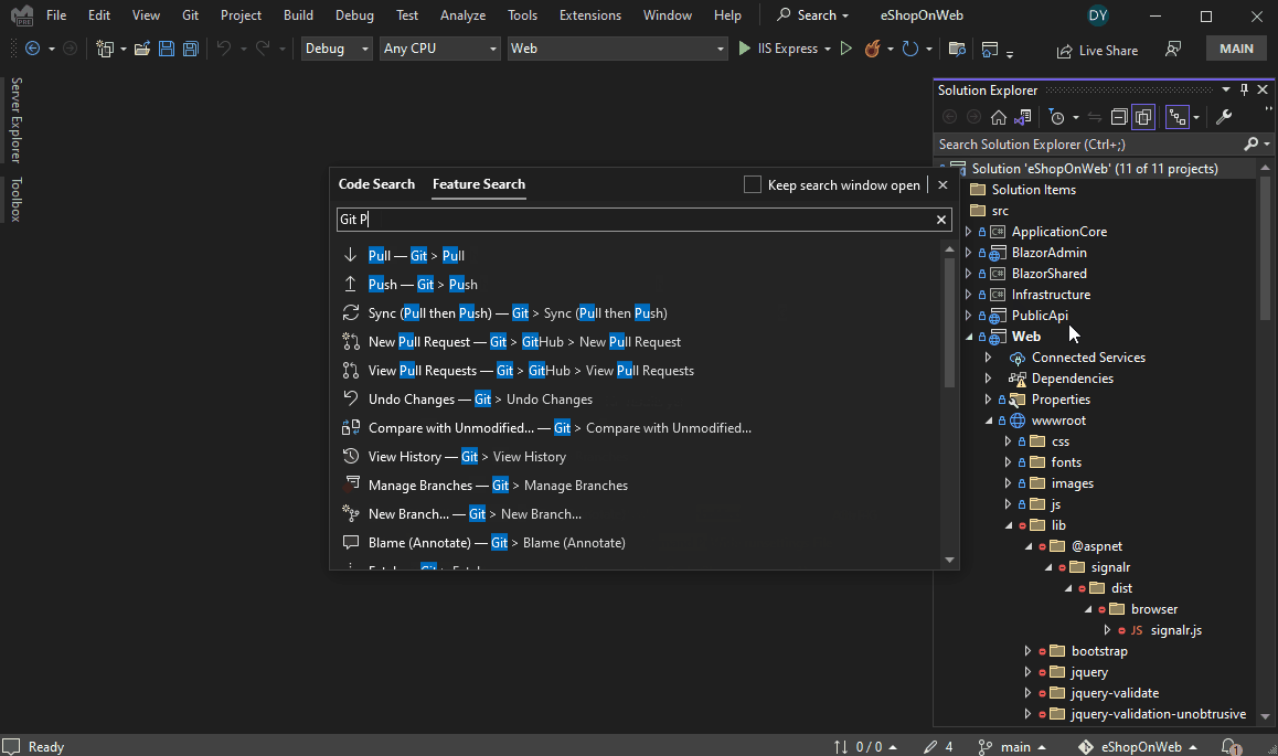

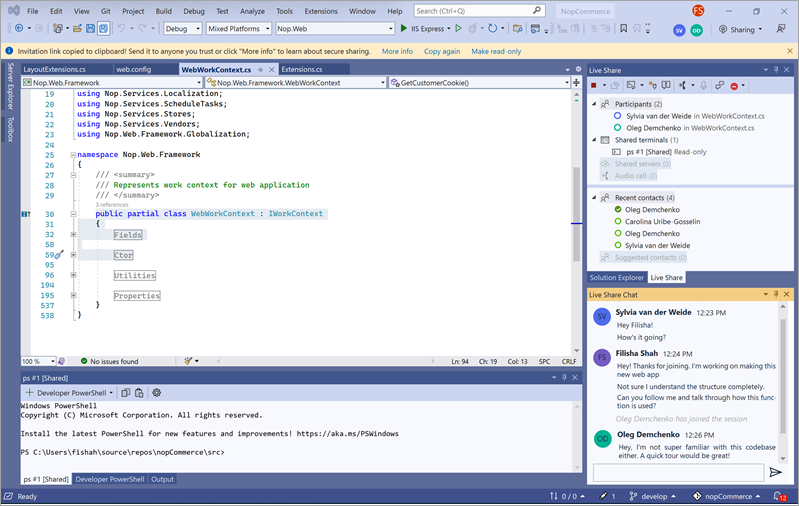

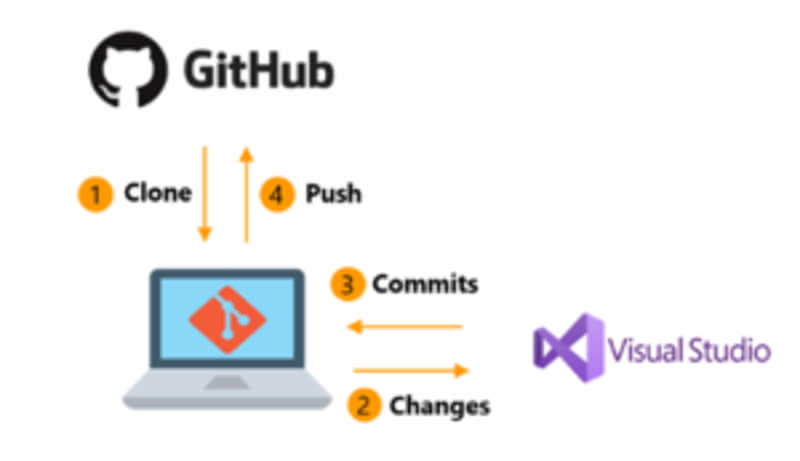

We’re introducing a new way to learn about Git, GitHub, and version control in Visual Studio: an email learning series with actionable challenges and a repository to practice your skills! We found from our Happiness Tracking Survey that 34% of our VS developers aren’t using any form of version control. While GitHub makes collaboration easy, even smaller teams or solo developers can boost their productivity and code management with version control. We’ll teach you how to back up your code, sync across devices, roll back breaking changes, and more within the IDE. Sign up for the new and improved Getting Started with GitHub in Visual Studio series and master GitHub in short lessons over the next four weeks.

Source: Learning Series: Get started with GitHub in Visual Studio

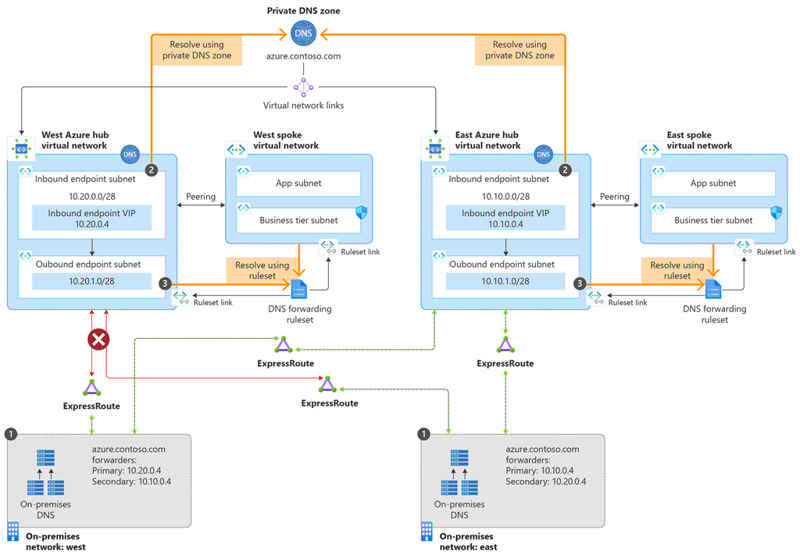

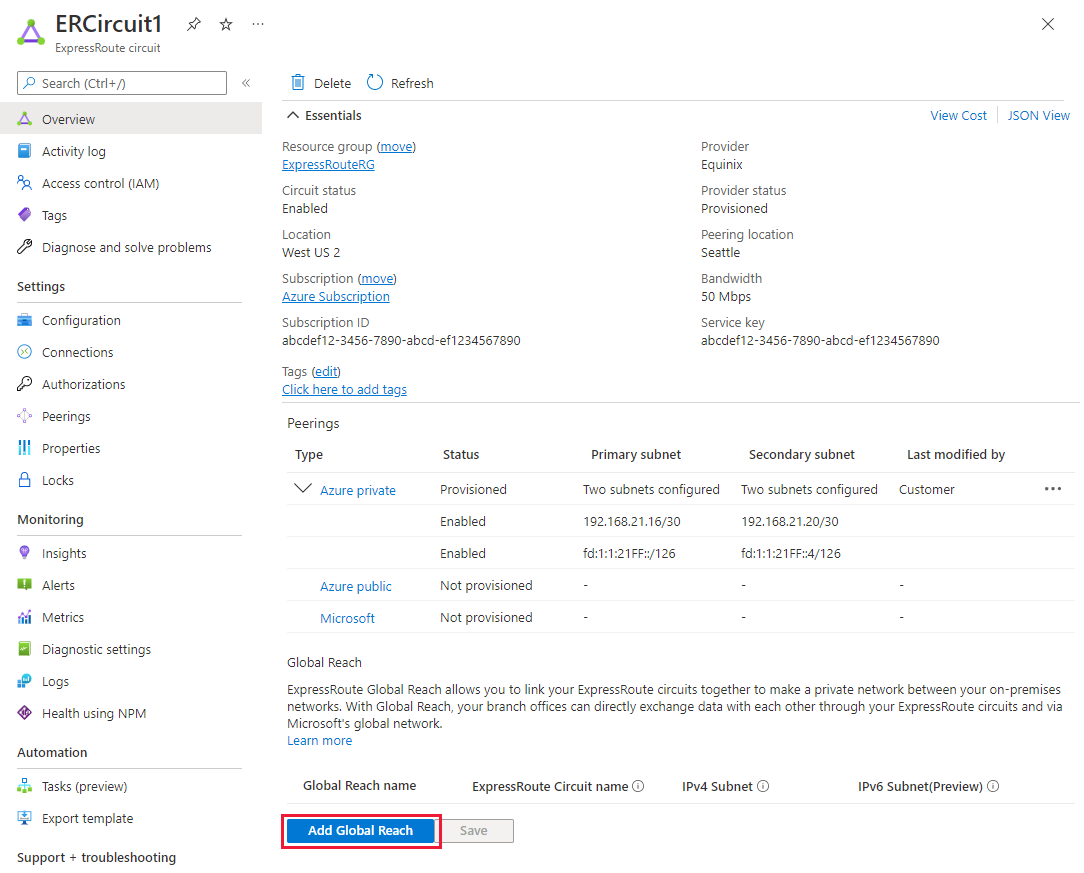

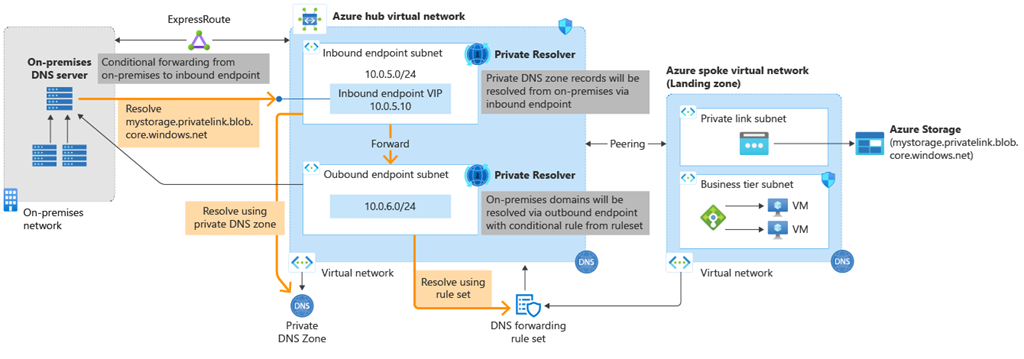

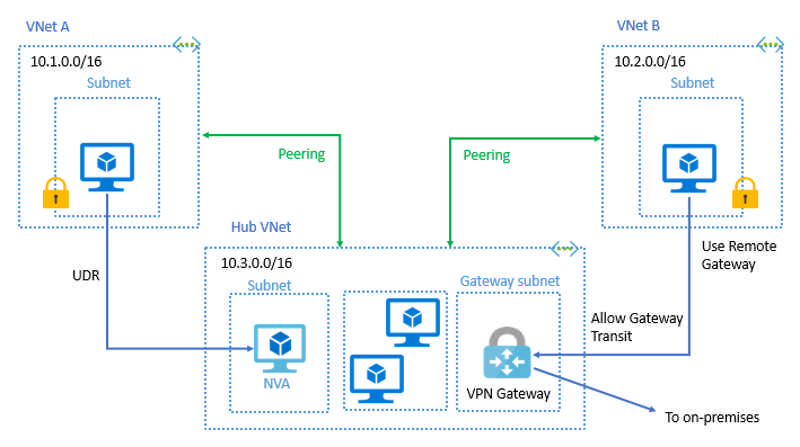

A successful hybrid networking strategy demands DNS services that work seamlessly across on-premises and cloud networks. Azure DNS Private Resolver now provides a fully managed recursive resolution and conditional forwarding service for Azure virtual networks. With this service, you can resolve names hosted in Azure DNS private zones from on-premises networks, and you can forward DNS queries that originate in Azure virtual networks to a specified destination server for resolution.

This service provides a highly available and resilient DNS infrastructure on Azure for a fraction of the cost of running DNS servers on traditional IaaS VMs in virtual networks. You can seamlessly integrate with Private DNS Zones and unlock key scenarios with minimal operational overhead.

We are excited to share that Azure DNS Private Resolver is now generally available.

A quick overview of Azure DNS

We offer two types of Azure DNS zones, private and public, for hosting your private and public DNS records:

- Azure Private DNS: Azure Private DNS provides a reliable and secure DNS service for your virtual network. Azure Private DNS manages and resolves domain names in the virtual network without the need to configure a custom DNS solution. By using private DNS zones, you can use your own custom domain name instead of the Azure-provided names during deployment.

- Azure Public DNS: DNS domains in Azure DNS are hosted on Azure's global network of DNS name servers. Azure DNS uses anycast networking. Each DNS query is answered by the closest available DNS server to provide fast performance and high availability for your domain.

In the preceding illustration, multi-region workloads running on Azure with Azure DNS Private Resolver are provisioned in two regional, centralized virtual networks, each with one or more spoke virtual networks peered to it. These centralized virtual networks have inbound and outbound endpoints provisioned. On-premises, there are two distinct locations (East and West), and each location connects via ExpressRoute to the centralized virtual network where Private Resolver is provisioned. Each on-premises location has one or more local DNS servers configured to conditionally forward queries to the inbound endpoint of Private Resolver. The local DNS servers in East use the IP address of the East inbound endpoint as the primary DNS target and the West inbound endpoint as secondary. Conversely, the local DNS servers in West use the West inbound endpoint as the primary DNS target and the East inbound endpoint as secondary. A single private DNS zone is linked to both regions, so both on-premises locations can resolve names from this zone even in the event of a regional failure.
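The East/West conditional forwarding setup described above can be sketched as an ordered list of forwarding targets per on-premises site. This Python model is illustrative only: the IP addresses are hypothetical, and the `resolve_target` helper simply picks the first reachable inbound endpoint, a simplification of how a real DNS server handles forwarder failover.

```python
# Hypothetical inbound endpoint IPs for the two regional Private Resolver deployments
INBOUND_ENDPOINTS = {"east": "10.10.0.4", "west": "10.20.0.4"}

# Each on-premises site forwards to its own region first, then to the other region
FORWARDER_ORDER = {
    "east": [INBOUND_ENDPOINTS["east"], INBOUND_ENDPOINTS["west"]],
    "west": [INBOUND_ENDPOINTS["west"], INBOUND_ENDPOINTS["east"]],
}


def resolve_target(site: str, reachable: set) -> str:
    """Return the first reachable inbound endpoint for an on-premises site."""
    for endpoint in FORWARDER_ORDER[site]:
        if endpoint in reachable:
            return endpoint
    raise RuntimeError(f"no inbound endpoint reachable from {site}")


all_up = set(INBOUND_ENDPOINTS.values())
print(resolve_target("east", all_up))         # East uses its primary (East) endpoint
print(resolve_target("east", {"10.20.0.4"}))  # East region down: fall back to West
```

Because the single private DNS zone is linked to both regions, either inbound endpoint can answer for it, which is what makes this simple fallback order sufficient.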

Source: Announcing Azure DNS Private Resolver general availability

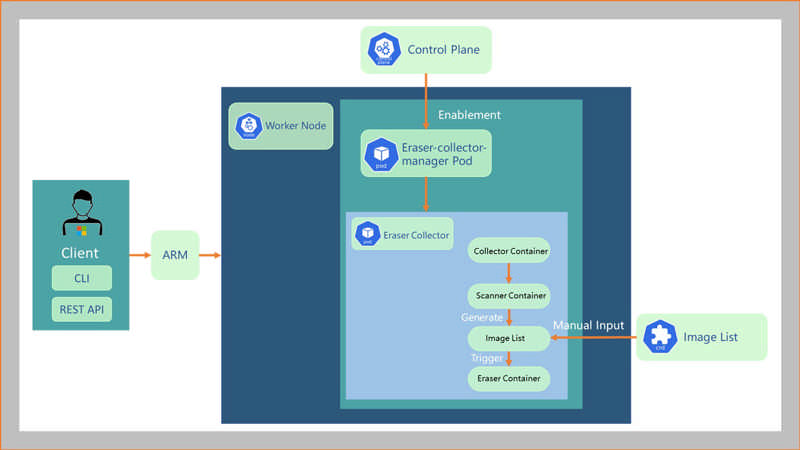

It's common to use pipelines to build and deploy images on Azure Kubernetes Service (AKS) clusters. This process often doesn't account for the stale images left behind and can lead to image bloat on cluster nodes. These images can present security issues as they may contain vulnerabilities.

With Image Cleaner, you can detect and automatically remove all unused and vulnerable images cached on AKS nodes, keeping the nodes cleaner and safer.
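The core idea of cleaning a node can be sketched as a set computation over cached, in-use, and flagged images. This is a hypothetical simplification, not Image Cleaner's implementation: the image names and the `images_to_remove` helper are made up, and it assumes an image referenced by a running container is never removed, with an optional switch to remove only unused images that a scanner has flagged.

```python
def images_to_remove(cached, in_use, vulnerable, only_vulnerable=False):
    """Return cached images that this model would delete from a node.

    Images referenced by running containers are never removed. With
    `only_vulnerable=True`, only unused images flagged by a scanner are removed.
    """
    unused = set(cached) - set(in_use)
    if only_vulnerable:
        unused &= set(vulnerable)
    return sorted(unused)


cached = {"app:v1", "app:v2", "app:v3", "nginx:1.21"}
in_use = {"app:v3", "nginx:1.21"}
vulnerable = {"app:v1", "nginx:1.21"}  # hypothetical scanner findings

print(images_to_remove(cached, in_use, vulnerable))                        # every unused image
print(images_to_remove(cached, in_use, vulnerable, only_vulnerable=True))  # unused and flagged
```

Note that `nginx:1.21` is flagged but still in use, so this model keeps it; stale builds such as `app:v1` and `app:v2` are the bloat the pipeline left behind.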

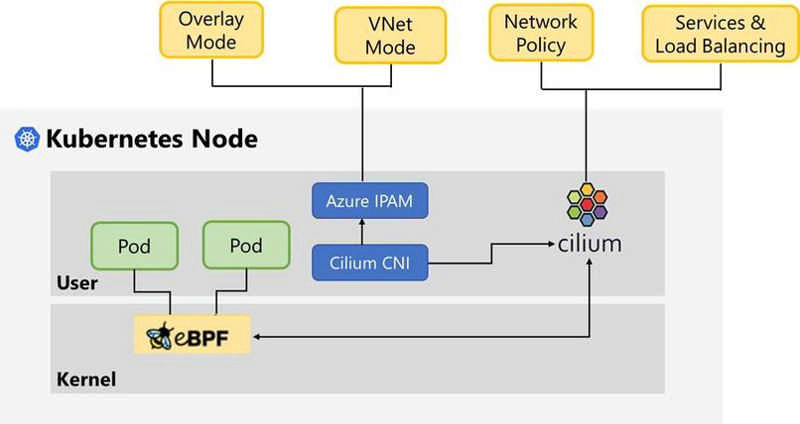

Public preview: Azure CNI Powered by Cilium

Azure CNI powered by Cilium provides native support for the next-generation Cilium eBPF data plane in AKS clusters running Azure CNI. It offers Pod networking, basic Kubernetes Network Policies, and high-performance service load balancing. The eBPF data plane is available in both VNet mode and Overlay mode of Azure CNI.

Mariner is an open-source Linux distribution created by Microsoft and is now available for preview as a container host on Azure Kubernetes Service (AKS).

Optimized for AKS, the Mariner container host provides reliability and consistency from cloud to edge across the AKS, AKS-HCI, and Arc products. You can deploy Mariner node pools in a new cluster, add Mariner node pools to your existing Ubuntu clusters, or migrate your Ubuntu nodes to Mariner nodes. To learn more about Mariner, see the Mariner documentation.

Why use Mariner

The Mariner container host on AKS uses a native AKS image that provides one place to do all Linux development. Every package is built from source and validated, ensuring your services run on proven components. Mariner is lightweight and includes only the packages needed to run container workloads, which reduces the attack surface and eliminates patching and maintenance of unnecessary packages. At its base layer, Mariner has a Microsoft-hardened kernel tuned for Azure.

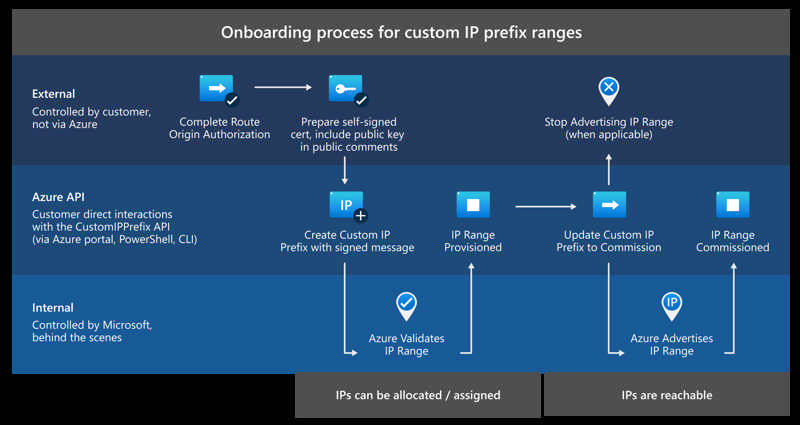

The ability to bring your own public IP ranges is now available in all US Government regions.

Additionally:

- You can now bring your own IPv6 ranges to Azure. These ranges must be /48 in size and can be split into multiple regional /64 ranges, from which a subset of IPs can be used as Public IP Prefixes.

- A regional commissioning feature now allows you to advertise a range internally within an Azure region prior to full global advertisement to the Internet, easing the migration process for a range that is already live outside of Azure.
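The /48-to-/64 split mentioned above is easy to explore with Python's standard `ipaddress` module. A minimal sketch, assuming the documentation prefix `2001:db8::/48` as a stand-in for a real BYOIP range; the region-to-range assignment is hypothetical and only illustrates carving out one /64 per region.

```python
import ipaddress
from itertools import islice

# 2001:db8::/48 is reserved for documentation (RFC 3849); it stands in for a real range
global_range = ipaddress.ip_network("2001:db8::/48")

# A /48 holds 2 ** (64 - 48) = 65,536 possible /64 ranges
num_regional_ranges = 2 ** (64 - global_range.prefixlen)

# Hypothetical plan: take the first /64 for each region you intend to use
regions = ["usgovvirginia", "usgovarizona", "usgovtexas"]
regional_ranges = dict(
    zip(regions, islice(global_range.subnets(new_prefix=64), len(regions)))
)

print(num_regional_ranges)
for region, net in regional_ranges.items():
    print(region, net)
```

`subnets(new_prefix=64)` is a generator, so `islice` avoids materializing all 65,536 networks just to take the first few.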

Source: Generally available: Custom IP Prefixes (BYOIP) now available in US Government regions

Enterprises and hobbyists alike have been using Azure Computer Vision’s Image Analysis API to garner various insights from their images. These insights help power scenarios such as digital asset management, search engine optimization (SEO), image content moderation, and alt text for accessibility among others.

Newly improved features including read (OCR)

We are thrilled to announce the preview release of Computer Vision Image Analysis 4.0 which combines existing and new visual features such as read optical character recognition (OCR), captioning, image classification and tagging, object detection, people detection, and smart cropping into one API. One call is all it takes to run all these features on an image.

The OCR feature integrates more deeply with the Computer Vision service and includes performance improvements optimized for image scenarios, making OCR easy to use in user interfaces and near real-time experiences. Read now supports 164 languages, including languages written in the Cyrillic and Arabic scripts, as well as Hindi.

Tested at scale and ready for deployment

Microsoft’s own products, including PowerPoint, Designer, Word, Outlook, Edge, and LinkedIn, use Vision APIs to power design suggestions, alt text for accessibility, SEO, document processing, and content moderation.

You can get started with the preview by trying out the visual features with your images on Vision Studio. Upgrading from a previous version of the Computer Vision Image Analysis API to V4.0 is simple with these instructions.

We will continue to release breakthrough vision AI through this new API over the coming months, including capabilities powered by the Florence foundation model featured in this year’s keynote at CVPR, the premier computer vision conference.

Additional Computer Vision services

Spatial Analysis is also in preview. You can use the spatial analysis feature to create apps that can count people in a room, understand dwell times in front of a retail display, and determine wait times in lines. Build solutions that enable occupancy management and social distancing, optimize in-store and office layouts, and accelerate the checkout process. By processing video streams from physical spaces, you're able to learn how people use them and maximize the space's value to your organization.

The Azure Face service provides AI algorithms that detect, recognize, and analyze human faces in images. Facial recognition software is important in many scenarios, such as identity verification, touchless access control, and face blurring for privacy. Face service access is limited based on eligibility and usage criteria in order to support our Responsible AI principles. Face service is only available to Microsoft-managed customers and partners. Use the Face Recognition intake form to apply for access. For more information, see the Face limited access page.

Computer Vision and Responsible AI

We are excited to see how our customers use Computer Vision’s Image Analysis API with these new and updated features. Our technology advancements are also guided by Microsoft’s Responsible AI process and our principles of fairness, inclusiveness, reliability and safety, transparency, privacy and security, and accountability. We put these ethical standards into practice through the Office of Responsible AI (ORA), which sets our rules and governance processes; the AI Ethics and Effects in Engineering and Research (Aether) Committee, which advises our leadership on the challenges and opportunities presented by AI innovations; and Responsible AI Strategy in Engineering (RAISE), a team that enables the implementation of Microsoft Responsible AI rules across engineering groups.

Get started

Start improving how you analyze images with Image Analysis 4.0, featuring a unified API endpoint and a new OCR model.

- Computer Vision documentation.

- Image Analysis documentation.

- Quick Start for Image Analysis.

- Vision Studio for demoing product solutions.

Source: Image Analysis 4.0 with new API endpoint and OCR model in preview

Azure regions and availability zones (AZ) are designed to help you achieve resiliency and reliability for your business-critical workloads. The Azure NetApp Files availability zone volume placement feature lets you deploy new volumes in the logical availability zone of your choice to support enterprise, mission-critical high availability (HA) deployments across multiple availability zones. This public preview of the feature is available in all availability zone-enabled regions where Azure NetApp Files is present.

Source: Public preview: Availability zone volume placement for Azure NetApp Files

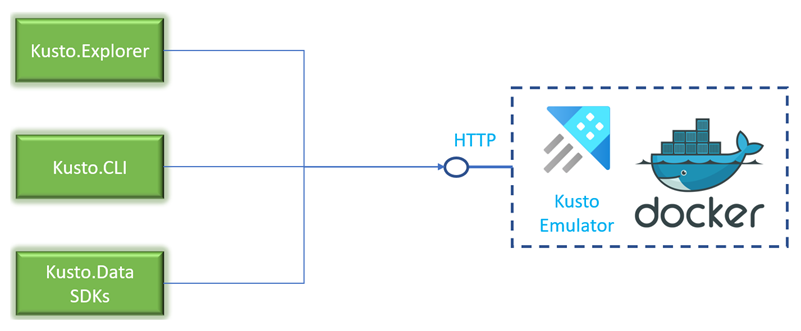

Azure Data Explorer now supports the ingestion of data from many receivers via the OpenTelemetry exporter.

OpenTelemetry (OTel) is a vendor-neutral open-source observability framework for instrumenting, generating, collecting, and exporting telemetry data such as traces, metrics, and logs.

We are releasing Azure Data Explorer OpenTelemetry exporter, which supports ingestion of data from many receivers into Azure Data Explorer, allowing you to instrument, generate, collect, and store data using a vendor-neutral open-source framework.

Source: General availability: OpenTelemetry exporter for Azure Data Explorer

SSH File Transfer Protocol (SFTP) support for Azure Blob Storage is now generally available.

Azure Blob Storage now supports SFTP, enabling you to leverage object storage economics and features for your SFTP workloads. With just one click, you can provision a fully managed, highly scalable SFTP endpoint for your storage account. This expands Blob Storage’s multi-protocol access capabilities and eliminates data silos – meaning you can run different applications, requiring different protocols, on a single storage platform with no code changes.

Source: Generally available: SFTP support for Azure Blob Storage

The option to store backups of workloads protected by Azure Backup in zone-redundant vaults is now generally available. When you protect a resource with a zone-redundant storage (ZRS) vault, the backups replicate synchronously across three availability zones in a region, so you can restore and recover your data even if a zone goes down. For organizations with a compliance requirement that data must not cross the regional boundary, zone-redundant storage is the right and preferred choice for backups.

With the general availability of this feature, you have a broader set of redundancy (storage replication) options to choose from for your backup data. Based on your data residency, data resiliency, and total cost of ownership (TCO) requirements, you can select locally redundant storage (LRS), zone-redundant storage (ZRS), or geo-redundant storage (GRS).
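The trade-off between the three options can be summarized as a small decision helper. This is an illustrative simplification, not official Azure guidance: the `choose_backup_redundancy` function and its inputs are hypothetical, and it encodes only the general properties of each option (LRS keeps copies within a single datacenter, ZRS spreads them across three zones in one region, GRS also copies data to a secondary region).

```python
def choose_backup_redundancy(data_must_stay_in_region: bool,
                             survive_zone_outage: bool,
                             survive_region_outage: bool) -> str:
    """Suggest a backup storage redundancy option (illustrative only)."""
    if survive_region_outage:
        if data_must_stay_in_region:
            # GRS copies data to a secondary region, which residency rules may forbid
            raise ValueError("regional resilience conflicts with in-region residency")
        return "GRS"
    if survive_zone_outage:
        # ZRS replicates synchronously across three zones and stays in the region
        return "ZRS"
    # LRS keeps copies within a single datacenter and is the lowest-cost option
    return "LRS"


# An organization that must keep data in-region but tolerate a zone failure
print(choose_backup_redundancy(True, True, False))
```

The in-region, zone-resilient case lands on ZRS, matching the recommendation above for organizations whose data must not cross the regional boundary.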

Azure Backup currently supports ZRS in these regions.

Source: General availability: Zone-redundant storage support by Azure Backup

Azure Kubernetes Service is increasing the maximum node limit per cluster from 1,000 nodes to 5,000 nodes for customers using the uptime-SLA feature. The default limit for all AKS clusters will continue to be 1,000 nodes. However, AKS clusters using the uptime SLA feature can now request an increase in the AKS service quota up to a maximum of 5,000 nodes across all node pools in a cluster by creating a support request.

Workloads that need a large amount of compute resources can now scale beyond 1,000 virtual machines (nodes) within the same cluster, removing the operational overhead of managing cross-cluster deployments and workloads. You can scale your clusters up to 5,000 nodes using either manual scaling or the cluster autoscaler.

This feature is available only for clusters that use the uptime SLA and the Azure CNI network plugin.
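The AKS node-limit rules above can be encoded as a quick pre-check before scaling. This is a hypothetical helper, not an AKS API: it assumes the limit counts nodes across all node pools, that the 5,000-node ceiling applies only when the uptime SLA and Azure CNI are both in use, and that going past 1,000 still requires an approved quota increase, modeled here as `approved_quota`.

```python
DEFAULT_LIMIT = 1_000
MAX_LIMIT = 5_000


def max_cluster_nodes(uptime_sla: bool, azure_cni: bool,
                      approved_quota: int = DEFAULT_LIMIT) -> int:
    """Node ceiling for a whole cluster under the rules described above."""
    if uptime_sla and azure_cni:
        # Going past 1,000 needs an approved quota increase, capped at 5,000
        return min(max(approved_quota, DEFAULT_LIMIT), MAX_LIMIT)
    return DEFAULT_LIMIT


def can_scale_to(node_pools: dict, **cluster) -> bool:
    """The limit counts nodes across all node pools, not per pool."""
    return sum(node_pools.values()) <= max_cluster_nodes(**cluster)


pools = {"system": 5, "batch": 2_995, "gpu": 200}  # hypothetical pools, 3,200 nodes
print(can_scale_to(pools, uptime_sla=False, azure_cni=True))
print(can_scale_to(pools, uptime_sla=True, azure_cni=True, approved_quota=5_000))
```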

Stream Analytics now supports end-to-end exactly-once semantics when writing to Azure Data Lake Storage Gen2. Your jobs now guarantee no data loss and no duplicate output. This simplifies your streaming pipeline, because you no longer have to implement, monitor, and troubleshoot deduplication logic.

Source: Public preview: Exactly once delivery for Azure Data Lake Storage Gen2

Azure savings plan for compute is an easy and flexible way to save significantly on compute services compared to pay-as-you-go prices. The savings plan unlocks lower prices on select compute services when customers commit to spending a fixed hourly amount for one or three years. Choose whether to pay all upfront or monthly at no extra cost. As you use select compute services across the world, your usage is covered by the plan at reduced prices, helping you get more value from your cloud budget. Whenever your usage exceeds your hourly commitment, the excess is billed at your regular pay-as-you-go prices. Because savings apply automatically across compute usage globally, you'll continue saving even as your usage needs change over time.
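The hourly billing arithmetic can be illustrated with a worked example. A minimal sketch using Python's `decimal` module, assuming a single resource type billed hourly, made-up rates, and that the hourly commitment is charged in full even when usage falls short (unused commitment does not roll over); `hourly_cost` is a hypothetical helper, not an Azure pricing API.

```python
from decimal import Decimal


def hourly_cost(commitment: Decimal, units: Decimal,
                plan_rate: Decimal, payg_rate: Decimal) -> Decimal:
    """Cost for one hour of a single resource type under a savings plan.

    Usage is first charged at the discounted plan rate against the hourly
    commitment; whatever the commitment can't cover is billed at pay-as-you-go.
    The commitment itself is paid even if usage falls short of it.
    """
    discounted_cost = units * plan_rate
    if discounted_cost <= commitment:
        return commitment
    covered_units = commitment / plan_rate
    return commitment + (units - covered_units) * payg_rate


# Hypothetical rates: $0.50/unit-hour with the plan, $0.80/unit-hour pay-as-you-go
commit, plan, payg = Decimal("5.00"), Decimal("0.50"), Decimal("0.80")
print(hourly_cost(commit, Decimal("8"), plan, payg))   # under the commitment
print(hourly_cost(commit, Decimal("12"), plan, payg))  # 10 units covered, 2 at PAYG
```

In the second hour, the $5.00 commitment covers 10 units at the plan rate and the remaining 2 units cost $0.80 each, for $6.60 in total. `Decimal` is used instead of `float` so currency amounts stay exact.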

Source: General availability: Azure savings plan for compute

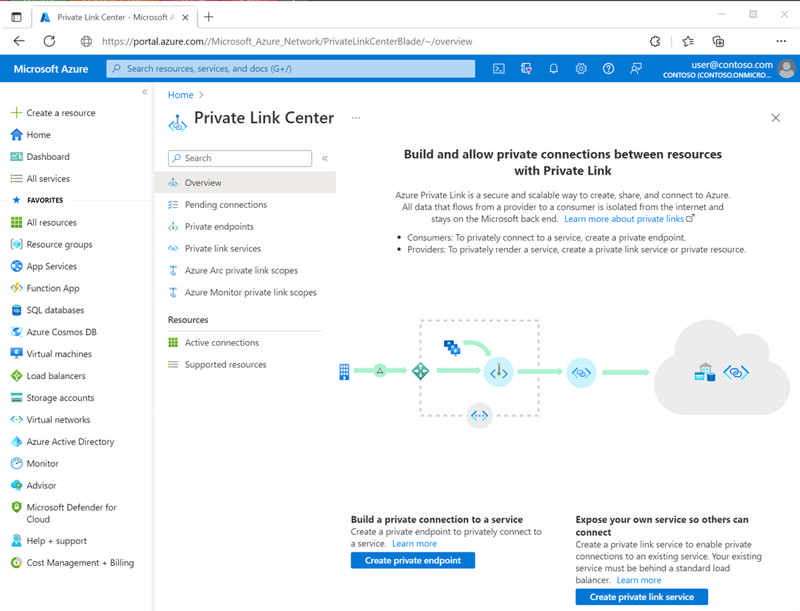

Private endpoint support for statically defined IP addresses is now generally available. This feature lets you customize your deployments: you can allocate IP addresses you have already reserved to your private endpoint instead of relying on the randomness of Azure's dynamic IP allocation. As a result, the private endpoint keeps a consistent IP address that you can use alongside IP-based security rules and scripts.
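Before reserving an address for a private endpoint, it can help to check that the address is actually assignable. A minimal sketch using Python's standard `ipaddress` module, assuming Azure's standard per-subnet reservations (the first four addresses and the last address of every subnet); the `is_assignable` helper and the sample addresses are hypothetical.

```python
import ipaddress


def is_assignable(ip: str, subnet: str, in_use=()) -> bool:
    """Return True if `ip` could serve as a static private endpoint address."""
    net = ipaddress.ip_network(subnet)
    addr = ipaddress.ip_address(ip)
    taken = {ipaddress.ip_address(a) for a in in_use}
    if addr not in net or addr in taken:
        return False
    # Assumed Azure reservations: the network address plus the next three, and the last address
    first_usable = net.network_address + 4
    last_usable = net.broadcast_address - 1
    return first_usable <= addr <= last_usable


subnet = "10.0.1.0/24"  # hypothetical private endpoint subnet
print(is_assignable("10.0.1.10", subnet))                 # usable
print(is_assignable("10.0.1.2", subnet))                  # reserved by Azure
print(is_assignable("10.0.1.10", subnet, ["10.0.1.10"]))  # already allocated
```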

Source: General availability: Static IP configurations of private endpoints

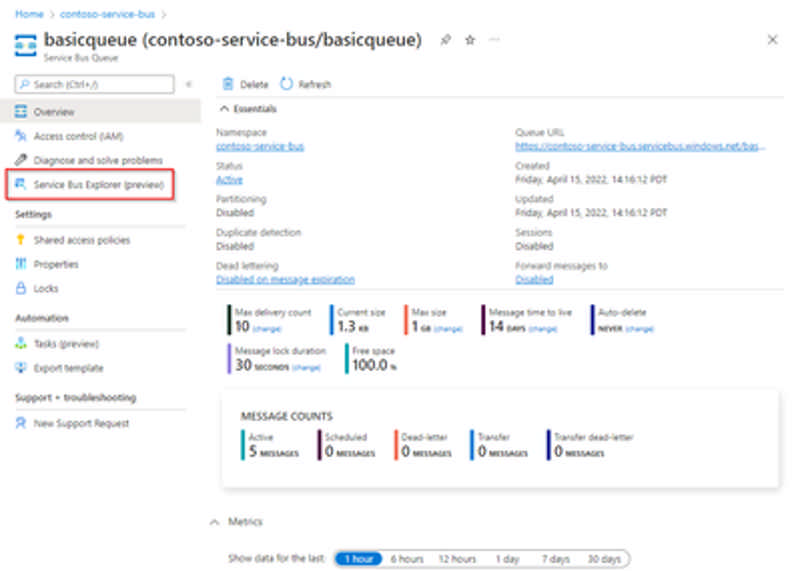

Thank you for your support during the preview of the completely revamped Service Bus Explorer tool in the Azure portal; the tool is now generally available. Two types of operations can be performed against Azure Service Bus:

- Management operations: Creating, updating, and deleting Service Bus namespaces, queues, topics, and subscriptions.

- Data operations: Sending messages to and receiving messages from queues, topics, and subscriptions.

While we have offered a portal-based Service Bus Explorer for data operations for a while now, you told us that the experience was still lacking compared to the community-managed Service Bus Explorer OSS tool.

We have released a new version of Service Bus Explorer that brings many new capabilities for working with your messages directly in the portal. For example, you can now send, receive, and peek messages on queues, topics, and subscriptions, including their dead-letter sub-queues. The tool also lets you perform operations such as completing, re-sending, and deferring messages, either on a single message or on multiple messages at once.
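The difference between peeking and settling messages can be illustrated with a toy in-memory queue. This is a hypothetical model for explanation only; it is not the Service Bus SDK or the portal's implementation, and it omits locks, sessions, and time-to-live. Peeking reads messages without removing them, completing removes a message, deferring sets it aside until it is fetched by sequence number, and dead-lettering moves it to the dead-letter sub-queue.

```python
from collections import OrderedDict


class ToyQueue:
    """Hypothetical in-memory stand-in for a queue and its dead-letter sub-queue."""

    def __init__(self):
        self.active = OrderedDict()       # sequence number -> message body
        self.deferred = {}                # set aside, fetched by sequence number
        self.dead_letter = OrderedDict()  # the dead-letter sub-queue
        self._next_seq = 1

    def send(self, body):
        self.active[self._next_seq] = body
        self._next_seq += 1

    def peek(self, count=1):
        # Read messages without removing or locking them
        return list(self.active.items())[:count]

    def complete(self, seq):
        # Settle a received message so it leaves the queue
        del self.active[seq]

    def defer(self, seq):
        # Move out of the normal receive path until requested by sequence number
        self.deferred[seq] = self.active.pop(seq)

    def receive_deferred(self, seq):
        return self.deferred.pop(seq)

    def dead_letter_message(self, seq):
        self.dead_letter[seq] = self.active.pop(seq)


q = ToyQueue()
for body in ("order-1", "order-2", "order-3"):
    q.send(body)

print(q.peek(2))  # peeking leaves all three messages in place
q.complete(1)
q.defer(2)
q.dead_letter_message(3)
print(q.receive_deferred(2), dict(q.dead_letter))
```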

To access the tool:

- Navigate to the namespace.

- Select the specific queue or topic that you want to perform your data operations on.

- Open "Service Bus Explorer (preview)" from the left menu navigation pane. When working with a topic, it's also possible to select a specific subscription within.

For full information about the tool and step-by-step guidance for the different operations, see the documentation.

Source: Generally available: Service Bus Explorer for the Azure portal

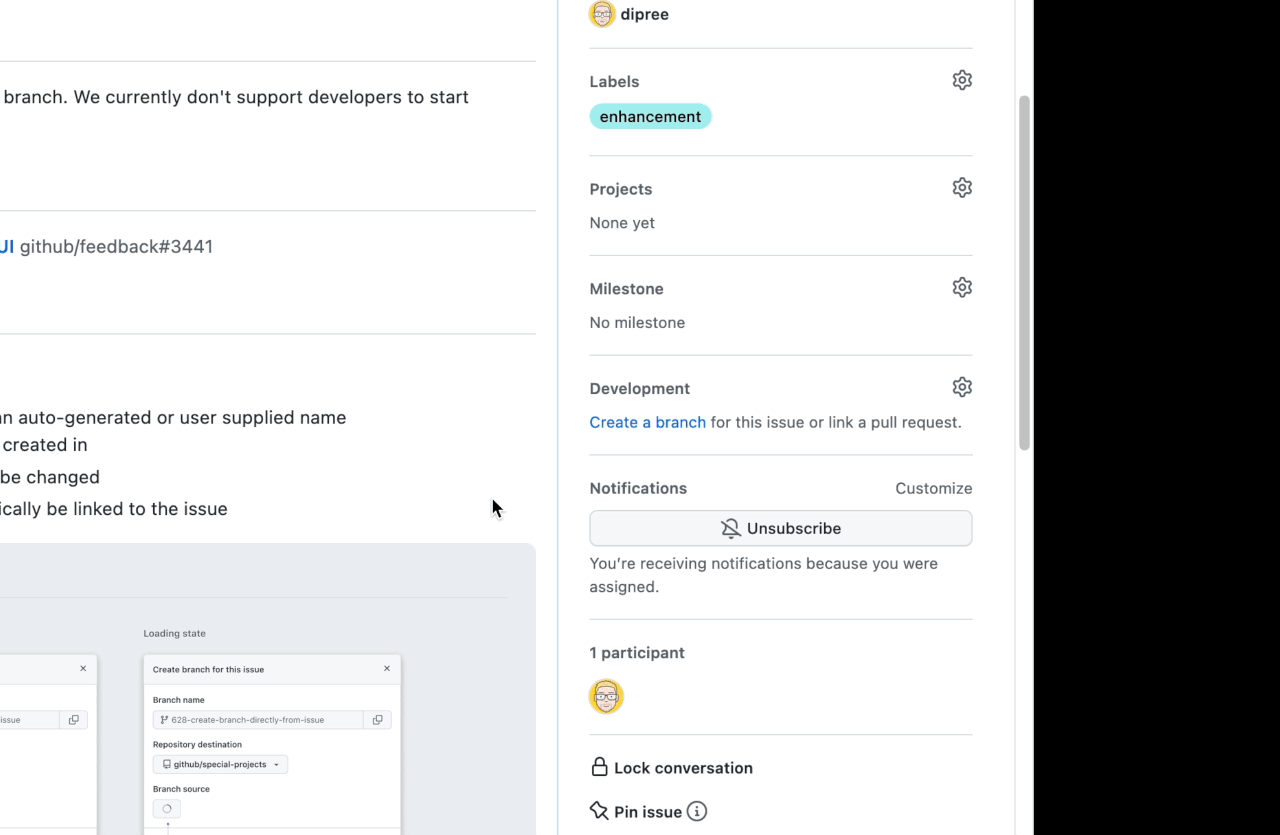

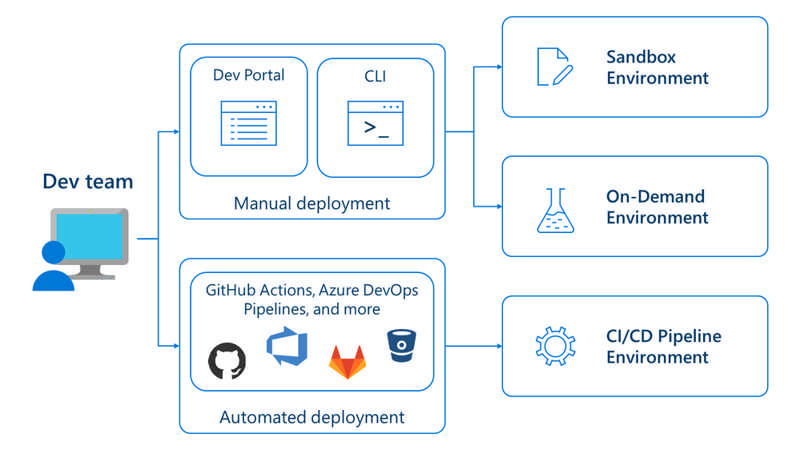

Azure Deployment Environments has entered public preview.

Azure Deployment Environments help dev teams create and manage all types of environments throughout the application lifecycle with features like:

- On-demand environments enable developers to spin up an environment with each feature branch, enabling higher-quality code reviews and ensuring devs can view and test their changes in a prod-like environment.

- Sandbox environments can be used as greenfield environments for experimentation and research.

- CI/CD pipeline environments integrate with your CI/CD deployment pipeline to automatically create dev, test (regression, load, integration), staging, and production environments at specified points in the development lifecycle.

- Environment types enable dev infra and IT teams to create preconfigured mappings that automatically apply the right subscriptions, permissions, and identities to environments deployed by developers based on their current stage of development.

- Template catalogues, housed in a code repo, can be accessed and edited by developers and IT admins to propagate best practices while maintaining security and governance.

For more information about Azure Deployment Environments, visit the announcement blog.

Source: Public preview: Microsoft Azure Deployment Environments

We’re announcing the general availability of the intent feature in Azure proximity placement groups. Proximity placement groups are a popular logical construct among customers running highly latency-sensitive workloads such as SAP and HPC. They are used to physically locate Azure compute resources close to each other to provide the best possible latency.

With the addition of the new optional parameter, intent, you can now specify the VM sizes intended to be part of a proximity placement group when it is created. An optional zone parameter can be used to specify where you want to create the proximity placement group. This capability allows the proximity placement group allocation scope (datacenter) to be optimally defined for the intended VM sizes, reducing deployment failures of compute resources due to capacity unavailability. The new intent feature can now be used across all regions and it is supported through CLI and PowerShell interfaces.

To learn more about the new proximity placement groups intent feature, refer to the documentation: Proximity placement groups - Azure Virtual Machines | Microsoft Learn.

Source: General availability: New Azure proximity placement groups feature

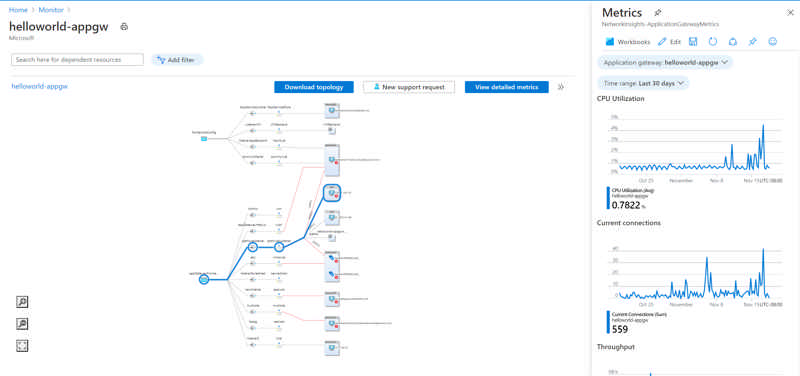

Public preview: Azure Resource Topology

Azure Resource Topology (ART) lets you visualize the resources in a network, acquire system context, understand state, and debug issues faster. It provides a visualized, connected experience for inventory management and monitoring.

This unified topology upgrades the network monitoring and management experience in Azure. Replacing the Network Watcher topology, it lets users draw a unified, dynamic topology across multiple subscriptions, regions, and resource groups (RGs) containing multiple resources.

For deep dives into your environment, ART lets users drill down from regions to VNETs to subnets, and open a resource view diagram for supported Azure resources. It also ties together the end-to-end monitoring and diagnostics story: you can run next hop directly from a VM selected in the topology after specifying the destination IP address.

Selecting a resource in the topology highlights the node and all other nodes/resources connected to it via edges. These edges represent connections among regions, for example through VNET peering or VNET gateways. The side pane shows extensive resource details and properties for the selected node or resource.
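The highlighting behavior described for ART amounts to looking up a node's direct neighbors in a graph. A minimal Python sketch, assuming connections are undirected and that only directly connected nodes are highlighted; the resource names and both helpers are hypothetical and unrelated to ART's actual implementation.

```python
from collections import defaultdict


def build_adjacency(edges):
    """Undirected adjacency map from (resource, resource) connection pairs."""
    graph = defaultdict(set)
    for a, b in edges:
        graph[a].add(b)
        graph[b].add(a)
    return graph


def highlighted(graph, selected):
    """The selected resource plus every resource directly connected to it."""
    return {selected} | graph.get(selected, set())


# Hypothetical topology: two peered VNETs, a gateway, and a subnet
edges = [
    ("vnet-east", "vnet-west"),  # VNET peering
    ("vnet-east", "gw-east"),    # VNET gateway connection
    ("vnet-west", "subnet-w1"),
]
graph = build_adjacency(edges)
print(sorted(highlighted(graph, "vnet-east")))
```

Using `graph.get` rather than indexing avoids silently adding an empty entry to the `defaultdict` when an unknown resource is selected.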

Features available in public preview:

- Multi-region and multi-subscription visualization

- Drilldown from Azure region to resources inside subnet

- Side-panel showing resource properties and metrics

- Highlighting of nodes connected to a selected resource

- Automatic grouping of resources of the same type

- Side-by-side visualization of two regions/VNETs/Subnets

- Search based on resource name

- Scope selector based on subscription and resource-type filtering

- Health status of resources using resource health (RHC) status

- Diagnostics tool next hop integration.

- Resource view diagram for all supported resources

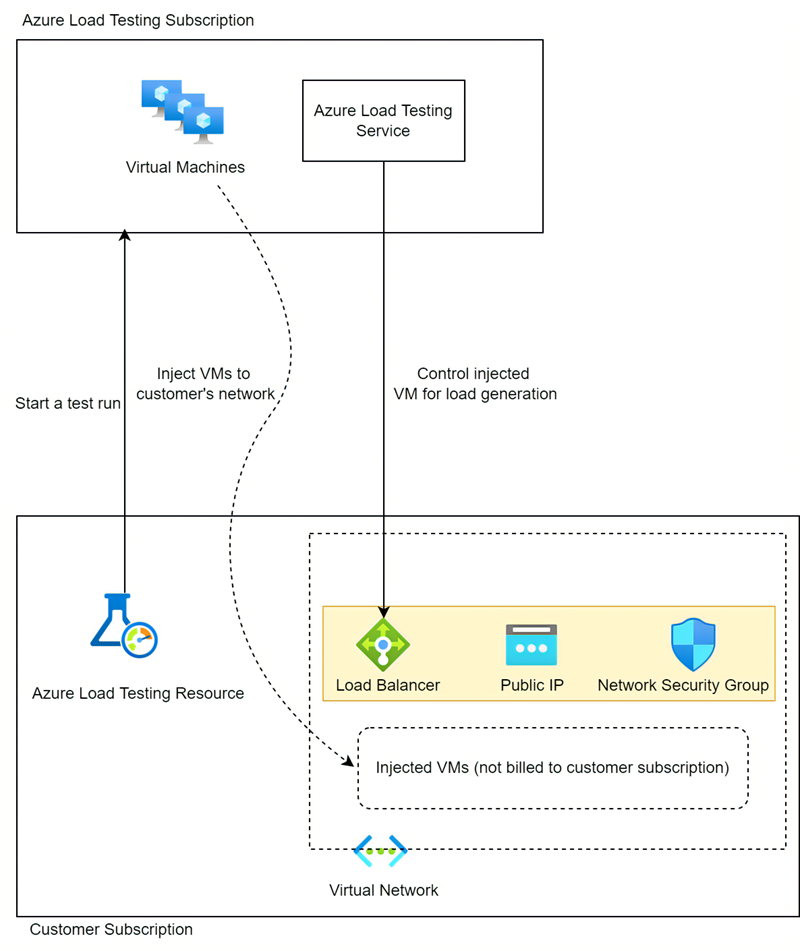

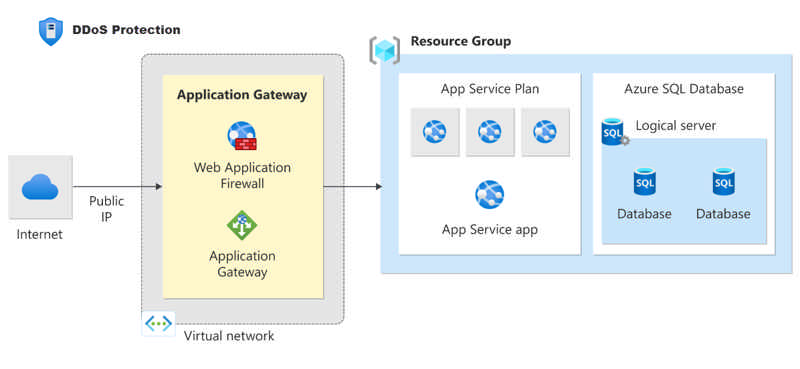

IP Protection is designed with SMBs in mind and delivers enterprise-grade, cost-effective DDoS protection.

Instead of enabling DDoS protection on a per-virtual-network basis, which covers all public IP resources associated with resources in those virtual networks, you now have the flexibility to enable DDoS protection on an individual public IP.

The existing standard SKU of Azure DDoS Protection will now be known as Network Protection.

IP Protection includes the same features as Network Protection, but Network Protection also includes the following value-added services: DDoS Rapid Response support, cost protection, integration with Azure Firewall Manager, and discounts on Azure Web Application Firewall.

Billing for IP Protection will be effective starting February 1, 2023.

Source: Public preview: IP Protection SKU for Azure DDoS Protection

Classic resource providers that use Azure Service Manager (classic deployment model) will be retired on 31 August 2024.

Required action

Your access to the classic resource provider’s endpoint will be revoked and the resource provider will be disabled on 31 August 2024.

- To take advantage of advanced capabilities offered by Azure Resource Manager and avoid service disruptions, migrate your resources that use Classic (ASM) to Azure Resource Manager by 31 August 2024.

- Additionally, to manage service expectations for your classic resource provider, notify your end customers and coordinate with them to complete migration before the retirement date of 31 August 2024.

Source: Azure classic resource providers will be retired on 31 August 2024

Azure Daily 2022 - Oct 10, 2022

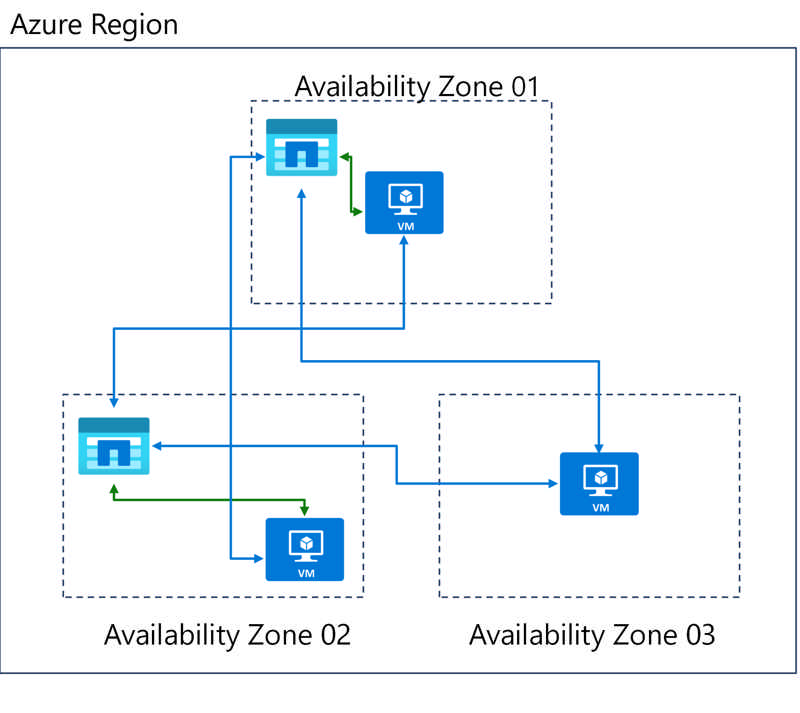

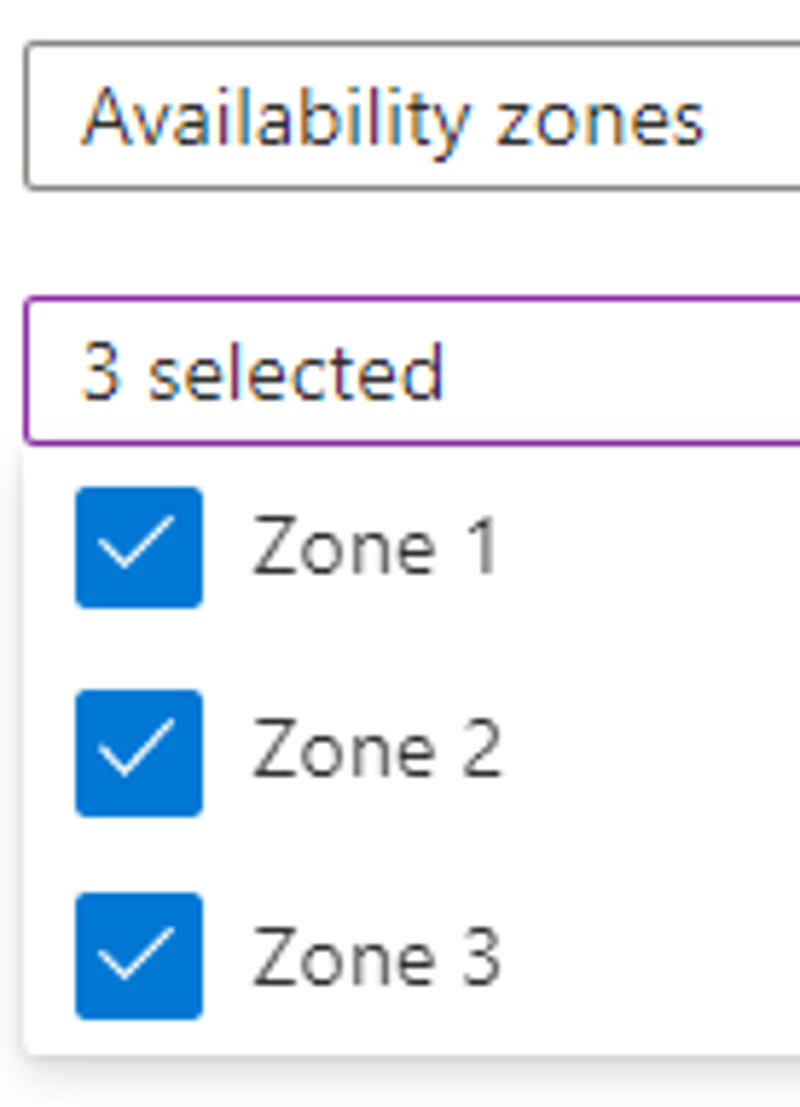

Microsoft is pleased to announce that you can now automatically distribute your session hosts across multiple availability zones. This enables you to take full advantage of the built-in Azure resiliency options from within the same deployment process.

This has been a feature request from many of our customers. The host pool deployment process has been improved so that it now supports deploying into up to three availability zones in Azure regions that support them.
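One way to picture distributing session hosts across zones is round-robin assignment. The announcement doesn't specify the placement algorithm, so treat round-robin as an assumption; the host names and the `assign_zones` helper are hypothetical.

```python
from collections import Counter


def assign_zones(host_count: int, zones=("1", "2", "3")) -> dict:
    """Assign session hosts to availability zones in round-robin order."""
    # The host pool deployment supports up to three availability zones
    if not 1 <= len(zones) <= 3:
        raise ValueError("deployment supports one to three availability zones")
    return {f"avd-host-{i}": zones[i % len(zones)] for i in range(host_count)}


placement = assign_zones(7)
print(Counter(placement.values()))
```

With seven hosts, zone 1 receives one extra host, so the loss of any single zone takes out at most three of the seven session hosts.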

Read more at Azure Daily 2022

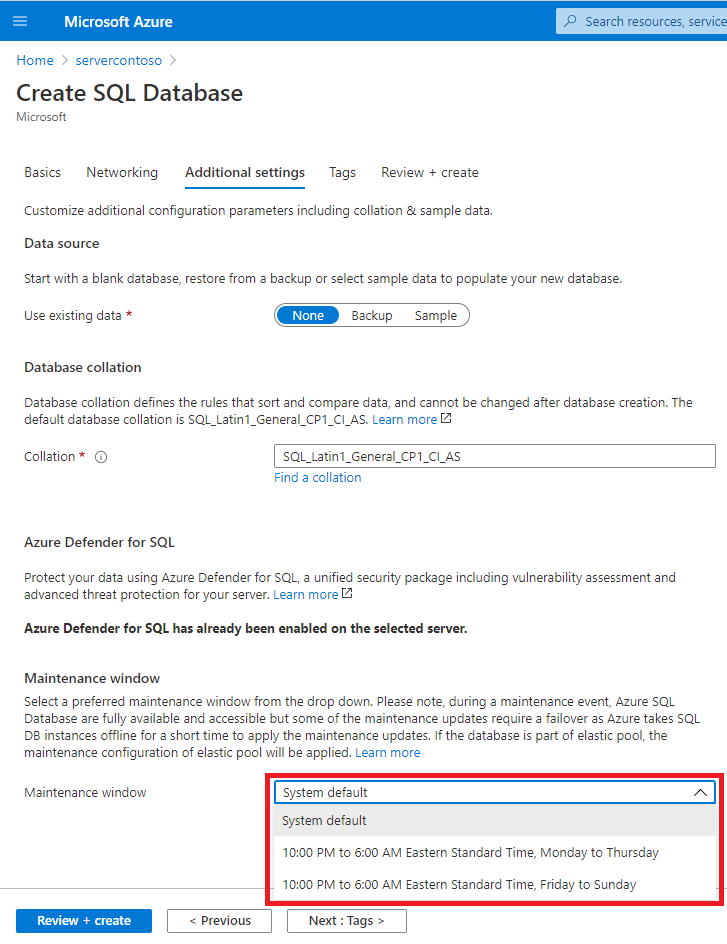

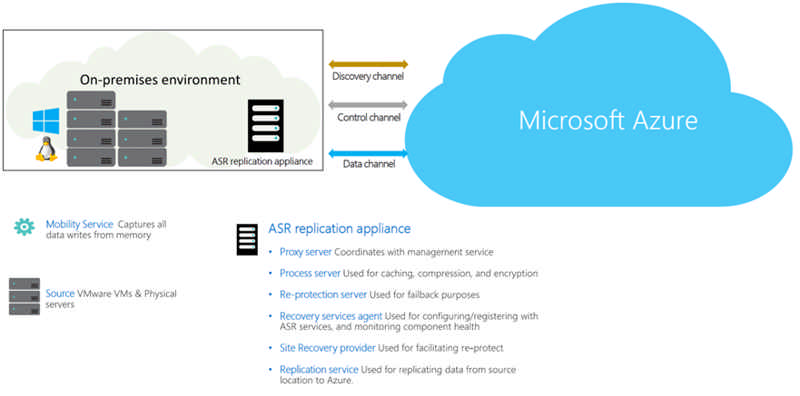

Today Microsoft officially announced the general availability of a simpler, more reliable, and modernized way to protect your VMware virtual machines with Azure Site Recovery so you can recover quickly from disasters. We are now offering these enhancements:

- Stateless ASR replication appliance

- Automatic upgrades for ASR replication appliance and mobility agent

- Easier scale management

- High availability for appliances

Learn more about the modernized architecture and move to the modernized experience now.

Source: General availability: Simplified disaster recovery for VMware machines using Azure Site Recovery

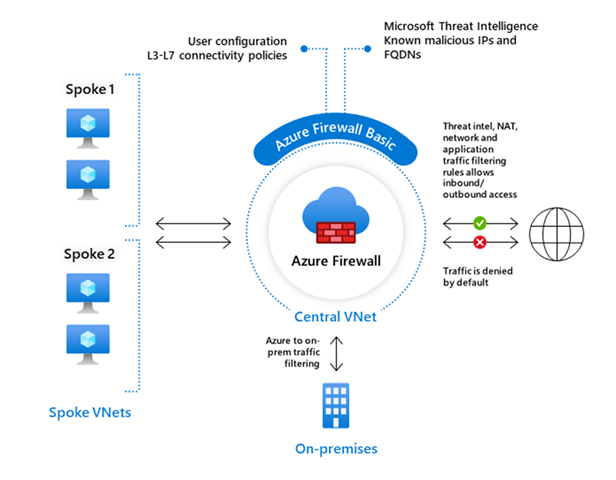

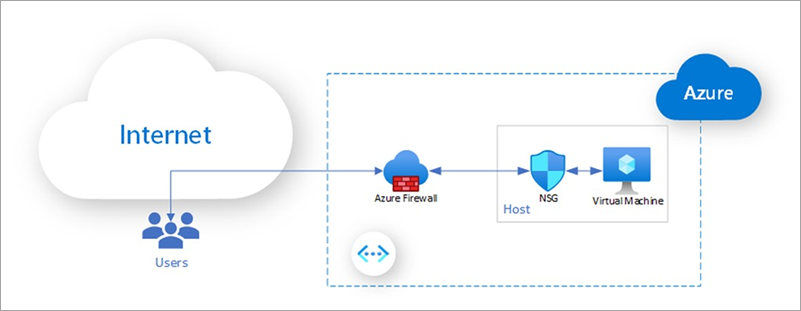

Azure Firewall Basic is a new SKU for Azure Firewall designed for small and medium-sized businesses.

The main benefits are:

Comprehensive, cloud-native network firewall security:

- Network and application traffic filtering

- Threat intelligence to alert on malicious traffic

- Built-in high availability

- Seamless integration with other Azure security services

Simple to set up and easy to use:

- Set up in just a few minutes

- Automate deployment (deploy as code)

- Zero maintenance with automatic updates

- Central management via Azure Firewall Manager

Cost-effective:

- Designed to deliver essential, cost-effective protection of your resources within your virtual network

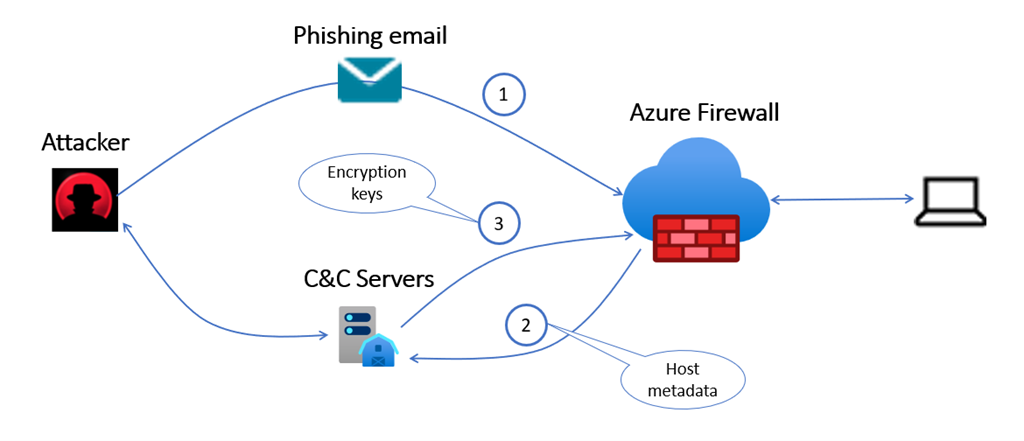

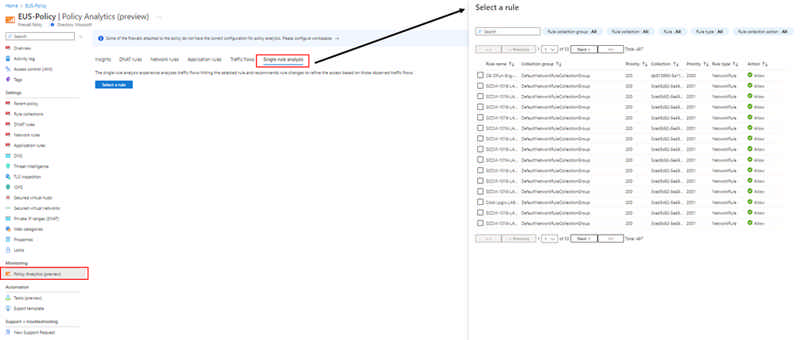

Policy analytics for Azure Firewall, now in public preview, provides enhanced visibility into traffic flowing through Azure Firewall, enabling the optimization of your firewall configuration without impacting your application performance.

As application migration to the cloud accelerates, it’s common to update Azure Firewall configuration daily (sometimes hourly) to meet the growing application needs and respond to a changing threat landscape. Frequently, changes are managed by multiple administrators spread across geographies.

Over time, the firewall configuration can grow suboptimally, impacting firewall performance and security. It’s a challenging task for any IT team to optimize firewall rules without impacting applications and causing serious downtime. Policy analytics helps address these challenges by providing visibility into traffic flowing through the firewall with features such as firewall flow logs, rule-to-flow match, rule hit rate, and single rule analysis. IT admins can refine Azure Firewall rules in a few simple steps through the Azure portal.
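The rule hit rate idea can be sketched in a few lines of Python. This is a hypothetical illustration, not the policy analytics implementation: the log is simplified to one matched rule name per flow, and the rule names are made up. A rule with a zero hit rate is a candidate for review, not automatic deletion.

```python
from collections import Counter


def rule_hit_rates(flow_log, rule_names):
    """Fraction of logged flows matched by each rule (hypothetical log format).

    `flow_log` is an iterable of rule names, one per flow, as a stand-in for
    the rule-to-flow match data that policy analytics surfaces.
    """
    hits = Counter(flow_log)
    total = sum(hits.values())
    return {rule: (hits[rule] / total if total else 0.0) for rule in rule_names}


rules = ["allow-web", "allow-sql", "legacy-ftp"]
log = ["allow-web"] * 6 + ["allow-sql"] * 2
rates = rule_hit_rates(log, rules)
unused = [rule for rule, rate in rates.items() if rate == 0.0]
print(rates)
print(unused)
```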

Read the blog and Azure Firewall documentation to learn more.

Follow these instructions to enable policy analytics on your subscriptions.

In Azure App Service, you can easily create on-demand custom backups and automatic backups. You can restore these backups by overwriting an existing app or by restoring to a new app or slot.

Automatic backup and restore is now in preview for the Isolated pricing tier of App Service Environment V2 and V3.

For more information about Azure App Services backups and restore, visit: Back up an app - Azure App Service | Microsoft Docs

Source: Public preview: Automatic backup for App Service Environment V2 and V3

Generally available: Azure Functions .NET Framework support in the isolated worker model

You can now build production serverless apps with Azure Functions v4 in the isolated worker model using .NET Framework 4.8. This allows apps with .NET Framework dependencies to take advantage of the latest versions of the Azure Functions host.

If you are running .NET Framework apps on the v1 host, we recommend migrating to .NET 6 or .NET 7 on the v4 host. If your apps have .NET Framework dependencies, migrate to .NET Framework on the v4 host instead.

Apps built using this capability will follow the same patterns as any isolated .NET worker project in Functions, but they will specify .NET Framework 4.8 as the target framework. Please provide feedback through the Azure Functions .NET Worker GitHub repository.

Source: Generally available: Azure Functions .NET Framework support in the isolated worker model

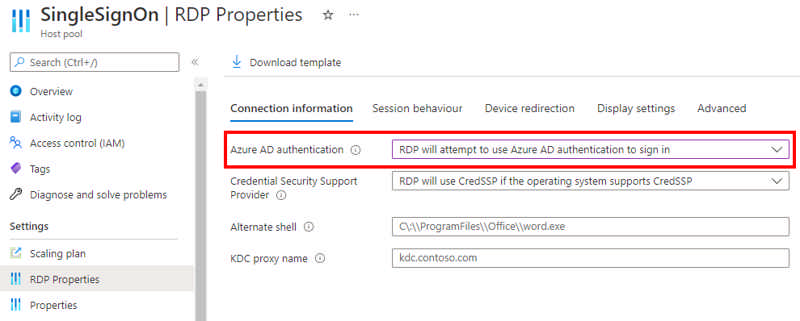

Today we’re announcing the public preview of an Azure AD-based single sign-on experience and support for passwordless authentication using Windows Hello and security devices (such as FIDO2 keys). With this preview, you can now:

- Enable a single sign-on experience to Azure AD-joined and Hybrid Azure AD-joined session hosts when using the Windows and web clients

- Use passwordless authentication to sign in to the host using Azure AD

- Use passwordless authentication inside the session when using the Windows client

- Use third-party Identity Providers (IdP) that integrate with Azure AD to sign in to the host

Read more at Azure Daily 2022

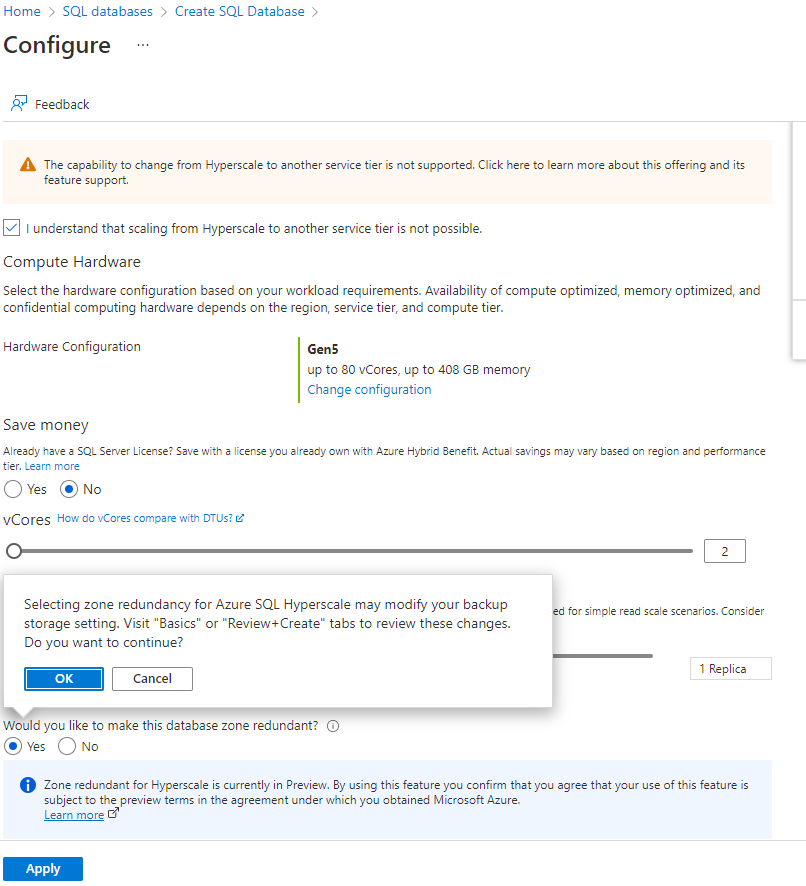

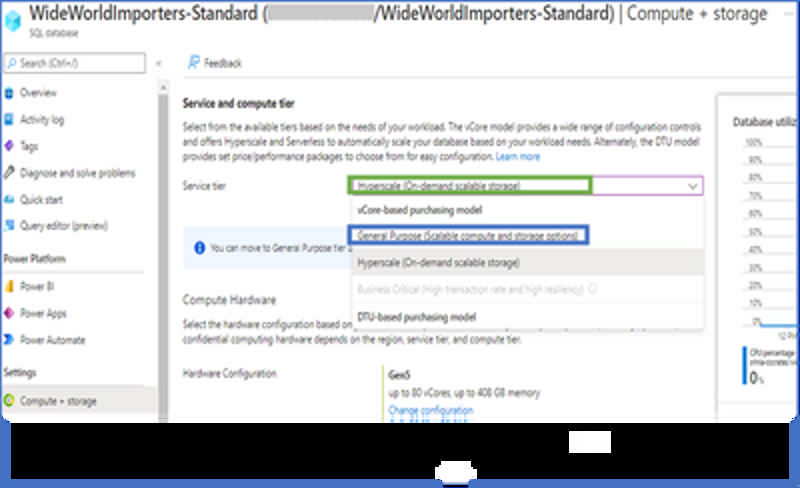

We are happy to announce the general availability (GA) of reverse migration of an Azure SQL Database from the Hyperscale tier to the General Purpose tier. Previously, migration into the Hyperscale tier was one-way, with no easy way to move back to a non-Hyperscale tier. Reverse migration to the General Purpose service tier now allows customers who have recently migrated an existing Azure SQL database to the Hyperscale service tier to move back, should Hyperscale not meet their needs. This provides additional mobility for their SQL Database data. Once in the General Purpose tier, they can remain on that tier or move their database to other SQL Database tiers, including back to the Hyperscale tier.

Read more at Azure Daily 2022

Public preview: Customer initiated storage account conversion

Today Azure Storage is announcing the public preview of a self-service option to convert storage accounts from non-zonal redundancy (LRS/GRS) to zonal redundancy (ZRS/GZRS). You can initiate the conversion of storage accounts in the Azure portal without having to create a support ticket.

Source: Public preview: Customer initiated storage account conversion

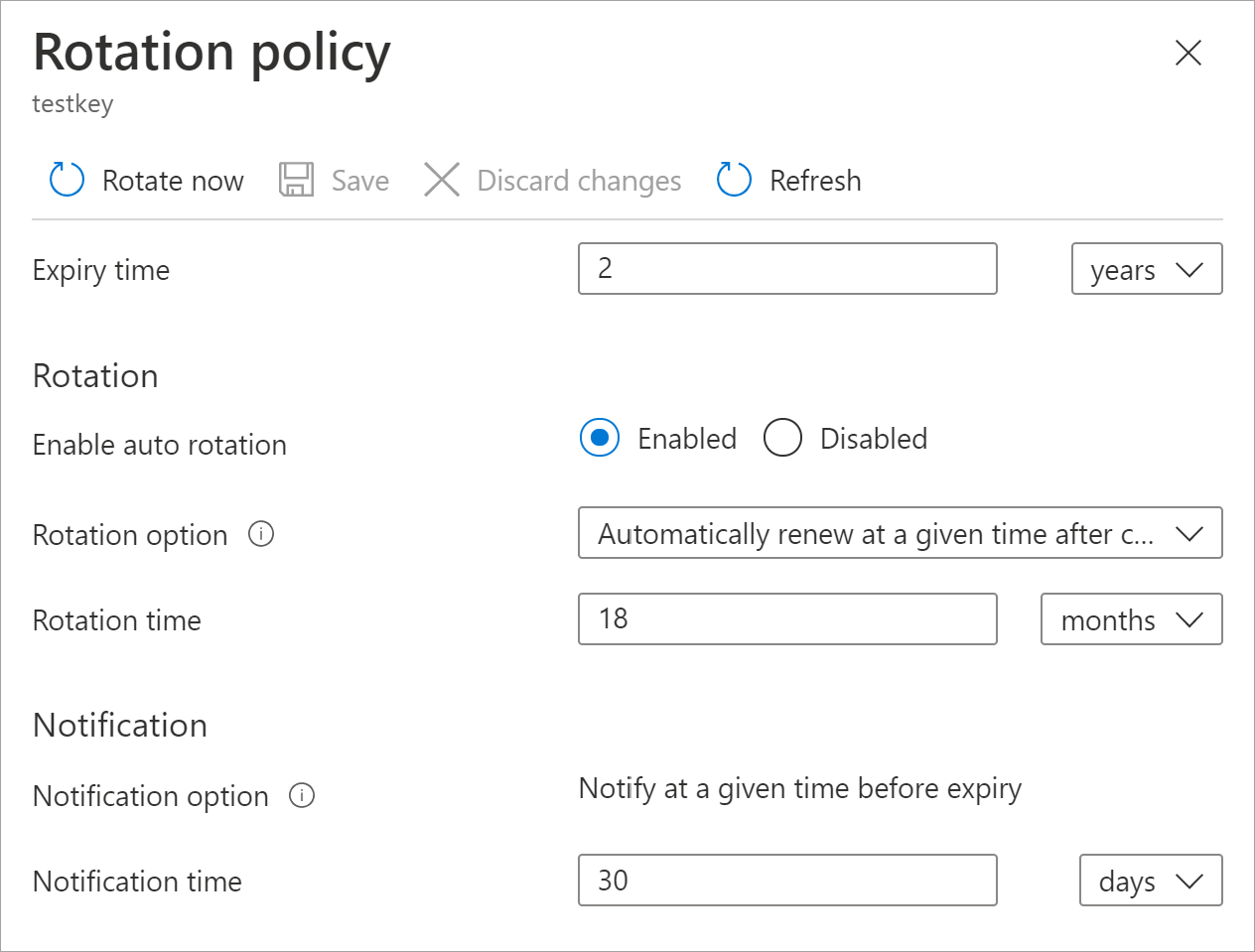

Encryption scopes let you provision multiple encryption keys in a storage account with a hierarchical namespace. You can apply an encryption scope either at the container level (as the default scope for blobs in that container) or at the blob level. The preview is available for the REST, HDFS, NFSv3, and SFTP protocols in Azure Blob Storage / Data Lake Storage Gen2 accounts.

The key that protects an encryption scope may be either a Microsoft-managed key or a customer-managed key in Azure Key Vault. You can choose to enable automatic rotation of a customer-managed key that protects an encryption scope. When you generate a new version of the key in your Key Vault, Azure Storage will automatically update the version of the key that is protecting the encryption scope, within a day.
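The container-versus-blob choice described above can be thought of as a simple precedence rule. The sketch below is a conceptual Python model, not the Azure Storage SDK. `resolve_encryption_scope` and its parameters are hypothetical, and falling back to an account-level default when neither the blob nor the container names a scope is an assumption made for illustration.

```python
from typing import Optional


def resolve_encryption_scope(
    blob_scope: Optional[str],
    container_default_scope: Optional[str],
    account_default_scope: str = "account-default",  # hypothetical fallback name
) -> str:
    """Return the encryption scope that would protect a blob in this model.

    Assumed precedence: an explicit blob-level scope wins, then the
    container's default scope, then an account-wide default.
    """
    if blob_scope:
        return blob_scope
    if container_default_scope:
        return container_default_scope
    return account_default_scope


# A blob written without a scope inherits the container default.
print(resolve_encryption_scope(None, "finance-cmk"))      # finance-cmk
# A blob-level scope overrides the container default.
print(resolve_encryption_scope("hr-cmk", "finance-cmk"))  # hr-cmk
```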

Source: Public preview: Encryption scopes on hierarchical namespace enabled storage accounts

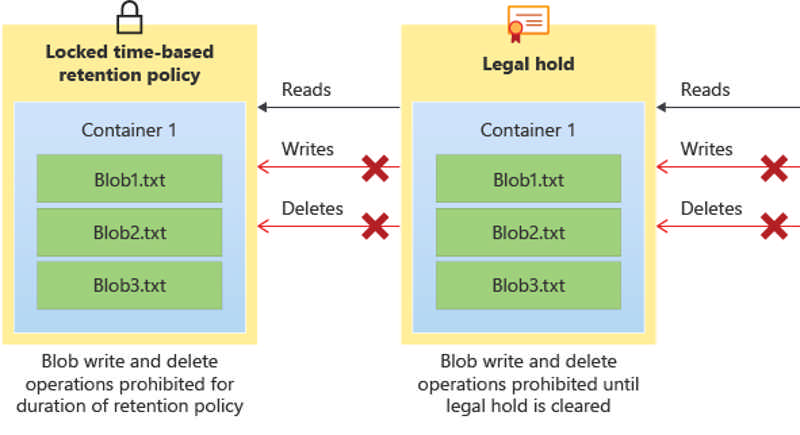

Immutable storage for Azure Data Lake Storage is now generally available. Immutable storage provides the capability to store data in a write once, read many (WORM) state. Once data is written, the data becomes non-erasable and non-modifiable and you can set a retention period so that files can't be deleted until after that period has elapsed. Additionally, legal holds can be placed on data to make that data non-erasable and non-modifiable until the hold is removed.

This release includes the new “allow append writes for block and append blobs” flag, which allows users to set up immutable policies for block and append blobs to keep already written data in a WORM state and continue to add new data.
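The toy Python model below shows how time-based retention, legal holds, and the append flag interact. It is not an Azure SDK. `WormBlob` and its methods are hypothetical, and measuring the retention period from the blob's creation time is an assumption of the sketch.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class WormBlob:
    """Toy model of a blob under a WORM (write once, read many) policy."""

    created_at: datetime
    retention_days: int = 0       # time-based retention period
    legal_hold: bool = False      # a legal hold blocks deletion until removed
    allow_appends: bool = False   # models the "allow append writes" flag
    data: bytes = b""

    def retention_expires_at(self) -> datetime:
        return self.created_at + timedelta(days=self.retention_days)

    def can_delete(self, now: datetime) -> bool:
        # Deletion requires the retention period to have elapsed AND no legal hold.
        return now >= self.retention_expires_at() and not self.legal_hold

    def overwrite(self, new_data: bytes) -> None:
        # Already-written bytes are never modified in this model.
        raise PermissionError("WORM: existing data cannot be modified")

    def append(self, chunk: bytes) -> None:
        # New data may be added only when the append flag is set;
        # existing bytes stay untouched either way.
        if not self.allow_appends:
            raise PermissionError("WORM: appends are not allowed by this policy")
        self.data += chunk


start = datetime(2022, 9, 1, tzinfo=timezone.utc)
log = WormBlob(created_at=start, retention_days=30, allow_appends=True, data=b"day1\n")
log.append(b"day2\n")
print(log.data)                                    # b'day1\nday2\n'
print(log.can_delete(start + timedelta(days=10)))  # False
print(log.can_delete(start + timedelta(days=31)))  # True
```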

Immutable storage is not supported on accounts that use the NFS 3.0 protocol or SFTP support.

Source: Generally available: Immutable storage for Azure Data Lake Storage

Immutable storage for Blob Storage on containers (which has been generally available since September 2018) now includes a new append capability. This capability, titled “Allow Protected Appends for Block and Append Blobs,” allows you to set up immutable policies for block and append blobs to keep already written data in a WORM state and continue to add new data. This capability is available for both legal holds and time-based retention policies.

This capability is supported in all public regions and it is available to new and existing accounts. To learn more, read the documentation on immutable storage.

Source: General availability (update): Improved Append Capability on Immutable Storage for Blob Storage

Azure Database for PostgreSQL – Flexible Server performs automatic snapshot backups and allows you to restore to any point in time within the retention period. The overall restore and recovery can take several minutes, depending on how much recovery must be performed from the previous backup.

Some use cases, such as testing, development, and verifying data in backups, don’t require the latest data but do need a server spun up quickly. For these, Azure Database for PostgreSQL – Flexible Server now supports fast restore. The feature lists all available automatic backups and lets you choose a specific backup to restore. It then provisions a new server and restores the backup from the snapshot. Because no recovery is involved, fast restore provides a fast and predictable restore experience.
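Because fast restore starts from a chosen snapshot rather than an arbitrary point in time, the restored server reflects the moment that backup was taken. If you script the choice, a helper like the hypothetical `pick_backup` below can select the latest snapshot taken no later than a given time. This is a minimal Python sketch: how you obtain the list of backup timestamps (portal, CLI, or API) is left out, and the daily 02:00 backup schedule is invented for the example.

```python
from datetime import datetime
from typing import List, Optional


def pick_backup(backups: List[datetime], not_after: datetime) -> Optional[datetime]:
    """Return the most recent backup taken at or before `not_after`, if any."""
    candidates = [b for b in backups if b <= not_after]
    return max(candidates) if candidates else None


# Hypothetical backup timestamps.
backups = [datetime(2022, 9, 5, 2), datetime(2022, 9, 6, 2), datetime(2022, 9, 7, 2)]
print(pick_backup(backups, datetime(2022, 9, 6, 12)))  # 2022-09-06 02:00:00
```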

Source: Generally available: Fast restore for Azure Database for PostgreSQL – Flexible Server

In less than two years, Bicep’s VS Code extension has grown from zero users to more than 15 thousand a month. In addition to the Bicep extension’s success, millions of resources are now deployed with Bicep files via Azure CLI and Azure PowerShell. Our incredible community has not only shaped the suite of Bicep features we know and love today, but they also made it abundantly clear how important Visual Studio was to their daily workflow. We heard you, no more switching back and forth between editors!

New operators on regional WAF custom rules:

Azure regional Web Application Firewall (WAF) with Application Gateway now supports creating custom rules using the operators "Any" and "GreaterThanOrEqual". Custom rules allow you to create your own rules to customize how each request is evaluated as it passes through the WAF engine.

To learn more about creating custom rules, please visit the regional WAF documentation.
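The small Python model below illustrates what the two new operators express by evaluating a single match condition. It does not reproduce the WAF engine or its rule schema. The numeric comparison for "GreaterThanOrEqual", the "Any" behavior, and the Content-Length threshold are assumptions for illustration, so check the regional WAF documentation for the exact semantics.

```python
from typing import List


def condition_matches(operator: str, value: str, match_values: List[str]) -> bool:
    """Illustrative evaluation of two custom-rule operators.

    Assumptions for this sketch: "Any" matches every request without needing
    match values, and "GreaterThanOrEqual" compares the inspected value
    numerically against each match value.
    """
    if operator == "Any":
        return True
    if operator == "GreaterThanOrEqual":
        try:
            number = float(value)
        except ValueError:
            return False  # non-numeric input never matches in this model
        return any(number >= float(m) for m in match_values)
    raise ValueError(f"operator not modeled here: {operator}")


# Example: flag requests whose (hypothetical) Content-Length is >= 1048576 bytes.
print(condition_matches("GreaterThanOrEqual", "2000000", ["1048576"]))  # True
print(condition_matches("GreaterThanOrEqual", "512", ["1048576"]))      # False
print(condition_matches("Any", "", []))                                 # True
```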

Geo filtering using socket address on global WAF:

Azure global Web Application Firewall (WAF) with Azure Front Door now supports custom geo-match filtering rules using socket addresses. Filtering by socket address allows you to restrict access to your web application by country/region using the source IP that the WAF sees. If your user is behind a proxy, socket address is often the proxy server address.

To learn more about geo filtering, please visit the global WAF documentation.
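The difference between filtering on the socket address and filtering on a forwarded client address can be shown with a small Python model. It is not Front Door's implementation. The geo table is hypothetical (it uses RFC 5737 documentation ranges), and treating the first `X-Forwarded-For` entry as the original client is an assumption for illustration.

```python
import ipaddress
from typing import Dict, Optional

# Hypothetical geo table built from RFC 5737 documentation ranges.
GEO_TABLE: Dict[str, str] = {
    "192.0.2.0/24": "US",
    "198.51.100.0/24": "DE",
}


def country_of(ip: str) -> Optional[str]:
    addr = ipaddress.ip_address(ip)
    for prefix, country in GEO_TABLE.items():
        if addr in ipaddress.ip_network(prefix):
            return country
    return None


def socket_address(peer_ip: str) -> str:
    # The socket address is the IP of the TCP connection the WAF receives,
    # regardless of any forwarding headers.
    return peer_ip


def forwarded_client_address(peer_ip: str, headers: Dict[str, str]) -> str:
    # Assumption: a proxy records the original client as the first entry of
    # an X-Forwarded-For header; fall back to the peer IP if it is absent.
    xff = headers.get("X-Forwarded-For")
    return xff.split(",")[0].strip() if xff else peer_ip


# A user in "DE" reaches the app through a proxy located in "US".
peer, headers = "192.0.2.10", {"X-Forwarded-For": "198.51.100.7"}
print(country_of(socket_address(peer)))                     # US
print(country_of(forwarded_client_address(peer, headers)))  # DE
```

A geo-match rule on the socket address would therefore treat this user as coming from the proxy's country.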

Source: General availability: Improvements to Azure Web Application Firewall (WAF) custom rules

Azure Media Services is announcing the general availability of low-latency live streaming (LL-HLS). This offers glass-to-glass latency as low as 4 seconds with any player that supports Apple's low-latency HLS (LL-HLS) specification. With latency in the 4-7 second range, you can build a variety of interactive applications that let you engage seamlessly with your audiences at scale.

What kinds of applications can you build with low-latency live streaming?

Low-latency support can enable you to stream a variety of interactive scenarios including:

- Retail live shopping.

- Fitness and educational instruction classes.

- Social streaming and user generated live where audiences can chat and react with emojis.

What are the key features of low-latency live streaming in Media Services?

- Latencies in the 4-7 second range (upper end latency can vary by network conditions and geography).

- Automatic live transcription in over 50 languages.

- Dynamic encryption and digital rights management (DRM) support for PlayReady, Widevine, and FairPlay.

- Player friendly: works natively with Apple devices (macOS 12 and iOS 15 and above) with no additional player work needed. Playback has been evaluated with the latest versions of HLS.js, Video.js, Shaka, and more.

- No additional costs over existing encoding live events.

- Major improvements in latency for DASH delivery when not using LL-HLS playback (LL-DASH support is not available at this time).

Source: General availability: Azure Media Services low-latency live streaming

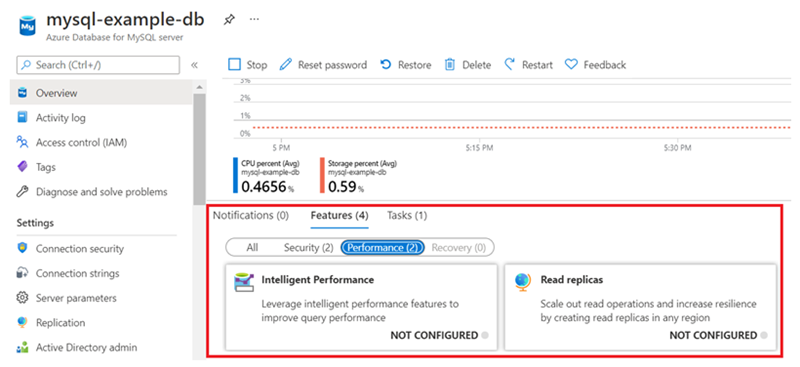

The read replica feature allows you to replicate data from an Azure Database for MySQL Flexible Server instance to read-only servers. You can replicate a source server to up to 10 replicas in total. This functionality now also supports high availability (HA)-enabled servers within the same region.
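A common way to use read replicas is to send writes to the source server and spread read-only queries across the replicas. The sketch below is an application-side router in Python, not part of any Azure SDK. The server names are hypothetical, and the SELECT-prefix classification is deliberately naive. Replication to replicas is asynchronous, so reads routed there may lag slightly behind the source.

```python
import itertools
from typing import List

MAX_REPLICAS = 10  # replica limit quoted in the announcement


class ReadWriteRouter:
    """Send writes to the source server and round-robin reads across replicas."""

    def __init__(self, source: str, replicas: List[str]) -> None:
        if len(replicas) > MAX_REPLICAS:
            raise ValueError(f"at most {MAX_REPLICAS} replicas are supported")
        self.source = source
        # Cycle through replicas; fall back to the source if there are none.
        self._reads = itertools.cycle(replicas or [source])

    def host_for(self, sql: str) -> str:
        # Naive classification: only statements starting with SELECT go to replicas.
        if sql.lstrip().upper().startswith("SELECT"):
            return next(self._reads)
        return self.source


router = ReadWriteRouter("source-server", ["replica-1", "replica-2"])
print(router.host_for("SELECT * FROM orders"))           # replica-1
print(router.host_for("select 1"))                       # replica-2
print(router.host_for("INSERT INTO orders VALUES (1)"))  # source-server
```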

Source: General availability: Read replica for Azure Database for MySQL - Flexible Server

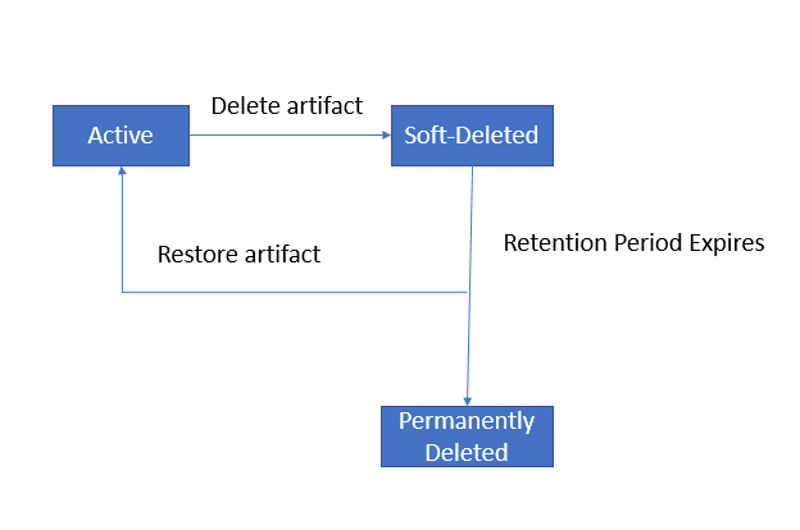

Restore artifacts you may have deleted by mistake using the Azure Container Registry (ACR) soft delete feature.

After the feature is enabled, a deleted artifact is kept in a recycle bin for a user-configurable number of days. You can restore the artifact while it is still in the recycle bin and build containers from it right away. Once an artifact reaches the configured retention limit, it is permanently purged from the Azure Container Registry.
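The recycle-bin behavior described above comes down to a retention-window check. Below is a toy Python model, not the ACR API or the Azure CLI. `RecycleBin`, the 7-day retention, and the artifact names are hypothetical.

```python
from datetime import datetime, timedelta
from typing import Dict


class RecycleBin:
    """Toy model of a soft-delete recycle bin with a configurable retention period."""

    def __init__(self, retention_days: int) -> None:
        self.retention = timedelta(days=retention_days)
        self._deleted: Dict[str, datetime] = {}  # artifact -> deletion time

    def soft_delete(self, artifact: str, now: datetime) -> None:
        self._deleted[artifact] = now

    def purge_expired(self, now: datetime) -> None:
        # Artifacts that have reached the retention limit are removed permanently.
        expired = [a for a, t in self._deleted.items() if now - t >= self.retention]
        for artifact in expired:
            del self._deleted[artifact]

    def restore(self, artifact: str, now: datetime) -> bool:
        """Return True if the artifact was still in the bin and is now restored."""
        self.purge_expired(now)
        return self._deleted.pop(artifact, None) is not None


bin_ = RecycleBin(retention_days=7)
t0 = datetime(2022, 9, 8)
bin_.soft_delete("myapp:v1", t0)
bin_.soft_delete("myapp:v2", t0)
print(bin_.restore("myapp:v1", t0 + timedelta(days=3)))  # True
print(bin_.restore("myapp:v2", t0 + timedelta(days=8)))  # False (already purged)
```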

Source: Public preview: Soft delete in Azure Container Registry

Updating the address space of peered virtual networks is now generally available. This feature allows you to update the address space of, or resize, a peered virtual network without removing the peering.

Users often want to resize or update the IP address space of their virtual networks as they grow their footprint in Azure. They can now resize their virtual networks to meet their needs without downtime and without having to remove the peering in advance.
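Before resizing, it can help to sanity-check the new address space locally. The sketch below uses Python's standard `ipaddress` module. `resize_is_valid` is a hypothetical helper, and its two rules (existing subnets must still fit, and peered address spaces must not overlap) are stated assumptions based on common Azure networking constraints rather than the exact validation Azure performs.

```python
import ipaddress
from typing import List


def resize_is_valid(new_space: List[str], subnets: List[str], peer_spaces: List[str]) -> bool:
    """Pre-check a proposed address space for a peered virtual network.

    Assumed rules for this sketch:
      1. every existing subnet must still fit inside the new address space;
      2. the new space must not overlap any peered network's address space.
    """
    new_nets = [ipaddress.ip_network(p) for p in new_space]
    peers = [ipaddress.ip_network(p) for p in peer_spaces]
    for s in subnets:
        subnet = ipaddress.ip_network(s)
        if not any(subnet.version == n.version and subnet.subnet_of(n) for n in new_nets):
            return False
    return not any(n.overlaps(p) for n in new_nets for p in peers)


# Current VNet: 10.0.0.0/16 with subnet 10.0.1.0/24, peered with 10.1.0.0/16.
print(resize_is_valid(["10.0.0.0/16", "10.2.0.0/16"], ["10.0.1.0/24"], ["10.1.0.0/16"]))  # True
print(resize_is_valid(["10.0.0.0/15"], ["10.0.1.0/24"], ["10.1.0.0/16"]))  # False (overlaps peer)
print(resize_is_valid(["10.0.2.0/24"], ["10.0.1.0/24"], ["10.1.0.0/16"]))  # False (subnet left out)
```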

Source: Generally available: Resizing of peered virtual networks

An Azure Kubernetes Service (AKS) cluster configured with API Server VNet Integration projects the API server endpoint directly into a delegated subnet in the VNet where AKS is deployed. This enables network communication between the API server and the cluster nodes without requiring a private link or tunnel. The API server is available behind an internal load balancer VIP in the delegated subnet, which the nodes are configured to use.

Source: Public preview: API Server VNET Integration for AKS private cluster

Generally available: Multi-instance GPU support in AKS

Multi-instance GPU (MIG) for the A100 GPU is now generally available in AKS. MIG provides a mechanism to partition a GPU into multiple instances for Kubernetes workloads on the same VM. You can now run your production workloads using the A100 GPU SKU and benefit from its higher performance.

Source: Generally available: Multi-instance GPU support in AKS

You can easily configure an Azure Database for PostgreSQL instance as output to your Stream Analytics job with zero code. This functionality is now generally available.

Source: General availability: Azure Database for PostgreSQL output in Stream Analytics

Virtual machines (VMs) running on Azure Dedicated Host have supported standard and premium disks as data disks. Azure Dedicated Host now also supports ultra disks.

Ultra disks are high-performance Azure disks that offer high throughput (up to 4,000 MBps per disk) and high IOPS (up to 160,000 IOPS per disk), depending on the disk size. If you run data-intensive, latency-sensitive IaaS workloads such as Oracle DB, MySQL DB, other critical databases, and gaming applications, you will benefit from using ultra disks as data disks on VMs hosted on Azure Dedicated Host.

Source: Generally available: Azure Dedicated Host support for Ultra Disk Storage

Save up to 24 percent on your usage of Azure Backup Storage by purchasing reserved capacity storage. The reservation discount will automatically apply to your matching Backup Storage and the process of purchasing a reservation is streamlined. Reservations are available on a one-year basis for up to a 16 percent discount or on a three-year basis for a 24 percent discount.
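As a quick worked example, the quoted discounts translate into the arithmetic below. The $1,000/month pay-as-you-go figure is hypothetical. The sketch treats the percentages as the maximum discounts quoted in the announcement and assumes all usage is covered by the reservation.

```python
# Maximum discount percentages quoted in the announcement, by term length in years.
RESERVATION_DISCOUNT_PERCENT = {1: 16, 3: 24}


def reserved_monthly_cost(pay_as_you_go_monthly: float, term_years: int) -> float:
    """Estimated monthly cost after the reservation discount for a given term."""
    pct = RESERVATION_DISCOUNT_PERCENT[term_years]
    return pay_as_you_go_monthly * (100 - pct) / 100


monthly = 1000.0  # hypothetical pay-as-you-go Backup Storage spend per month
for years in (1, 3):
    cost = reserved_monthly_cost(monthly, years)
    print(f"{years}-year term: {cost:.2f}/month, saves {monthly - cost:.2f}")
# 1-year term: 840.00/month, saves 160.00
# 3-year term: 760.00/month, saves 240.00
```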

Source: Generally available: Reserved capacity for Azure Backup Storage

We are announcing the general availability of standard network features for Azure NetApp Files volumes. Standard network features provide an enhanced, consistent virtual networking experience and an improved security posture for Azure NetApp Files.

You are now able to choose between standard or basic network features while creating a new Azure NetApp Files volume:

- Basic provides the current functionality, with limited scale and features.

- Standard provides the following new features for Azure NetApp Files volumes or delegated subnets:

  - Increased IP limits for VNets with Azure NetApp Files volumes, on par with VMs, so you can provision Azure NetApp Files volumes in your existing topologies or architectures. This eliminates the need to rearchitect network topologies to use Azure NetApp Files for workloads such as VDI, AVD, or AKS.

  - Enhanced network security with support for network security groups (NSGs) on the Azure NetApp Files delegated subnet.

  - Enhanced network control with support for user-defined routes (UDRs) to and from Azure NetApp Files delegated subnets. You can now direct traffic to and from Azure NetApp Files through network virtual appliances of your choice for traffic inspection.

  - Connectivity over an active/active VPN gateway setup for highly available connectivity to Azure NetApp Files from on-premises networks.

  - ExpressRoute FastPath connectivity to Azure NetApp Files. FastPath improves data path performance between on-premises networks and Azure virtual networks.

Standard network features are currently generally available in 20 regions and will roll out to other regions.

Source: General availability: Standard network features for Azure NetApp Files

Azure Daily 2022 - Sep 08, 2022

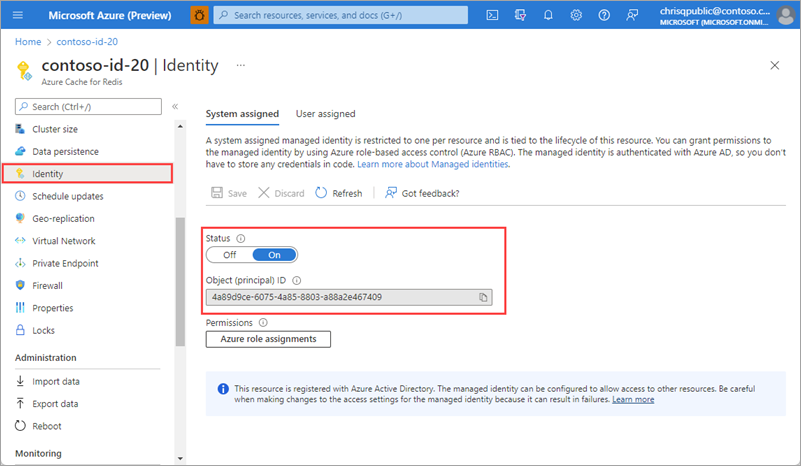

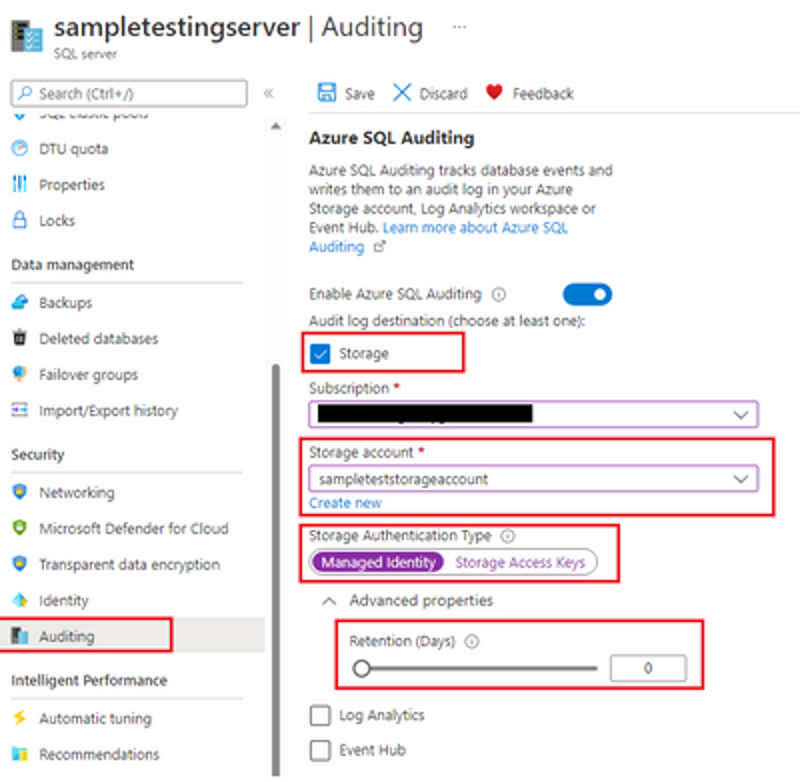

Auditing for Azure SQL Database now supports user managed identity (UMI). Audit logs can be written to a storage account using either of two authentication methods: managed identity or storage access keys. For managed identity, you can use either a system-assigned or a user-assigned managed identity. To learn more about UMI in Azure, see the managed identities documentation.

To write audit logs to a storage account, select Storage in the Auditing section, select the Azure storage account where the logs will be saved, and then choose the storage authentication type: managed identity or storage access keys.

Read more at Azure Daily 2022

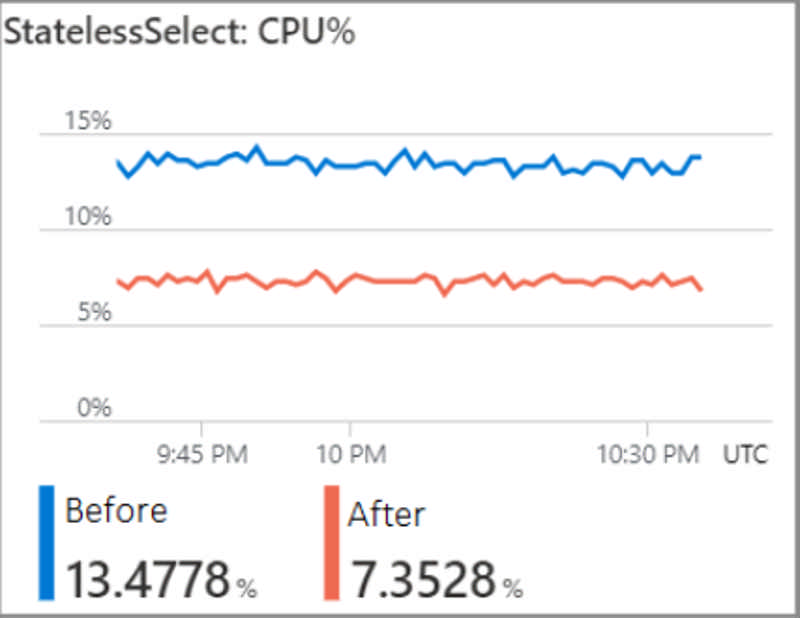

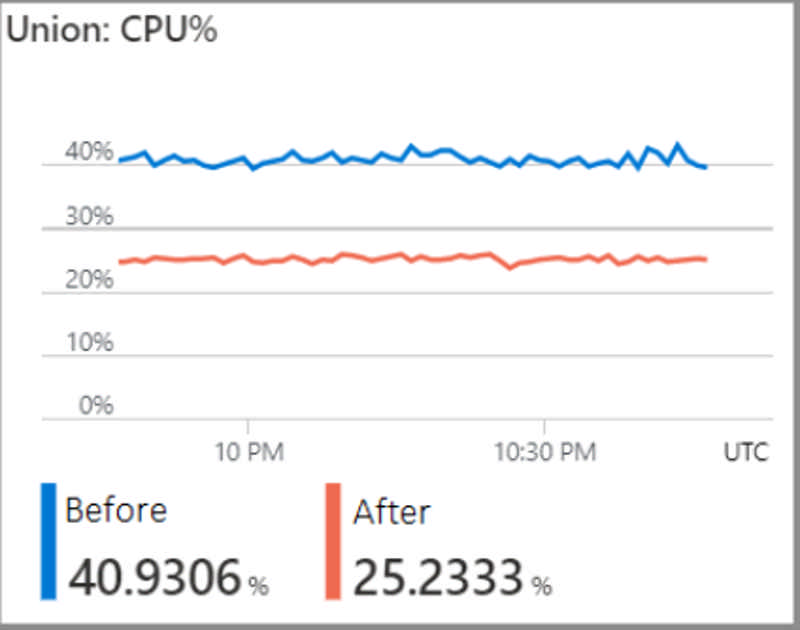

General availability: Up to 45% performance gains in stream processing

Stream Analytics now delivers up to a 45% performance boost for CPU-intensive jobs by default. This improvement lets you reduce the number of streaming units assigned to such jobs and save on costs without impacting performance.

Source: General availability: Up to 45% performance gains in stream processing

Resource instance rules enable secure connectivity to a storage account by restricting access to specific resources of select Azure services.

Azure Storage provides a layered security model that enables you to secure and control access to your storage account. You can configure network access rules to limit access to your storage account from select virtual networks or IP address ranges. Some Azure services operate on multi-tenant infrastructure, so resources of these services cannot be isolated to a specific virtual network.

With resource instance rules, you can now configure your storage account to only allow access from specific resource instances of such Azure services. For example, Azure Synapse offers analytic capabilities that cannot be deployed into a virtual network. If your Synapse workspace uses such capabilities, you can configure a resource instance rule on a secured storage account to only allow traffic from that Synapse workspace.

Resource instances must be in the same tenant as your storage account, but they may belong to any resource group or subscription in the tenant.
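The allow-list logic described above can be modeled in a few lines of Python. This is a conceptual sketch, not how Azure Storage evaluates rules internally. `ResourceInstanceRule` and `is_allowed` are hypothetical, the tenant ID and Synapse workspace resource ID are invented, and comparing resource IDs case-insensitively is an assumption.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class ResourceInstanceRule:
    tenant_id: str
    resource_id: str  # full Azure resource ID of the allowed instance


def is_allowed(rules: Iterable[ResourceInstanceRule], storage_tenant: str,
               caller_tenant: str, caller_resource_id: str) -> bool:
    # Only resource instances in the storage account's own tenant can be allowed.
    if caller_tenant != storage_tenant:
        return False
    # Assumption: resource IDs are compared case-insensitively.
    target = caller_resource_id.lower()
    return any(r.tenant_id == caller_tenant and r.resource_id.lower() == target
               for r in rules)


TENANT = "00000000-0000-0000-0000-000000000001"  # hypothetical tenant ID
SYNAPSE = ("/subscriptions/00000000-0000-0000-0000-00000000000a/resourceGroups/analytics"
           "/providers/Microsoft.Synapse/workspaces/contoso-ws")  # hypothetical workspace
rules = [ResourceInstanceRule(TENANT, SYNAPSE)]

print(is_allowed(rules, TENANT, TENANT, SYNAPSE))                              # True
print(is_allowed(rules, TENANT, TENANT, SYNAPSE.replace("contoso", "other")))  # False
```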

Source: Generally available: Resource instance rules for access to Azure Storage

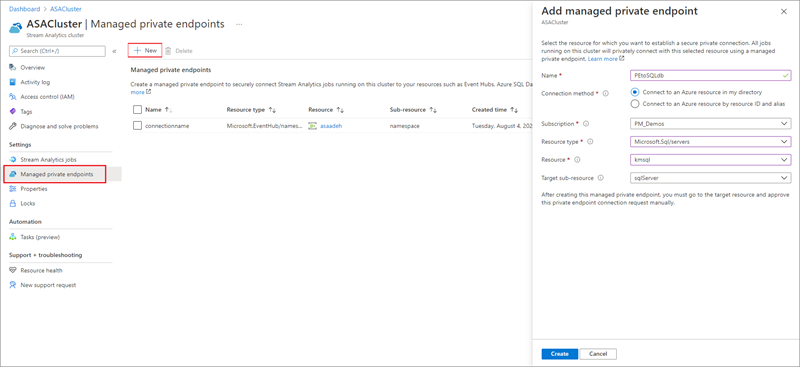

You can now use Stream Analytics clusters to securely connect your jobs to dedicated Synapse SQL pools through managed private endpoints, so the jobs can write to those pools. Setting this up is a simple two-step operation. First, add a Synapse SQL output to your job. Then, in your Stream Analytics cluster, add a managed private endpoint that establishes a secure, private connection between your resources. Learn how to configure managed private endpoints in your Stream Analytics cluster.

Source: General availability: Managed private endpoint support to Synapse SQL output

The general purpose Dps v5 and Dpds v5 Azure Virtual Machine series can run popular Linux enterprise workloads such as web and application servers, open-source databases, Java and .NET applications, gaming and media servers, and more. The new VMs provide up to 4 GiB of memory per vCPU in sizes with up to 64 vCPUs, 208 GiB of memory, and 40 Gbps networking, with and without local temporary storage.