Azure Daily 2023

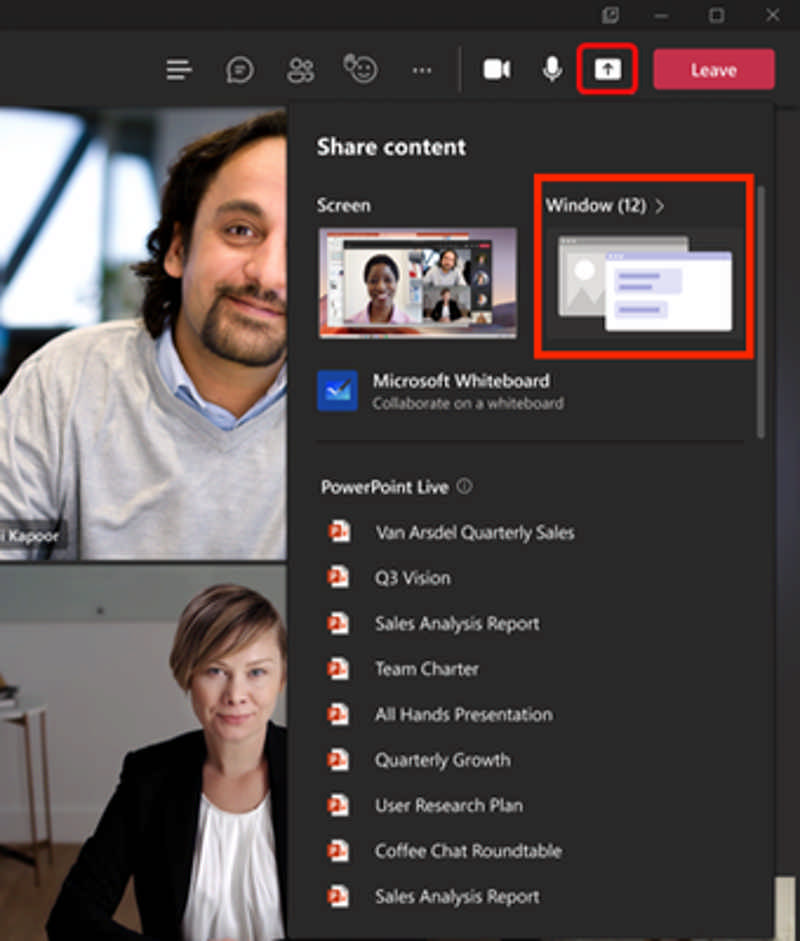

Microsoft has announced that application window sharing in Microsoft Teams is now generally available on Azure Virtual Desktop for Windows users.

Application window sharing now allows users to select a specific window from their desktop screen to share. Previously, users could only share their entire desktop window or a Microsoft PowerPoint Live presentation. Application window sharing helps reduce the risk of showing sensitive content during meetings/calls and keeps meetings focused by directing participants to specific content.

Read more at Azure Daily 2022

As a central authentication repository used by Azure, Azure Active Directory allows you to store objects such as users, groups, or service principals as identities. Azure AD also allows you to use those identities to authenticate with different Azure services. Azure AD authentication is supported for Azure SQL Database, Azure SQL Managed Instance, SQL Server on Windows Azure VMs, Azure Synapse Analytics, and now we are bringing it to SQL Server 2022.

Source: Generally available: Azure Active Directory authentication for SQL Server 2022

General Availability: Azure Active Directory authentication for exporting and importing Managed Disks

Azure already supports restricting disk import and export to a trusted Azure Virtual Network (VNET) using Azure Private Link. For greater security, we are launching the integration with Azure Active Directory (AD) to export and import data to Azure Managed Disks. This feature enables the system to validate the identity of the requesting user in Azure AD and verify that the user has the required permissions to export and import that disk.

This feature is now generally available. To learn more, read the documentation: Download VHD or Upload a VHD to a managed disk.

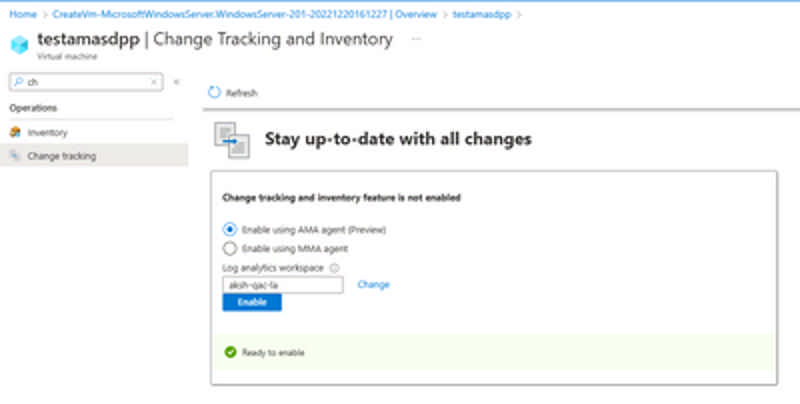

The Change Tracking and Inventory service tracks changes to Files, Registry, Software, Services and Daemons and uses the MMA (Microsoft Monitoring Agent)/OMS (Operations Management Suite) agent. This preview supports the new Azure Monitor Agent (AMA) and enhances the following:

- Security/Reliability and Scale – The Azure Monitor Agent enhances security and reliability, and facilitates a multi-homing experience for storing data.

- Simplified onboarding – You can onboard Virtual Machines directly to Change Tracking without needing to configure an Automation account. In new experience, there is

- Rules management – Uses Data Collection Rules to configure or customize various aspects of data collection. For example, you can change the frequency of file collection. DCRs let you configure data collection for specific machines connected to a workspace as compared to the "all or nothing" approach of legacy agents.

Public Preview: Azure Automation Visual Studio Code Extension

You can now use the Azure Automation extension to quickly create and manage runbooks. All runbook creation and management operations, such as editing runbooks, triggering jobs, tracking recent jobs, linking a schedule or webhook, asset management, local debugging, and many more, are supported in the extension to make it easier for you to work in the IDE-like interface provided by VS Code.

Source: Public Preview: Azure Automation Visual Studio Code Extension

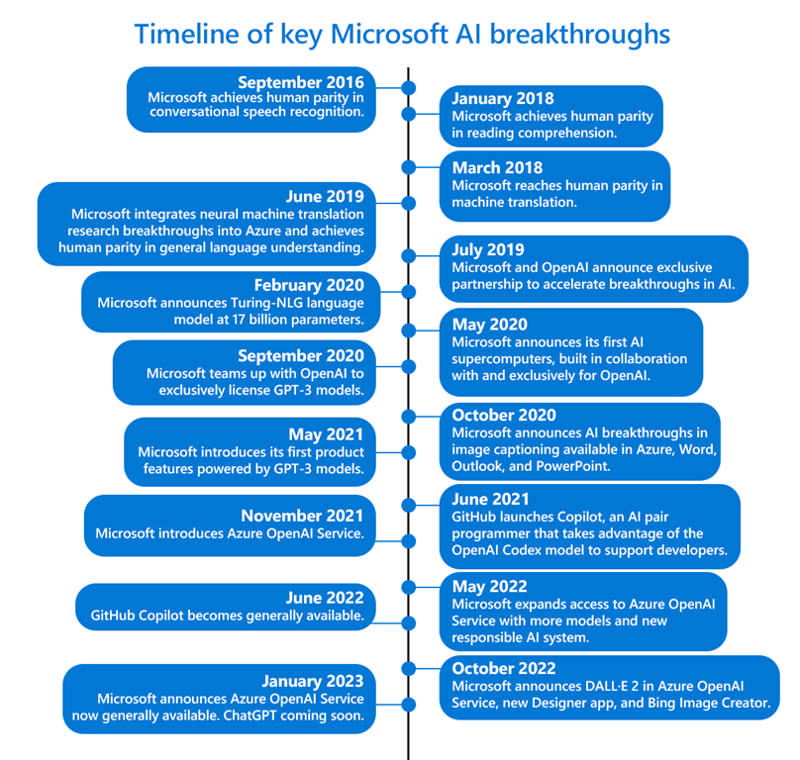

Large language models are quickly becoming an essential platform for people to innovate, apply AI to solve big problems, and imagine what’s possible. Today, we are excited to announce the general availability of Azure OpenAI Service as part of Microsoft’s continued commitment to democratizing AI, and ongoing partnership with OpenAI.

With Azure OpenAI Service now generally available, more businesses can apply for access to the most advanced AI models in the world—including GPT-3.5, Codex, and DALL•E 2—backed by the trusted enterprise-grade capabilities and AI-optimized infrastructure of Microsoft Azure, to create cutting-edge applications. Customers will also be able to access ChatGPT—a fine-tuned version of GPT-3.5 that has been trained and runs inference on Azure AI infrastructure—through Azure OpenAI Service soon.

Empowering customers to achieve more

We debuted Azure OpenAI Service in November 2021 to enable customers to tap into the power of large-scale generative AI models with the enterprise promises customers have come to expect from our Azure cloud and computing infrastructure—security, reliability, compliance, data privacy, and built-in Responsible AI capabilities.

Since then, one of the most exciting things we’ve seen is the breadth of use cases Azure OpenAI Service has enabled our customers—from generating content that helps better match shoppers with the right purchases to summarizing customer service tickets, freeing up time for employees to focus on more critical tasks.

Customers of all sizes across industries are using Azure OpenAI Service to do more with less, improve experiences for end-users, and streamline operational efficiencies internally. From startups like Moveworks to multinational corporations like KPMG, organizations small and large are applying the capabilities of Azure OpenAI Service to advanced use cases such as customer support, customization, and gaining insights from data using search, data extraction, and classification.

“At Moveworks, we see Azure OpenAI Service as an important component of our machine learning architecture. It enables us to solve several novel use cases, such as identifying gaps in our customer’s internal knowledge bases and automatically drafting new knowledge articles based on those gaps. This saves IT and HR teams a significant amount of time and improves employee self-service. Azure OpenAI Service will also radically enhance our existing enterprise search capabilities and supercharge our analytics and data visualization offerings. Given that so much of the modern enterprise relies on language to get work done, the possibilities are endless—and we look forward to continued collaboration and partnership with Azure OpenAI Service.”—Vaibhav Nivargi, Chief Technology Officer and Founder at Moveworks.

“Al Jazeera Digital is constantly exploring new ways to use technology to support our journalism and better serve our audience. Azure OpenAI Service has the potential to enhance our content production in several ways, including summarization and translation, selection of topics, AI tagging, content extraction, and style guide rule application. We are excited to see this service go to general availability so it can help us further contextualize our reporting by conveying the opinion and the other opinion.”—Jason McCartney, Vice President of Engineering at Al Jazeera.

“KPMG is using Azure OpenAI Service to help companies realize significant efficiencies in their Tax ESG (Environmental, Social, and Governance) initiatives. Companies are moving to make their total tax contributions publicly available. With much of these tax payments buried in IT systems outside of finance, massive data volumes, and incomplete data attributes, Azure OpenAI Service finds the data relationships to predict tax payments and tax type—making it much easier to validate accuracy and categorize payments by country and tax type.”—Brett Weaver, Partner, Tax ESG Leader at KPMG.

Azure—the best place to build AI workloads

The general availability of Azure OpenAI Service is not only an important milestone for our customers but also for Azure.

Azure OpenAI Service provides businesses and developers with high-performance AI models at production scale with industry-leading uptime. This is the same production service that Microsoft uses to power its own products, including GitHub Copilot, an AI pair programmer that helps developers write better code, Power BI, which leverages GPT-3-powered natural language to automatically generate formulae and expressions, and the recently-announced Microsoft Designer, which helps creators build stunning content with natural language prompts.

All of this innovation shares a common thread: Azure’s purpose-built, AI-optimized infrastructure.

Azure is also the core computing power behind OpenAI API’s family of models for research advancement and developer production.

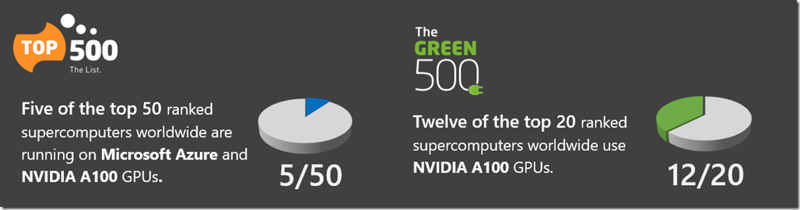

Azure is currently the only global public cloud that offers AI supercomputers with massive scale-up and scale-out capabilities. With a unique architecture design that combines leading GPU and networking solutions, Azure delivers best-in-class performance and scale for the most compute-intensive AI training and inference workloads. It’s the reason the world’s leading AI companies—including OpenAI, Meta, Hugging Face, and others—continue to choose Azure to advance their AI innovation. Azure currently ranks in the top 15 of the TOP500 supercomputers worldwide and is the highest-ranked global cloud services provider today. Azure continues to be the cloud and compute power that propels large-scale AI advancements across the globe.

Source: TOP500 The List: TOP500 November 2022, Green500 November 2022.

A responsible approach to AI

As an industry leader, we recognize that any innovation in AI must be done responsibly. This becomes even more important with powerful, new technologies like generative models. We have taken an iterative approach to large models, working closely with our partner OpenAI and our customers to carefully assess use cases, learn, and address potential risks. Additionally, we’ve implemented our own guardrails for Azure OpenAI Service that align with our Responsible AI principles. As part of our Limited Access Framework, developers are required to apply for access, describing their intended use case or application before they are given access to the service. Content filters uniquely designed to catch abusive, hateful, and offensive content constantly monitor the input provided to the service as well as the generated content. In the event of a confirmed policy violation, we may ask the developer to take immediate action to prevent further abuse.

We are confident in the quality of the AI models we are using and offering customers today, and we strongly believe they will empower businesses and people to innovate in entirely new and exciting ways.

The pace of innovation in the AI community is moving at lightning speed. We’re tremendously excited to be at the forefront of these advancements with our customers, and look forward to helping more people benefit from them in 2023 and beyond.

Getting started with Azure OpenAI Service

- Learn more about Azure OpenAI Service and more about all the latest enhancements.

- Apply for access to Azure OpenAI Service. Once approved for use, customers can log in to the Azure portal to create an Azure OpenAI Service resource and then get started either in our Studio website or via code.

- Read the blog: AI and the need for purpose-built cloud infrastructure.

- Watch a video with tips on how to get started with Azure OpenAI Service.
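For the "via code" route mentioned in the list above, the sketch below shows roughly what a text-completion request to an Azure OpenAI Service resource could look like. It only builds the HTTP request and does not send it. The resource name, deployment name, key placeholder, and API version are illustrative assumptions, not values from this article; check the service's REST reference for the versions and fields that apply to your resource.

```python
import json
import urllib.request


def build_completion_request(resource, deployment, api_key, prompt,
                             api_version="2022-12-01", max_tokens=64):
    """Build (but do not send) a completions request for one model deployment."""
    # The URL names the resource and the deployment created in the Azure portal.
    url = (
        f"https://{resource}.openai.azure.com/openai/deployments/"
        f"{deployment}/completions?api-version={api_version}"
    )
    body = json.dumps({"prompt": prompt, "max_tokens": max_tokens}).encode("utf-8")
    # Key-based authentication passes the resource key in the "api-key" header.
    headers = {"Content-Type": "application/json", "api-key": api_key}
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


# Hypothetical names, for illustration only.
req = build_completion_request(
    "my-resource", "my-deployment", "<your-key>",
    "Summarize this customer service ticket in one sentence.",
)
print(req.get_method(), req.full_url)
```

With a real resource and key, passing `req` to `urllib.request.urlopen` would send the request and return the JSON response.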

Generally available: Azure Ultra Disk Storage in Switzerland North and Korea South

Azure Ultra Disk Storage is now available in one zone in Switzerland North and with Regional VMs in Korea South. Azure Ultra Disk Storage offers high throughput, high IOPS, and consistent low latency disk storage for Azure Virtual Machines (VMs). Ultra Disk Storage is well-suited for data-intensive workloads such as SAP HANA, top-tier databases, and transaction-heavy workloads.

Source: Generally available: Azure Ultra Disk Storage in Switzerland North and Korea South

GitHub Actions: OpenID Connect token now supports more claims for configuring granular cloud access

OpenID Connect (OIDC) support in GitHub Actions enables secure cloud deployments using short-lived tokens that are automatically rotated for each deployment.

Each OIDC token includes standard claims like the audience, issuer, and subject, plus many more custom claims that uniquely define the workflow job that generated the token. These claims can be used to define fine-grained trust policies that control access to specific cloud roles and resources.

These changes enable developers to define more advanced access policies using OpenID Connect and perform more secure cloud deployments at scale with GitHub Actions.
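To make the idea of a claim-based trust policy concrete, here is a minimal sketch that reads the claims from a token and checks them against a set of required values. It is an illustration, not how any cloud provider implements the check: it does not verify the token signature, which a real relying party must do first, and the sample token, repository name, and audience value are made up for the demonstration.

```python
import base64
import json

GITHUB_ISSUER = "https://token.actions.githubusercontent.com"


def decode_claims(token):
    """Return the claims (payload) of a JWT WITHOUT verifying its signature."""
    payload_b64 = token.split(".")[1]
    payload_b64 += "=" * (-len(payload_b64) % 4)  # restore stripped base64 padding
    return json.loads(base64.urlsafe_b64decode(payload_b64))


def is_trusted(claims, policy):
    """True when every claim named in the policy has exactly the required value."""
    return all(claims.get(name) == value for name, value in policy.items())


def _b64url(obj):
    """Encode a dict the way JWT segments are encoded (base64url, no padding)."""
    raw = json.dumps(obj).encode("utf-8")
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


# A hand-made, unsigned token with made-up values, for demonstration only.
sample_claims = {
    "iss": GITHUB_ISSUER,
    "aud": "api://AzureADTokenExchange",
    "sub": "repo:octo-org/octo-repo:environment:production",
    "repository": "octo-org/octo-repo",
    "environment": "production",
}
sample_token = ".".join([_b64url({"alg": "none"}), _b64url(sample_claims), ""])

# Hypothetical policy: only production jobs from one repository are trusted.
policy = {
    "iss": GITHUB_ISSUER,
    "repository": "octo-org/octo-repo",
    "environment": "production",
}
print(is_trusted(decode_claims(sample_token), policy))
```

The more claims a token carries, the narrower such a policy can be, for example by also pinning the branch or the reusable workflow that produced the job.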

Source: GitHub Actions: OpenID Connect token now supports more claims for configuring granular cloud access

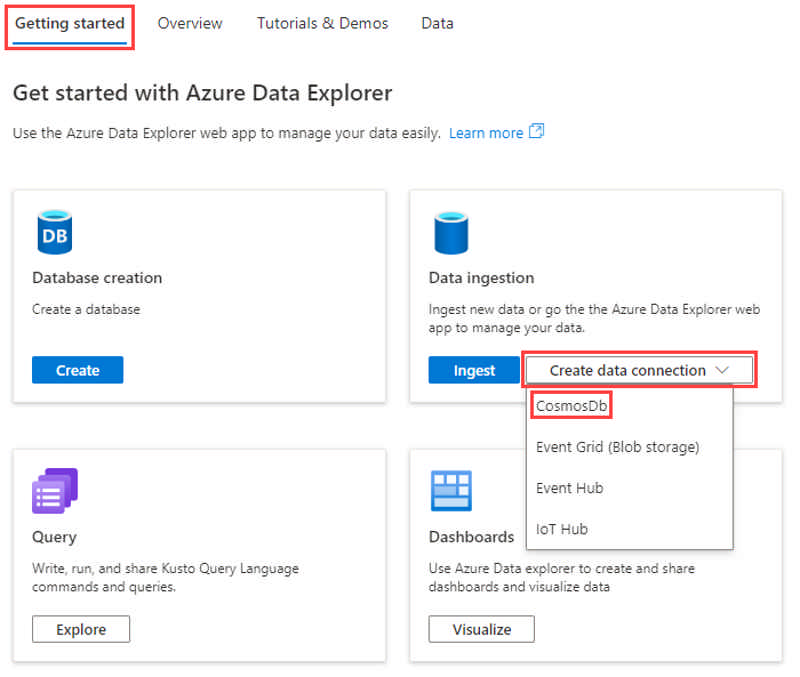

Azure Data Explorer (ADX) now supports managed ingestion from Azure Cosmos DB.

This feature enables near real-time analytics on Cosmos DB data in a managed setting (ADX data connection). Since ADX supports Power BI direct query, it enables near real-time Power BI reporting. The latency between Cosmos DB and ADX can be as low as sub-seconds (using streaming ingestion).

This brings the best of both worlds: fast, low-latency transactional workloads with Azure Cosmos DB and fast, ad hoc analytics with Azure Data Explorer.

Only Azure Cosmos DB NoSQL is supported.

Source: Public Preview: Azure Cosmos DB to Azure Data Explorer Synapse Link

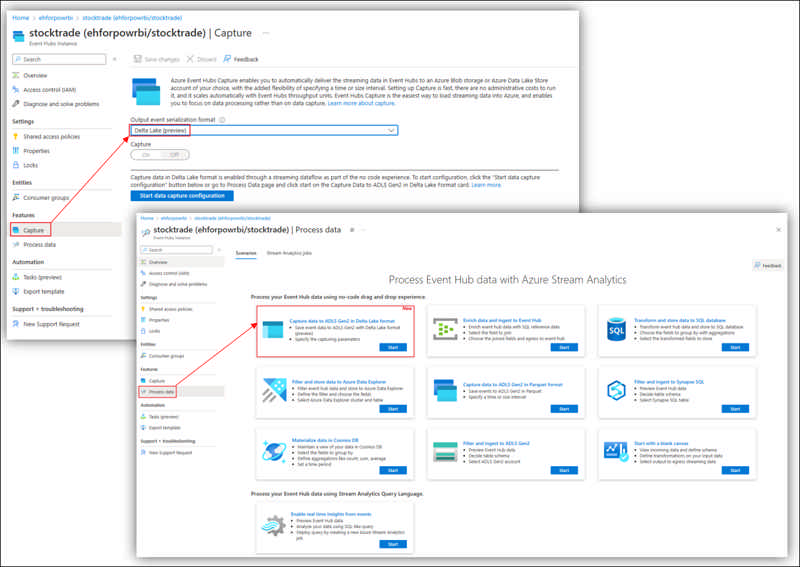

Delta Lake is open source software that extends Parquet data files with a file-based transaction log for ACID transactions and scalable metadata handling. The Stream Analytics no-code editor now provides an easy drag-and-drop way to capture your Event Hubs data into ADLS Gen2 in Delta Lake format without writing any code. A predefined canvas template is available to further speed up capturing data in this format.

To access this capability, simply go to your Event Hubs in Azure portal -> Features -> Process data or Capture.
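The "file-based transaction log" behind Delta Lake can be pictured with a small toy model. In the sketch below, each commit is a block of newline-delimited JSON actions that add or remove Parquet files, and replaying the commits in order yields the files that make up the current table. This is a simplified illustration with made-up file names: the real log also records other action types, such as metadata and protocol entries, and uses checkpoints, none of which are modeled here.

```python
import json


def live_files(commits):
    """Replay commits in order and return the data files currently in the table."""
    files = set()
    for commit in commits:
        for line in commit.splitlines():
            action = json.loads(line)
            if "add" in action:
                files.add(action["add"]["path"])
            elif "remove" in action:
                files.discard(action["remove"]["path"])
    return files


# Two made-up commits: the second compacts two small files into one.
commit_0 = "\n".join([
    json.dumps({"add": {"path": "part-0000.parquet"}}),
    json.dumps({"add": {"path": "part-0001.parquet"}}),
])
commit_1 = "\n".join([
    json.dumps({"remove": {"path": "part-0000.parquet"}}),
    json.dumps({"remove": {"path": "part-0001.parquet"}}),
    json.dumps({"add": {"path": "part-0002.parquet"}}),
])

print(sorted(live_files([commit_0])))
print(sorted(live_files([commit_0, commit_1])))
```

Because a reader only ever sees the result of whole commits, a writer can replace files atomically, which is the basis for the ACID behavior described above.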

Source: Public preview: Capture Event Hubs data with Stream Analytics no-code editor in Delta Lake format

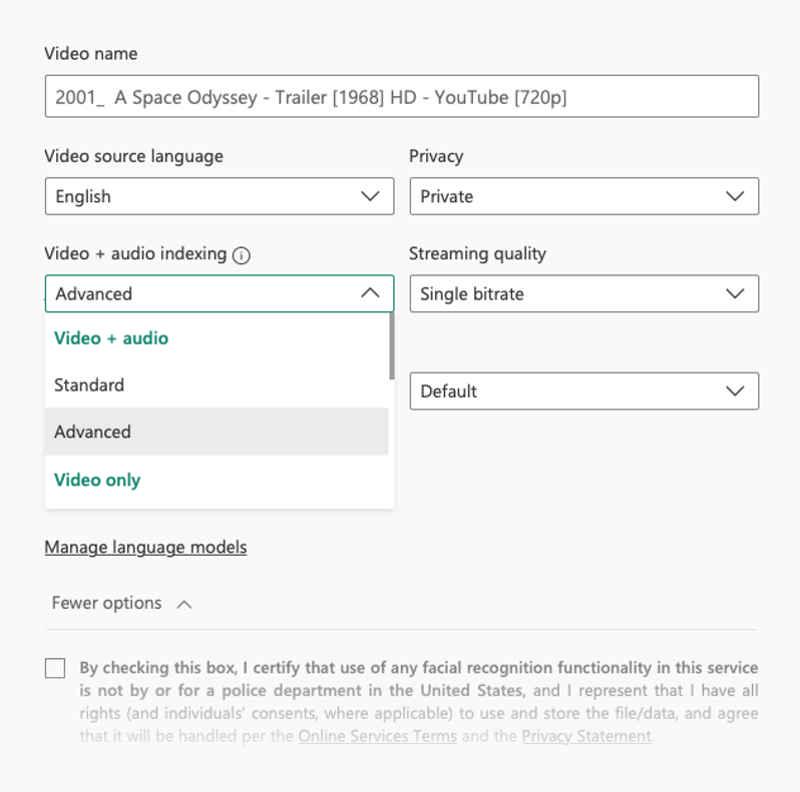

With the "Featured Clothing" insight, you can identify key items worn by individuals within videos. This enables high-quality in-video contextual advertising by matching relevant clothing ads with the specific time within the video in which they are viewed. "Featured Clothing" is now available through the Azure Video Indexer advanced preset. With the new featured clothing insight information, you can enable more targeted ad placement.

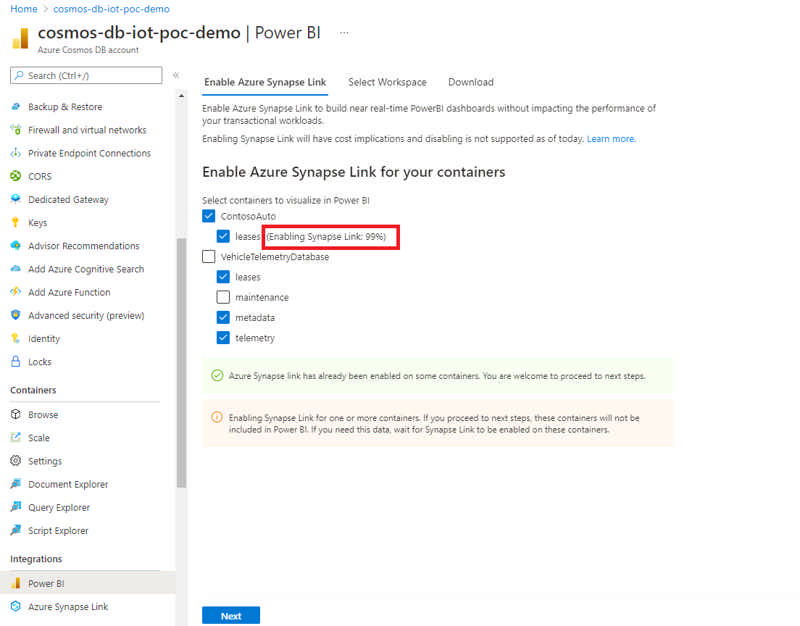

The new Azure Cosmos DB connector V2 for Power BI now allows you to import data into your dashboards using the DirectQuery mode, in addition to the previously available Import mode.

The DirectQuery mode in the V2 connector is helpful in scenarios where the Azure Cosmos DB container data volume is too large to be imported into the Power BI cache via Import mode. It is also helpful in scenarios where real-time reporting with the latest ingested data is a requirement. This feature can help you reduce data movement between Azure Cosmos DB and Power BI, with filtering and aggregations being pushed down. DirectQuery also has performance optimizations related to query pushdown and data serialization.

Source: Public preview: Azure Cosmos DB V2 Connector for Power BI

We are certifying the IT Service Management Connector (ITSMC) on the Tokyo version of ServiceNow.

Azure services like Log Analytics and Azure Monitor provide tools to detect, analyze, and troubleshoot issues with your Azure and non-Azure resources, and they support work item integration with IT Service Management products. The ITSM connector provides a bi-directional connection between Azure and ITSM tools to help track and resolve issues faster.

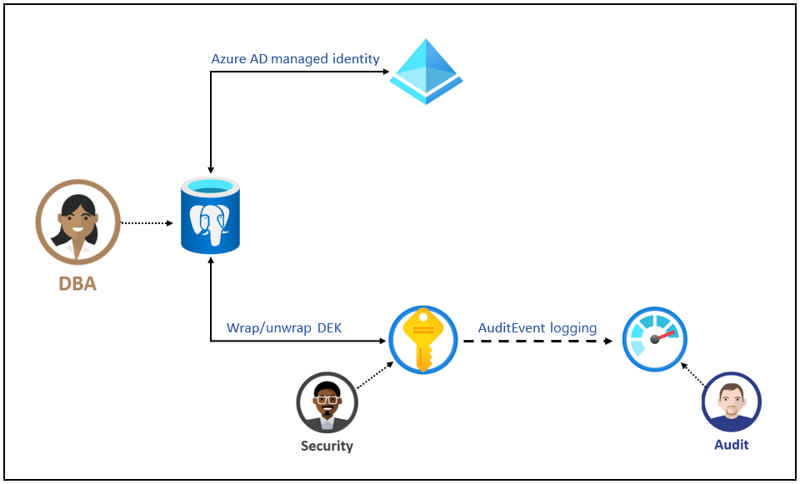

Azure Database for PostgreSQL – Flexible Server encrypts data at rest using service-managed encryption keys in limited Azure regions. Data, including backups, is encrypted on disk; this encryption is always on and can't be disabled. The encryption uses a FIPS 140-2 validated cryptographic module and an AES 256-bit cipher for the Azure storage encryption. Currently this feature is available in the Switzerland North, Switzerland West, Canada East, Canada Central, Southeast Asia, East Asia, and Brazil South regions.

Infrastructure encryption with customer-managed keys (CMK) adds a second layer of protection for your data at rest by encrypting service-managed keys with customer-managed keys. It uses a FIPS 140-2 validated cryptographic module, but with a different encryption algorithm. The customer-managed key used to encrypt the service-supplied key is stored in Azure Key Vault, providing additional security, high availability, and disaster recovery features.

Source: General availability: Encryption using CMK for Azure Database for PostgreSQL – Flexible Server

Azure Dedicated Host gives you more control over the hosts you deploy by giving you the option to restart any host. During a restart, the host and its associated VMs restart while staying on the same underlying physical hardware.

With this new capability, now generally available, you can take troubleshooting steps at the host level. This feature is currently available only in Azure public cloud and we will soon be launching this feature in sovereign clouds as well.

Today, customers and partners manage hundreds or even thousands of active databases. For each of these databases, it is essential to be able to create an accurate mapping of the active configurations. This could be for inventorying or even reporting purposes. Centralizing this database inventory in Azure using Azure Arc allows you to create a unified view of all your databases in one place regardless of which infrastructure those databases might be located on – in Azure, in your datacenter, in edge sites, or even in other clouds.

Source: Public Preview of Viewing SQL Server Databases via Azure Arc
